What is LLM in Security?

In the context of cybersecurity, LLM security refers to the set of practices, technologies, and governance policies used to protect large language models (LLMs) from vulnerabilities, misuse, and adversarial attacks. As LLMs such as GPT, Claude, Gemini, and others become embedded in enterprise AI systems, securing their inputs, outputs, training data, and operational environments is critical to maintaining trust, compliance, and resilience.

When left unprotected, LLMs can become gateways for data security breaches, misinformation, and even regulatory violations—making LLM security a cornerstone of responsible AI security.

What are Large Language Models (LLMs)?

Large Language Models are advanced machine learning algorithms trained on massive datasets to interpret, generate, and manipulate human language. These models—often based on transformer architectures—can predict and generate text with remarkable coherence, powering a wide range of GenAI (generative AI) applications.

Common LLM use cases include:

- Virtual assistants and chatbots

- Automated code generation

- Customer support automation

- Content creation and summarization

- Legal and healthcare document processing

Their flexibility and real-time adaptability make LLMs indispensable in modern organizations—but also expand their attack surface, requiring continuous oversight and AI security controls.

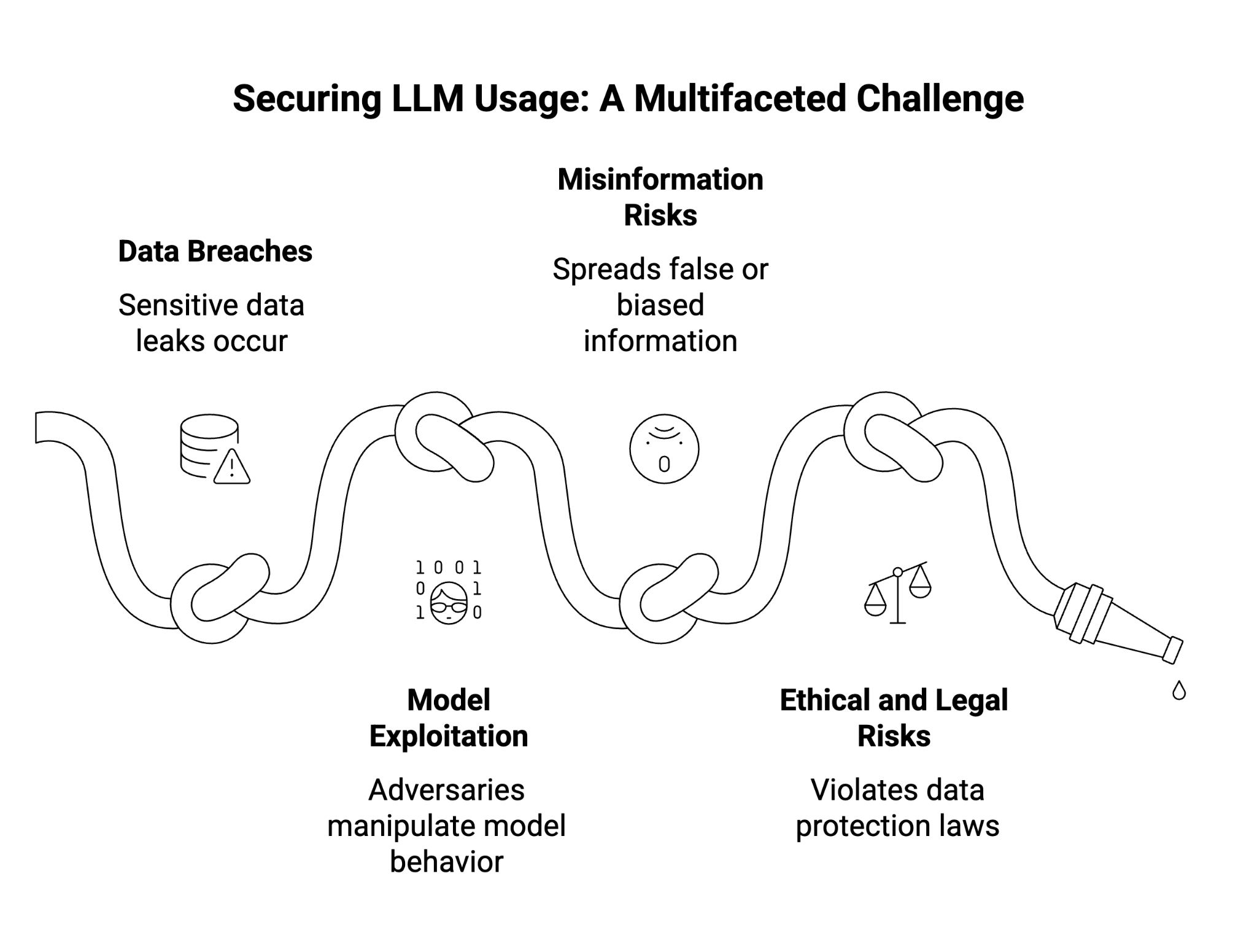

Importance of Security in LLM Usage

As artificial intelligence systems scale across industries, the importance of securing LLMs becomes paramount. Weak or poorly governed LLM integrations can expose enterprises to multiple risk vectors:

Data Breaches

LLMs trained on unfiltered or proprietary data can inadvertently memorize and reproduce sensitive information, such as authentication credentials, trade secrets, or personal identifiers. Without robust data security measures, these leaks can trigger regulatory penalties and reputational damage.

Model Exploitation

Adversaries may exploit prompt injection, data poisoning, or insecure APIs to manipulate model behavior. When connected to downstream systems (like CRMs, databases, or automation tools), a compromised LLM can act as a launchpad for wider cybersecurity incidents.

Misinformation and Output Risks

Unvalidated LLM outputs can spread false or biased information—especially problematic in critical fields like healthcare, finance, or law. Lack of validation pipelines and human review amplifies these risks.

Ethical and Legal Risks

Using unverified or opaque GenAI models may violate data protection and intellectual property laws. For organizations under frameworks like GDPR or the EU AI Act, failure to validate training sources or safeguard model outputs can result in non-compliance and loss of trust.

In short: Secure LLM usage is not only a technical challenge—it’s a governance, ethics, and compliance mandate.

Top LLM Security Threats

As organizations adopt large language models (LLMs) across critical business functions, understanding their unique security risks is essential. Unlike traditional software, LLMs can be manipulated through language-based inputs, unfiltered data, or insecure integrations—creating new and often unpredictable vulnerabilities. The following section outlines the top LLM security threats organizations must recognize and mitigate to ensure the safe, compliant, and trustworthy use of generative AI systems.

1. Prompt Injection

Attackers craft malicious inputs that override instructions or trigger unintended behavior. These indirect prompt injection techniques can manipulate downstream actions, especially when models are connected to plugins, APIs, or other systems.

2. Data Leakage

LLMs may expose sensitive information from training datasets or prior user interactions, leading to data privacy violations or security breaches.

3. Insecure Third-Party Integrations

Connecting LLMs to unvetted APIs, plugins, or data sources introduces supply chain vulnerabilities, especially when output handling is not properly sanitized.

4. Model Theft and Reverse Engineering

Adversaries may attempt to steal or reverse-engineer a proprietary llm model, gaining access to training techniques or valuable datasets.

5. Malicious Output Generation

Without proper output validation, models can produce harmful or misleading content, including phishing emails, false medical advice, or even code execution instructions leading to XSS or denial of service.

6. Jailbreaking and Safety Filter Evasion

Users can employ sophisticated jailbreak prompts to bypass safety filters, enabling access to harmful or restricted outputs.

7. Inadequate Access Controls

Lack of role-based permissions or authentication mechanisms can lead to unauthorized access and potential misuse of LLMs.

8. Training Data Poisoning

Malicious actors can insert harmful patterns into public or enterprise data sources used for fine-tuning, corrupting model behavior over time.

9. Overreliance Without Validation

Blindly trusting llm outputs without human or algorithmic validation can lead to the spread of inaccurate, biased, or offensive content.

10. Regulatory and Compliance Violations

Failure to align LLM practices with laws such as GDPR, HIPAA, or data protection regulations can expose organizations to legal penalties.

Who Is Responsible for LLM Security?

LLM security is a shared responsibility across the AI ecosystem:

- Model Providers (e.g., OpenAI, Anthropic) must ensure secure training data sourcing, model alignment, and safety filters.

- Enterprise Users must implement application security, access controls, and continuous monitoring.

- IT and Security Teams need to enforce cybersecurity best practices and conduct regular audits.

- Developers must implement input sanitization, validation, and ethical usage guidelines.

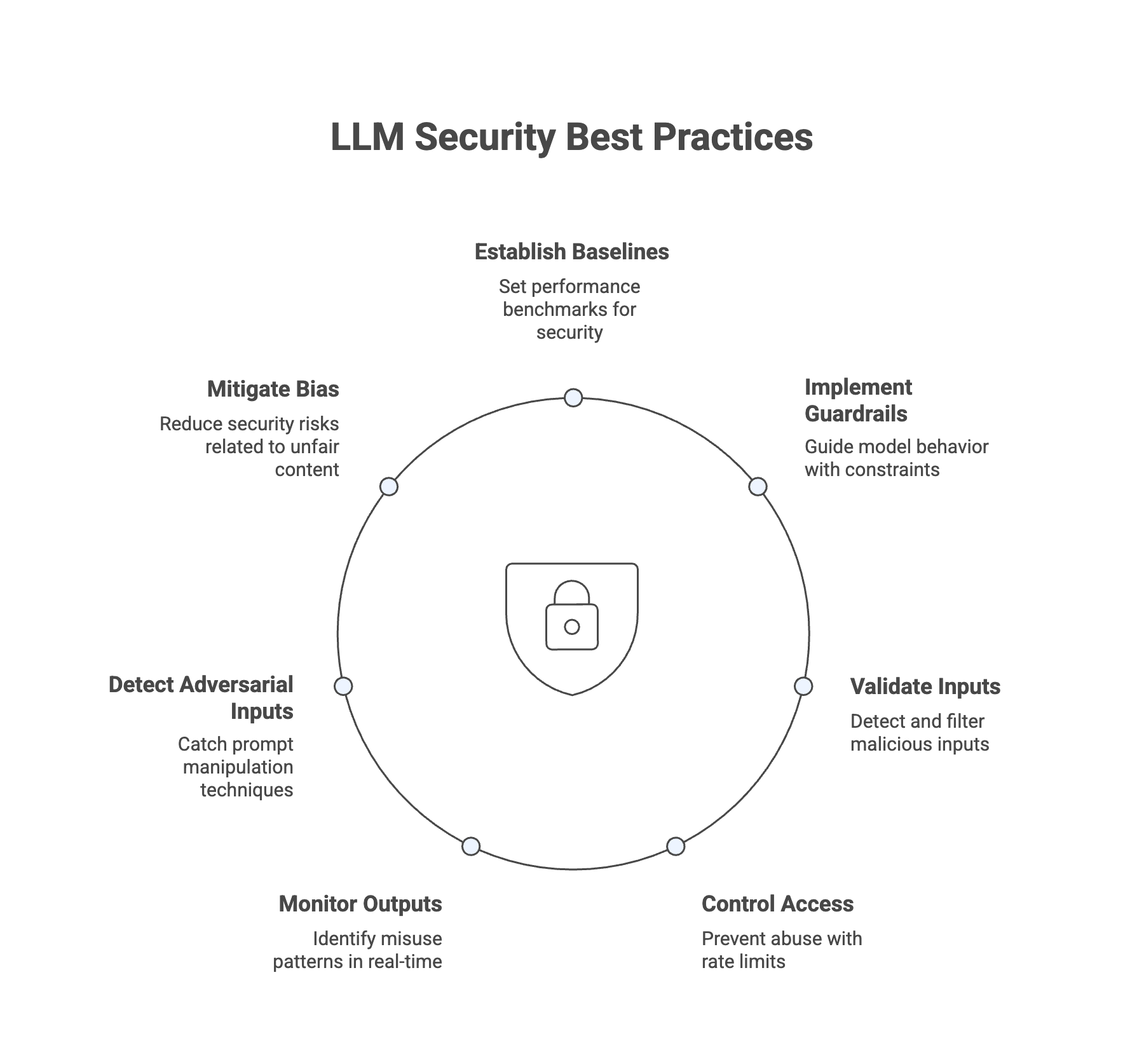

How Can Organizations Secure LLMs?

1. Measurement and Benchmarking

Establish and track performance baselines across security posture, output quality, bias, and compliance to detect anomalies.

2. Guardrails

Implement safeguards such as keyword filters, output constraints, and contextual boundaries to guide model behavior.

3. Input Validation and Filtering

Use input validation and sanitization to detect malicious inputs, such as embedded commands or misleading prompts.

4. Rate Limiting and Access Controls

Apply rate limits and role-based access controls to prevent abuse and unauthorized access to LLM endpoints.

5. Model Behavior Monitoring

Continuously monitor llm outputs in real-time to identify misuse patterns, insecure output handling, or drift in performance.

6. Adversarial Input Detection

Deploy techniques such as red teaming, automated stress testing, and embedding similarity checks to catch prompt manipulation.

7. Bias Detection and Mitigation

Evaluate LLMs for algorithmic bias by simulating real-world queries and fine-tune models to reduce security risks related to unfair or offensive content.

Conclusion

As generative AI continues to transform how businesses operate, the importance of LLM security cannot be overstated. From prompt injection attacks to training data poisoning, the threats to large language models are complex and evolving. Organizations must implement layered security measures, combining access controls, output validation, monitoring, and compliance safeguards to protect against misuse, maintain trust, and ensure safe deployment across llm applications.

About WitnessAI

WitnessAI enables safe and effective adoption of enterprise AI, through security and governance guardrails for public and private LLMs. The WitnessAI Secure AI Enablement Platform provides visibility of employee AI use, control of that use via AI-oriented policy, and protection of that use via data and topic security. Learn more at https://witness.ai.