This article explores what LLM guardrails are, the benefits they offer, the different types available, and how organizations can implement them effectively.

Large Language Models (LLMs) have become the backbone of generative AI systems, powering chatbots, copilots, search assistants, summarizers, and more. Their ability to understand and generate natural language at scale has unlocked new use cases across industries—from legal and healthcare to customer service and software development.

However, the very power of LLMs brings new security challenges. These models can be manipulated, misused, or simply behave unpredictably. That’s why LLM guardrails are essential. They provide structure, safety, and reliability for deploying AI responsibly in real-world environments.

What Are LLM Guardrails?

LLM guardrails are structured mechanisms designed to constrain and guide the behavior of large language models in both input handling and output generation. Their purpose is to reduce risks associated with misuse, security vulnerabilities, and unintended behavior in generative AI systems.

Guardrails can be rule-based, embedding-based, or model-assisted, and they work at various stages of the LLM lifecycle—from validating user inputs to modifying or filtering LLM outputs. These frameworks are designed to ensure that AI systems align with organizational policies, regulatory requirements, and ethical norms.

Popular toolkits like NVIDIA NeMo Guardrails, Guardrails AI, and Microsoft’s safety layers demonstrate how guardrails can be implemented in production. Developers can also create custom filters using Python, regex, and validation templates.

LLM guardrails are critical in AI systems that:

- Expose public interfaces (e.g., chatbots, APIs)

- Process sensitive or proprietary data

- Generate content for end users

- Operate in regulated industries (healthcare, finance, legal)

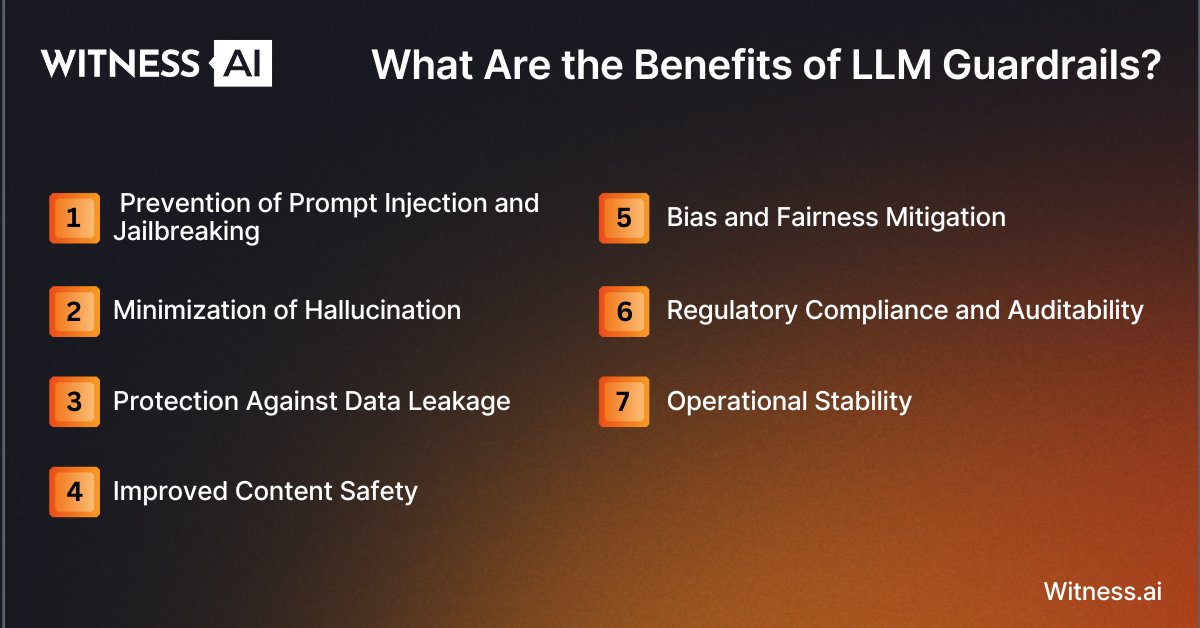

What Are the Benefits of LLM Guardrails?

Integrating guardrails into LLM workflows provides foundational safeguards for building reliable, ethical, and secure AI systems. Below are key advantages:

1. Prevention of Prompt Injection and Jailbreaking

Without guardrails, LLMs are vulnerable to prompt injection—a technique where users manipulate the input prompt to override intended behavior. Attackers may also attempt jailbreaks, tricking the model into producing prohibited outputs. Guardrails recognize these attempts and stop them at the source.

2. Minimization of Hallucination

LLMs often hallucinate—confidently generating false or misleading information. Guardrails can enforce fact-checking, introduce retrieval-augmented generation (RAG), and validate LLM responses to reduce hallucinated content.

3. Protection Against Data Leakage

Trained on vast datasets, LLMs can sometimes output snippets of sensitive training data, including PII or proprietary information. Output filters can detect and suppress such leaks in real-time.

4. Improved Content Safety

Guardrails prevent the generation of toxic, offensive, or illegal content by applying NLP classifiers and blocklists. This ensures AI systems avoid producing inappropriate content or violating content moderation policies.

5. Bias and Fairness Mitigation

AI systems can perpetuate or amplify biases present in training data. Guardrails can flag biased responses and substitute more neutral outputs, supporting fairness in automated decision-making.

6. Regulatory Compliance and Auditability

In industries subject to data protection laws like GDPR or HIPAA, guardrails help enforce compliance by ensuring that AI interactions remain within the bounds of privacy regulations. They also provide audit logs and explainability.

7. Operational Stability

Guardrails make AI systems more predictable. This reduces edge cases and user errors, ensuring that real-time applications function with lower latency, higher reliability, and repeatable behavior.

What Are the Types of LLM Guardrails?

LLM guardrails can be broadly classified into input guardrails (proactive) and output guardrails (reactive). Each category addresses a different phase of the model interaction.

Input Guardrails

Input guardrails evaluate, validate, or transform incoming user prompts to prevent malicious or dangerous queries from reaching the model.

Prompt Injection

Prompt injection exploits system instructions by inserting adversarial content. Guardrails use pattern detection (e.g., prompt delimiters or nested instructions) to block or reformat such inputs.

Jailbreaking

Guardrails analyze the structure and intent of prompts to detect jailbreak attempts, which try to disable safety filters. Techniques include embedding comparisons, few-shot examples, or matching known jailbreak patterns.

Privacy Filters

Inputs containing PII—such as names, birthdates, account numbers—are flagged and masked. Filters may use NER (Named Entity Recognition) or regex to enforce privacy constraints.

Topic Restriction

Certain use cases require banning discussions around sensitive topics—e.g., medical advice, adult content, political activism. Guardrails enforce topic-based restrictions using classifiers trained on topic-specific datasets.

Toxicity Detection

User inputs are scored for toxicity, hate speech, or profanity using tools like Perspective API or custom classifiers. Inputs above a risk threshold are rejected or rephrased.

Code Injection Defense

Inputs attempting to inject executable code (e.g., in Python or JavaScript) are flagged to prevent backend abuse, especially in code execution APIs or developer assistants.

Output Guardrails

Output guardrails apply after the model has generated a response. They intercept problematic content before it reaches the user.

Data Leakage

Guardrails check for the presence of sensitive data, including credentials, training artifacts, or user-specific information, and redact or block them accordingly.

Toxicity

Guardrails suppress toxic or harmful output, especially in conversational systems. They apply NLP toxicity scoring or template-based rules to modify or reject outputs.

Bias and Fairness

Output is reviewed for signs of racial, gender, or socioeconomic bias, particularly in decision-making contexts like hiring or lending. Scoring systems or alternative templates may be applied.

Hallucination

Responses are compared against knowledge bases, fact-checking tools, or external APIs to validate factual accuracy. RAG techniques are often combined with output guardrails.

Syntax Validation

When LLMs generate code, schemas, or structured data formats like JSON or XML, syntax guardrails ensure the output is valid and executable. This is especially relevant for function calling, embedding, and template-based outputs.

Illegal Activity Detection

Output guardrails detect and block responses related to criminal activity—such as generating malware, accessing dark web content, or creating phishing messages.

How Do LLM Guardrails Improve AI Security?

Guardrails serve as a critical line of defense across multiple threat vectors in LLM-powered systems:

- Attack Surface Reduction: Guardrails limit how users can manipulate or exploit AI models via inputs, minimizing attack vectors like prompt chaining or sandbox escape.

- Data Protection: Guardrails prevent LLMs from exposing internal training data, safeguarding both enterprise and personal data.

- Integrity and Trust: Guardrails prevent hallucinated facts or unauthorized behavior, ensuring that users and stakeholders can trust AI-generated content.

- Misuse Prevention: Guardrails stop LLMs from being used for malicious purposes, such as writing ransomware scripts, recommending banned substances, or aiding in scams.

- Safe Open-Source Deployment: Open-source models like LLaMA or GPT-J can be fine-tuned or deployed privately, but guardrails are required to ensure their outputs remain secure.

Together, these defenses promote responsible AI development and deployment, building trust among users, developers, and regulators alike.

How to Implement LLM Guardrails

Effective implementation of LLM guardrails involves a combination of technical tooling, organizational policy, and continuous iteration. Here’s a step-by-step approach:

1. Assess LLM Use Cases and Risk Exposure

Map each LLM application to potential risks. A customer support bot might need strong toxicity filters, while a legal assistant may require fact-checking and hallucination detection.

2. Select or Develop a Guardrail Framework

Leverage platforms like:

- NVIDIA NeMo Guardrails (open-source, Python-based, customizable)

- Guardrails AI (schema-first validation toolkit)

- LangChain & LlamaIndex integrations

- Custom tools using regex, embedding matching, and prompt engineering

3. Embed Guardrails Into Your Pipeline

Guardrails should be tightly coupled with your LLM workflow—whether it’s hosted in OpenAI, Azure, Anthropic, or on-prem. Middleware layers can validate inputs before the model and outputs before user delivery.

4. Monitor and Benchmark Guardrail Effectiveness

Track metrics like:

- Prompt rejection rate

- Output filter rate

- False positives/negatives

- Hallucination detection accuracy

- Latency impact

Use dashboards to visualize performance and optimize thresholds accordingly.

5. Incorporate Human Feedback

Use human reviewers or red team evaluations to catch bypasses, edge cases, and novel jailbreak strategies. Retrain your filters or update your rules with this feedback loop.

6. Continuously Update Models and Policies

New LLMs (e.g., GPT-4, LLaMA 3) and new threats emerge constantly. Stay current by following research communities, monitoring GitHub, and applying new prompt engineering techniques or guardrail models.

7. Establish Documentation and Governance

Maintain full documentation of guardrails: input rules, output schemas, filter thresholds, and fallback responses. This supports auditability, transparency, and cross-functional collaboration with legal and compliance teams.

Final Thoughts

As generative AI becomes integral to enterprise workflows, education, healthcare, and creative industries, securing these systems becomes non-negotiable. LLM guardrails serve as the control layer between AI capabilities and real-world safety.

Whether you’re building AI copilots, powering customer support chatbots, or deploying open-source LLMs, investing in strong guardrails ensures your systems are secure, compliant, and aligned with human values.

Don’t let your AI systems run unchecked. Guardrails are the bridge between innovation and responsibility.

About WitnessAI

WitnessAI enables safe and effective adoption of enterprise AI, through security and governance guardrails for public and private LLMs. The WitnessAI Secure AI Enablement Platform provides visibility of employee AI use, control of that use via AI-oriented policy, and protection of that use via data and topic security. Learn more at witness.ai.