What is AI Security?

AI security refers to the frameworks, policies, and best practices designed to safeguard AI systems from cybersecurity threats, vulnerabilities, and misuse. As artificial intelligence becomes deeply integrated into critical industries such as healthcare, finance, and national security, protecting AI models, datasets, and decision-making processes is essential. AI security encompasses risk management, data security, AI model security, and ethical deployment strategies to prevent adversarial attacks and ensure compliance with regulatory standards.

Why AI Security is Important

The use of AI in security operations and various AI applications has grown exponentially, but this expansion has also increased the attack surface for cyber threats. AI-powered systems rely on vast amounts of data, complex algorithms, and continuous learning, making them prime targets for security vulnerabilities. AI security is crucial for:

- Safeguarding sensitive data against data breaches and data poisoning.

- Protecting AI models from adversarial attacks that manipulate outputs.

- Ensuring compliance with regulations such as GDPR, HIPAA, and NIST AI risk management guidelines.

- Maintaining public trust in AI technologies through responsible AI governance.

Benefits of AI Security

Investing in AI security provides numerous advantages, including:

- Enhanced Cybersecurity Posture: Secure AI deployment mitigates security risks and strengthens security operations by implementing real-time threat detection, automated incident response, and adaptive security frameworks to counteract emerging cyber threats.

- Protection of AI Investments: Prevents financial losses due to AI model corruption or data leaks by ensuring that AI models remain functional, accurate, and resistant to adversarial threats. This includes securing AI supply chains and implementing robust validation mechanisms for AI-generated outputs.

- Regulatory Compliance: Ensures adherence to global AI governance policies such as GDPR, HIPAA, and NIST AI security guidelines, reducing the risk of legal consequences, fines, and reputational damage. Compliance frameworks help organizations align AI security with ethical and legal standards.

- Resilience Against Cyber Threats: Reduces the likelihood of malware, unauthorized access, and security incidents by incorporating advanced AI-powered security analytics, encryption strategies, and access control policies to safeguard AI applications and datasets from cyberattacks.

- Optimized AI Performance: Secure AI tools function more reliably, reducing AI model drift and decay. Continuous security monitoring, regular updates, and adaptive learning mechanisms help maintain model accuracy and ensure that AI systems operate effectively over time.

- Strengthened Data Security: AI security protocols ensure that sensitive data remains protected throughout its lifecycle, from collection to storage, processing, and deployment. This minimizes risks associated with data breaches, data poisoning, and insider threats.

- Improved AI Governance and Trust: Organizations that implement strong AI security measures can enhance stakeholder confidence in AI-powered applications. Transparent AI governance frameworks promote responsible AI use, reducing biases, errors, and unintended security risks.

- Support for Critical Sectors: AI security is vital in industries such as healthcare, finance, and national security, where data integrity and model accuracy are paramount. Ensuring that AI systems remain secure enables them to function as reliable decision-making tools in high-stakes environments.

AI Security Risks

AI security risks span multiple categories, each posing unique challenges.

Data Security Risks

AI systems process massive datasets, often containing sensitive information. If these datasets are compromised through data breaches or data poisoning, the integrity and reliability of AI models are threatened.

- Data Poisoning Attacks: Malicious actors inject manipulated or biased data into training datasets, causing AI models to make incorrect predictions. These attacks can lead to security vulnerabilities in fraud detection, healthcare diagnostics, and financial decision-making systems.

- Unauthorized Access: Without proper access control mechanisms, attackers can exfiltrate sensitive data or tamper with AI models, leading to compromised security and privacy concerns.

- Lack of Encryption: Insecure data storage and transmission increase the risk of cyber threats, necessitating encryption techniques and zero-trust architectures to safeguard AI-powered applications.

AI Model Security Risks

AI model security involves protecting AI-powered applications from unauthorized modifications, model extraction attacks, and adversarial inputs that can alter AI outputs.

- Model Extraction Attacks: Attackers attempt to reverse-engineer AI models by probing them with numerous queries, ultimately reconstructing the model’s behavior and exposing sensitive intellectual property.

- Model Inference Manipulation: Cybercriminals manipulate the outputs of AI models by altering input parameters, leading to misclassification, biased decision-making, or security incidents in automated systems.

- Model Backdooring: Threat actors inject hidden triggers into AI models that remain dormant until activated under specific conditions, leading to malicious outputs that bypass traditional security controls.

Adversarial Attacks

Adversarial attacks manipulate AI models by feeding deceptive inputs, causing incorrect decision-making. These attacks can compromise AI capabilities in industries such as healthcare, finance, and autonomous systems.

- Evasion Attacks: Attackers subtly alter input data, such as changing pixels in an image or modifying text, tricking AI models into misclassifying the input while remaining undetected by humans.

- Poisoning Attacks: Attackers tamper with training data to skew AI model behavior, potentially leading to security vulnerabilities in generative AI and deep learning models.

- Trojan Attacks: Threat actors embed hidden adversarial triggers in AI models, which activate only under specific conditions, leading to dangerous outcomes in critical security systems.

Ethical and Safe Deployment

Responsible AI development involves ensuring fairness, transparency, and security best practices. AI governance frameworks must address biases in training data, secure AI development lifecycles, and establish mitigation strategies for unethical use cases.

- Bias and Fairness Issues: AI models trained on biased datasets can produce discriminatory outputs, leading to ethical concerns in hiring processes, credit scoring, and law enforcement applications.

- Lack of Explainability: Black-box AI models pose challenges in accountability and decision-making transparency, making it difficult to identify and mitigate security threats effectively.

- Misuse of AI Capabilities: The deployment of AI technologies for malicious purposes, such as deepfake generation or automated hacking, necessitates strict regulation and monitoring mechanisms.

Regulatory Compliance

Global regulations, including NIST AI security guidelines and GDPR, require organizations to implement strong AI security measures. Non-compliance can lead to hefty penalties and reputational damage.

- GDPR and Data Privacy Regulations: Organizations must adhere to strict data protection laws to prevent legal liabilities and security vulnerabilities in AI-powered applications.

- AI Governance Frameworks: Compliance with industry standards such as ISO/IEC 27001 and NIST AI RMF ensures responsible AI risk management and cybersecurity resilience.

- Cross-Border AI Regulations: With AI technologies being adopted globally, organizations must navigate varying jurisdictional policies to ensure lawful deployment and secure AI workflows.

Input Manipulation Attacks

Malicious actors can manipulate AI models by feeding misleading inputs, leading to compromised decision-making. This risk is particularly prevalent in generative AI and large language models (LLMs), requiring enhanced validation processes.

- Prompt Injection Attacks: Attackers craft deceptive queries to influence the responses of LLMs, leading to misinformation and security threats in automated decision-making systems.

- Data Tampering: AI-powered systems that rely on real-time data inputs can be manipulated to produce false outputs, impacting sectors such as cybersecurity, healthcare, and financial markets.

- Automation Exploits: Automated AI tools can be misled into performing unintended actions, such as bypassing security controls or generating harmful content, necessitating strict validation protocols.

AI Model Drift and Decay

AI models degrade over time due to changes in data distributions and evolving cyber threats. Continuous monitoring and retraining are essential to optimize AI performance and maintain security posture.

- Concept Drift: AI models become less accurate as real-world conditions change, impacting fraud detection, predictive analytics, and security monitoring applications.

- Data Distribution Shifts: Training data that does not reflect current environments can lead to erroneous predictions, requiring frequent model updates to maintain accuracy.

- Security Patch Gaps: Outdated AI models are more vulnerable to adversarial attacks, necessitating ongoing risk assessment and proactive security updates.

What Role Does Visibility and Control Play in AI Security Posture Management?

Visibility and control are fundamental to securing AI workloads. Security teams must have:

- Real-time Monitoring: AI security operations must track anomalies and detect emerging threats.

- Access Control Mechanisms: Restricting unauthorized access to AI applications and datasets minimizes risk.

- Incident Response Strategies: Effective remediation plans ensure swift action against security incidents.

- AI Governance Policies: Organizations must establish security best practices and enforce compliance across stakeholders.

How Does Artificial Intelligence and Machine Learning Contribute to Security Blind Spots?

While AI and machine learning enhance cybersecurity capabilities, they also introduce security blind spots due to:

- Complexity of AI Models: The more advanced an AI model, the harder it is to interpret and secure.

- Lack of Transparency: AI tools, especially black-box models, lack explainability, making threat detection challenging.

- Automated Decision-making Risks: AI-powered security workflows may inadvertently ignore new cyber threats if not continuously updated.

- Dependence on Open-Source AI Technologies: Vulnerabilities in open-source AI frameworks can be exploited if not properly secured.

What Are the Biggest Challenges in Ensuring AI Security?

Securing AI systems involves multiple challenges, including:

- Protecting AI Supply Chains: Ensuring security across the entire AI development lifecycle, from dataset collection to model deployment.

- Managing Large Attack Surfaces: AI applications interact with various networks, increasing exposure to cyber threats.

- Balancing Security with Performance: Overly restrictive security measures can hinder AI capabilities and real-time functionality.

- Ensuring AI Governance Compliance: Aligning AI development with regulatory frameworks remains a complex task.

- Adapting to Evolving Cyber Threats: AI security threats are constantly changing, requiring proactive risk management strategies.

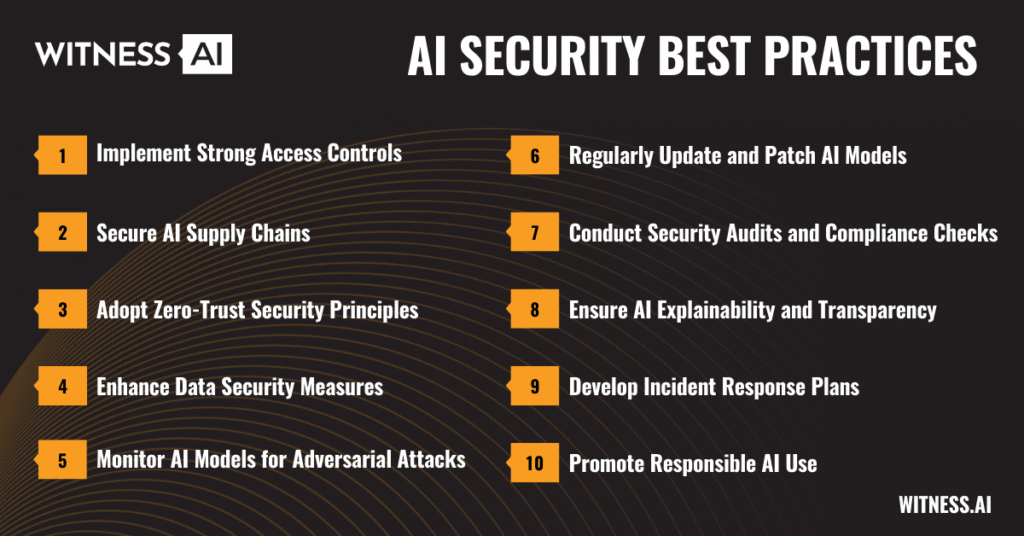

Best Practices for AI Security

Implementing AI security best practices helps organizations mitigate risks and ensure the safe deployment of AI systems. The following strategies are essential for maintaining a robust AI security posture:

- Implement Strong Access Controls: Restrict unauthorized access to AI systems and datasets using role-based access control (RBAC) and multi-factor authentication (MFA).

- Secure AI Supply Chains: Ensure that AI models, datasets, and software components originate from trusted sources and are free from vulnerabilities.

- Adopt Zero-Trust Security Principles: Continuously verify users, devices, and data interactions before granting access to AI-powered applications.

- Enhance Data Security Measures: Encrypt sensitive data, apply differential privacy techniques, and implement robust data governance policies to prevent unauthorized access and leaks.

- Monitor AI Models for Adversarial Attacks: Use adversarial training and anomaly detection techniques to identify and mitigate potential attack vectors.

- Regularly Update and Patch AI Models: Address security vulnerabilities by continuously monitoring AI model performance and applying necessary updates.

- Conduct Security Audits and Compliance Checks: Ensure AI governance policies align with regulatory frameworks, such as GDPR, HIPAA, and NIST AI security guidelines.

- Ensure AI Explainability and Transparency: Use interpretable AI techniques to enhance trust, accountability, and bias mitigation in AI decision-making.

- Develop Incident Response Plans: Establish a proactive security incident response strategy to detect, contain, and remediate AI security threats effectively.

- Promote Responsible AI Use: Foster ethical AI development by incorporating fairness, transparency, and accountability principles into AI design and deployment.

Conclusion

AI security is a critical aspect of modern cybersecurity, ensuring that AI-powered systems remain resilient against security vulnerabilities and cyberattacks. By implementing robust security best practices, organizations can safeguard sensitive data, optimize AI capabilities, and maintain compliance with global AI governance standards. As AI continues to evolve, proactive mitigation strategies and continuous monitoring will be essential to protecting the integrity of AI systems and their real-world applications.

About WitnessAI

WitnessAI enables safe and effective adoption of enterprise AI, through security and governance guardrails for public and private LLMs. The WitnessAI Secure AI Enablement Platform provides visibility of employee AI use, control of that use via AI-oriented policy, and protection of that use via data and topic security. Learn more at witness.ai.