Today, WitnessAI extends the confidence layer for enterprise AI to secure agents in two ways. First, WitnessAI governs agent activity the same way it governs employee AI usage. It monitors which agents are active, which MCP servers and tools they access, and what data they share. Second, WitnessAI extends AI application protection from models to agents, blocking attacks and malicious prompts before they reach the agent.

The shift is already underway. Employees are installing agentic plugins that connect to remote MCP servers, giving agents access to internal systems, external APIs, and third-party tools. Engineering teams are building and deploying agents that accept natural language inputs and automatically execute tasks through tool calls. In both cases, security teams lack visibility into which agents exist and which MCP servers and tools they access, and they lack the means to protect those agents from adversarial manipulation.

“AI workflows are maturing and starting to cross corporate and cloud LLMs, bots, and agents. We are the only AI security vendor that can secure every AI interaction, everywhere, with a unified solution. The alternative is trying to stitch together secure workflows using network proxies, firewalls, DLP products, and XDR agents. In short, the alternative is a complex mess.” — Rick Caccia, CEO, WitnessAI

The Agentic Attack Surface

Agents introduce risks that traditional security tools were not built to detect.

A developer installs a Claude Desktop extension that connects to an MCP server with code execution tools. An analyst enables a ChatGPT plugin that queries CRM databases using natural language. An engineering team deploys LangChain agents that process customer transactions without human review.

Each scenario creates pathways for prompt injection, data exfiltration, and unauthorized actions. Agents amplify this risk because they can exercise every permission granted to their human or machine identity, without the judgment that constrains human behavior. A single manipulated prompt can cascade through tool calls, API requests, and database queries before anyone intervenes. The result is a governance vacuum at precisely the moment enterprises are accelerating agent deployment.

Observe: Agent and Tool Discovery

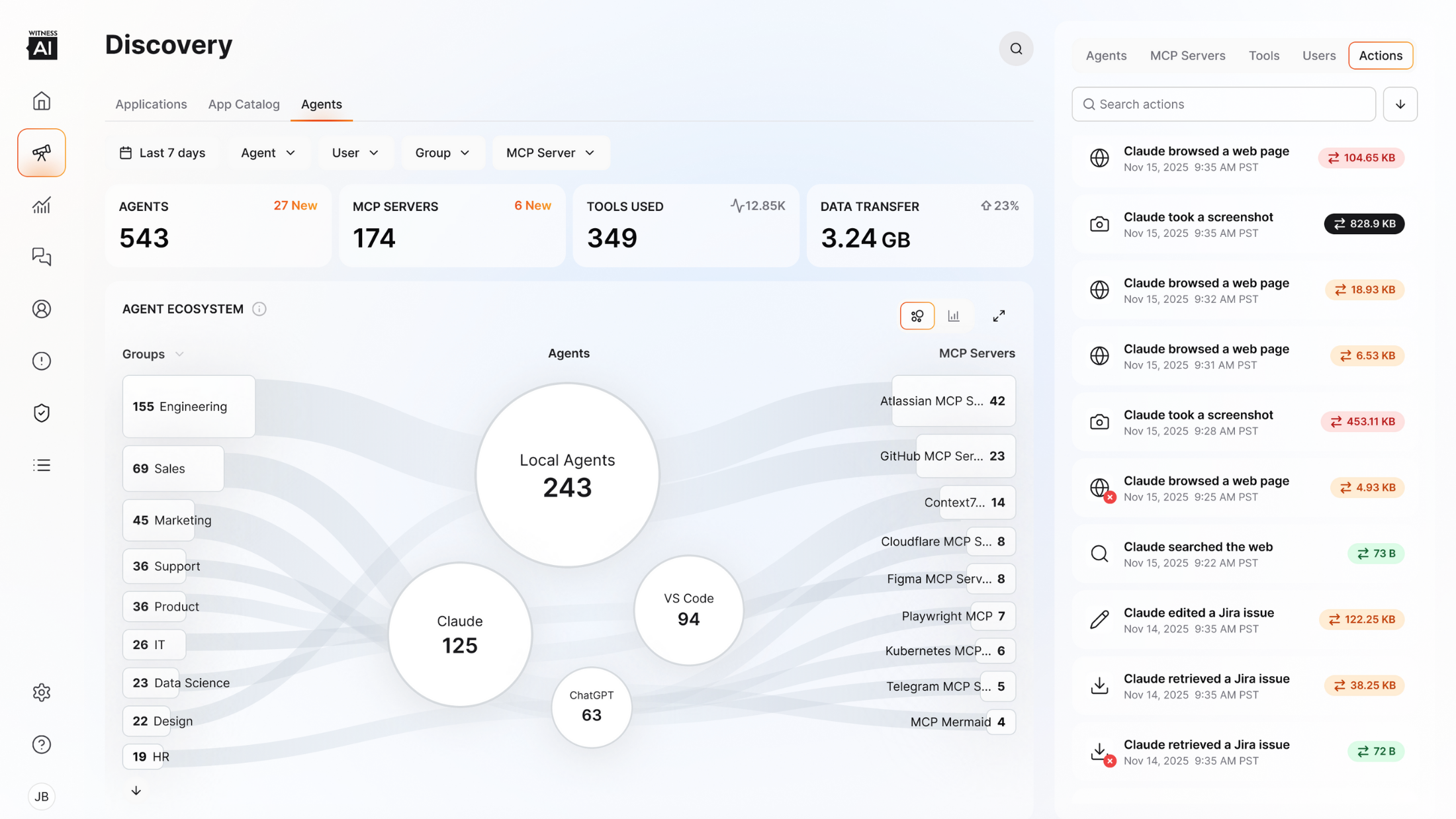

WitnessAI automatically discovers agentic activity across the highest-risk vectors in enterprise environments: Claude Desktop and Claude plugins, VS Code with AI extensions, ChatGPT with enabled plugins, and local agents running on developer machines (including LangChain, LlamaIndex, CrewAI, AutoGPT, and custom implementations).

The platform distinguishes standard chat sessions from agentic sessions by analyzing tool advertisements in traffic payloads. When a client requests access to external MCP servers, the platform classifies the session as agentic. This classification lets security teams permit approved AI interactions while still keeping visibility into connections to unverified tools.
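The classification rule described above can be illustrated with a minimal sketch. It is not WitnessAI's implementation: the payload shape and the field names `tools` and `mcp_servers` are assumptions chosen for illustration, and a real classifier would inspect actual client traffic formats.

```python
from typing import Any


def is_agentic_session(payload: dict[str, Any]) -> bool:
    """Return True when a request payload advertises tools or MCP servers.

    The "tools" and "mcp_servers" keys are hypothetical field names.
    """
    tools = payload.get("tools") or []
    mcp_servers = payload.get("mcp_servers") or []
    return bool(tools) or bool(mcp_servers)


# A plain chat request: no tools advertised.
chat_request = {
    "model": "example-model",
    "messages": [{"role": "user", "content": "Summarize this memo."}],
}

# The same request, now advertising a code execution tool.
agentic_request = {
    **chat_request,
    "tools": [{"name": "run_code", "description": "Execute a code snippet"}],
}
```

In this sketch, `is_agentic_session(chat_request)` is false and `is_agentic_session(agentic_request)` is true, so a policy layer could allow the first while logging or reviewing the second.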

Beyond agent discovery, the platform maps the MCP server ecosystem across your environment. Security teams can see which public and private servers are being accessed. Each server is identified by a unique fingerprint, and its record is enriched with an intent classification and a functional category (Software Development, Research, or Data Access). Continuous observability tracks MCP tool calls, exposed APIs, and configuration parameters over time.
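One way to picture a server fingerprint is as a stable hash over a server's address and the tools it exposes. The sketch below is an assumption for illustration only. The announcement does not say how fingerprints are computed, and the function name `fingerprint_server` and its inputs are hypothetical.

```python
import hashlib
import json


def fingerprint_server(url: str, tool_names: list[str]) -> str:
    """Derive a short, order-independent fingerprint for an MCP server.

    Hypothetical scheme: hash the normalized URL plus the sorted tool names.
    """
    canonical = json.dumps(
        {"url": url.strip().rstrip("/"), "tools": sorted(tool_names)},
        sort_keys=True,
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


fp_a = fingerprint_server("https://mcp.example.com/", ["query_crm", "run_code"])
fp_b = fingerprint_server("https://mcp.example.com", ["run_code", "query_crm"])
fp_c = fingerprint_server("https://mcp.example.com", ["run_code"])
```

Here `fp_a` and `fp_b` match because tool order and the trailing slash are normalized away, while `fp_c` differs because the exposed tool set changed. A change like that is the kind of drift continuous observability would want to surface.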

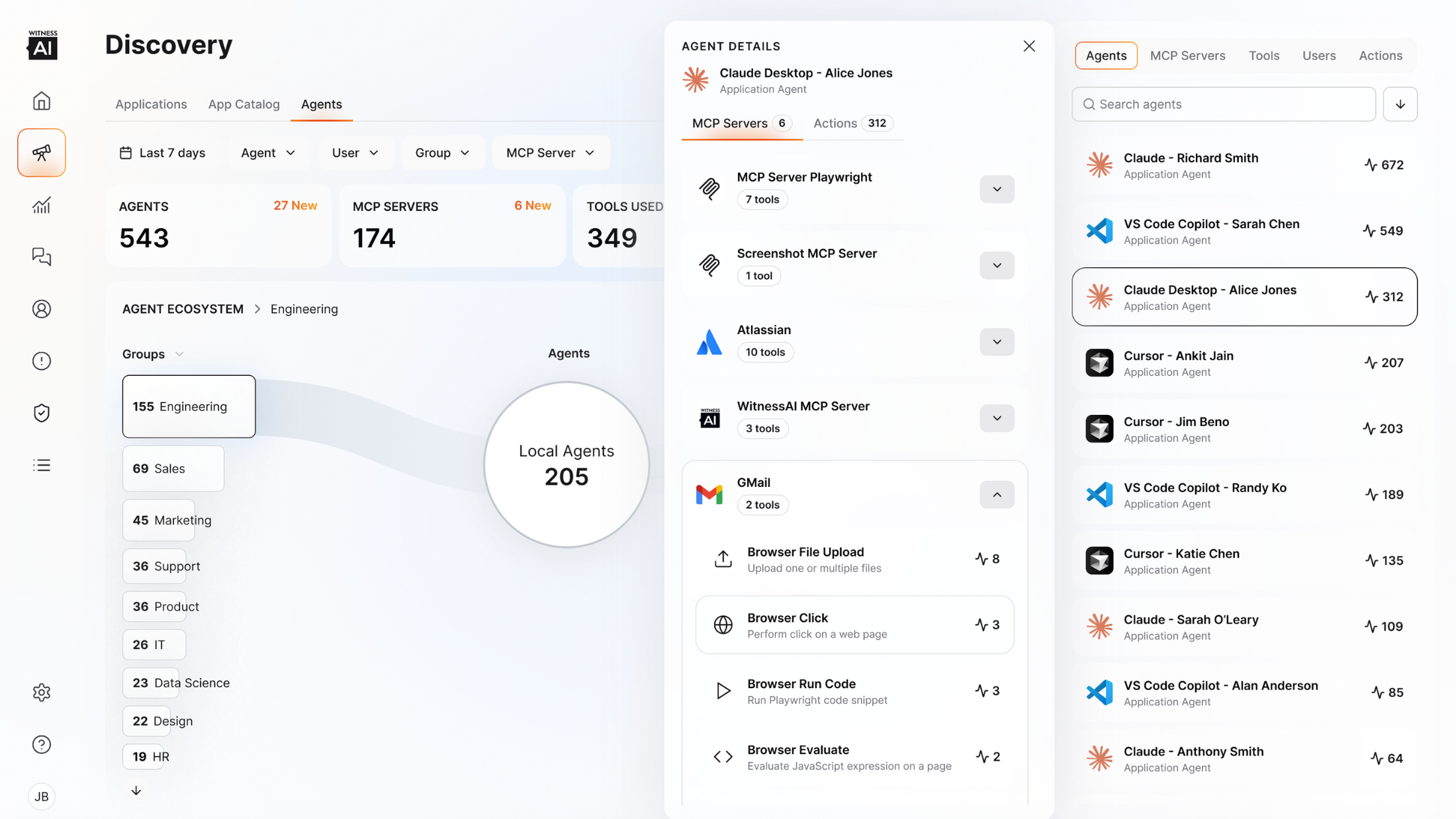

Control: Unified Human and Agentic Attribution

When something goes wrong, security teams need to know who is accountable. With agents acting on behalf of humans—and triggering other agents—that chain of responsibility can break quickly. WitnessAI can see what an agent is doing on an employee’s or customer’s behalf. It connects human and agent identities and captures decision-making context at runtime, including agent state and execution commands. This provides explainability for agent actions. When agents communicate with other agents, those interactions are attributed to the human who triggered the original action. This ensures policy enforcement reflects both who initiated the workflow and what the agent attempted to do.
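The attribution idea can be sketched as a walk up the chain of actions until a human is found. The `Action` record and its fields are hypothetical, and the sketch assumes each action records what triggered it. It is not a description of the product's internal data model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Action:
    actor: str                               # human or agent identifier
    actor_type: str                          # "human" or "agent"
    command: str                             # what the actor attempted to do
    triggered_by: Optional["Action"] = None  # the action that caused this one


def originating_human(action: Action) -> Optional[str]:
    """Follow the trigger chain back to the first human actor, if any."""
    current: Optional[Action] = action
    while current is not None:
        if current.actor_type == "human":
            return current.actor
        current = current.triggered_by
    return None


request = Action("alice", "human", "refund order 1234")
planner = Action("support-agent", "agent", "plan refund", triggered_by=request)
payment = Action("billing-agent", "agent", "issue refund", triggered_by=planner)
```

In this example, `originating_human(payment)` resolves to `"alice"` even though the billing agent was invoked by another agent, so a policy decision can consider both the initiator and the attempted command.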

This gives security teams observability across both workforces: human and agentic. They can apply one policy framework to employees and agents and capture both in a single audit log. They can manage the entire AI ecosystem without maintaining separate dashboards or reconciling policy drift between systems.

Protect: Bidirectional Runtime Defense

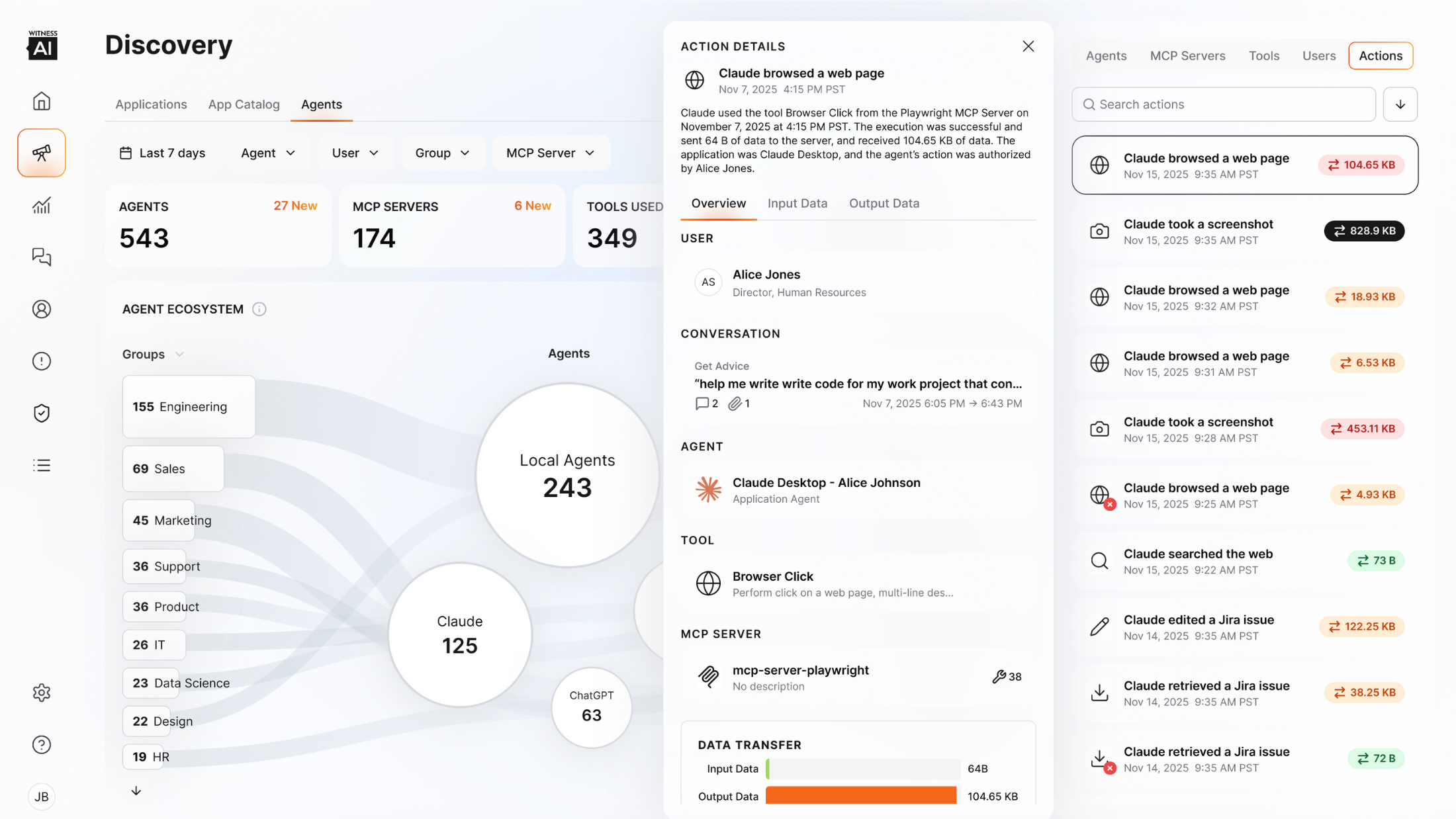

Pre-execution protection scans prompts before agents process them to detect and block prompt injection, jailbreaking, and role-playing attacks. Sensitive data—including PII, credentials, and financial information—can be tokenized in real time. This ensures the agent never sees sensitive values, and neither do downstream tools or databases it accesses.
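As a rough illustration of real-time tokenization, the sketch below swaps sensitive values for opaque tokens before a prompt reaches an agent. The regular expressions, the token format, the in-memory `vault`, and the `detokenize` helper are all assumptions for illustration. A production system would use far more robust detection and a secured token store.

```python
import re
import uuid

# Deliberately simple patterns, for illustration only.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def tokenize(text: str, vault: dict[str, str]) -> str:
    """Replace sensitive values with tokens and record the mapping in vault."""

    def swap(kind: str):
        def repl(match: re.Match) -> str:
            token = f"<{kind}_{uuid.uuid4().hex[:8]}>"
            vault[token] = match.group(0)
            return token

        return repl

    text = EMAIL.sub(swap("EMAIL"), text)
    text = SSN.sub(swap("SSN"), text)
    return text


def detokenize(text: str, vault: dict[str, str]) -> str:
    """Restore original values for an authorized consumer (hypothetical step)."""
    for token, value in vault.items():
        text = text.replace(token, value)
    return text


vault: dict[str, str] = {}
original = "Contact jane@example.com, SSN 123-45-6789"
masked = tokenize(original, vault)
```

After this runs, `masked` contains only tokens in place of the email address and the number, so neither the agent nor any downstream tool receives the raw values.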

Response protection scans agent output before delivery to users to filter harmful content and enforce policy compliance. By securing both directions of agent communication, the platform closes the gap that input-only or output-only solutions leave exposed. Engineering teams can integrate this protection into their agent orchestration layer without deploying endpoint clients or modifying application architecture.
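At the orchestration layer, bidirectional protection can be pictured as a wrapper that checks the prompt before the agent runs and checks the output before it is returned. This is a sketch under stated assumptions: `guarded`, `PolicyViolation`, and both scanner callbacks are hypothetical names, and the substring checks below are placeholders standing in for whatever scanning service is actually used.

```python
from typing import Callable


class PolicyViolation(Exception):
    """Raised when a prompt or response fails a policy check."""


def guarded(
    agent: Callable[[str], str],
    scan_prompt: Callable[[str], bool],
    scan_response: Callable[[str], bool],
) -> Callable[[str], str]:
    """Wrap an agent so both its input and its output are scanned."""

    def run(prompt: str) -> str:
        if not scan_prompt(prompt):
            raise PolicyViolation("prompt blocked before execution")
        response = agent(prompt)
        if not scan_response(response):
            raise PolicyViolation("response blocked before delivery")
        return response

    return run


calls: list[str] = []


def echo_agent(prompt: str) -> str:
    calls.append(prompt)  # record that the agent actually ran
    return f"Handled: {prompt}"


# Placeholder scanners; real checks would not be simple substring tests.
safe_agent = guarded(
    echo_agent,
    scan_prompt=lambda p: "ignore previous instructions" not in p.lower(),
    scan_response=lambda r: "password" not in r.lower(),
)
```

Because the prompt check happens first, a blocked prompt never reaches `echo_agent`, and a response that fails its check is never returned to the caller.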

Unlike security tools that rely on keyword matching, the platform provides policy control based on behavioral intent. It understands the meaning and intention of prompts—not just the words. This enables smarter, more accurate policy enforcement to block advanced threats such as prompt injection or multi-turn attacks.

Built for This Moment

WitnessAI was designed as a multi-generational AI security platform from the start. It’s not a collection of point solutions assembled in response to each new threat. It’s a single architecture designed to observe and protect AI at every stage of its evolution, including stages that have not yet arrived. These capabilities protect AI apps across generations: those built on foundation model APIs, custom LLMs, and AI agents.

Agentic security is the realization of that design. Enterprise AI agents are here. Our platform is ready.

WitnessAI Agentic Security is available January 2026.

Register for the webinar to see Agentic Security in action, or book a demo to speak with an AI security expert.