What Is Human in the Loop AI?

Human-in-the-loop (HITL) AI is a design pattern in artificial intelligence systems where human intelligence is strategically embedded into various stages of the machine learning lifecycle. This includes training, validation, and real-time operation, allowing human users to supervise, fine-tune, and intervene in AI workflows as needed.

Rather than fully delegating control to algorithms, HITL introduces human oversight to guide, review, and correct AI models. This approach is essential where machine learning models may lack context or encounter ambiguous inputs, or where errors carry serious consequences.

HITL vs Full Automation

In fully automated systems, AI models process inputs and generate outputs without human intervention. In human-in-the-loop AI, by contrast, human users actively participate in one or more of the following:

- Data annotation: Supplying labeled data to improve model performance.

- Model training and tuning: Using human feedback to correct errors and bias.

- Inference oversight: Reviewing and validating model outputs in real time.

- Edge case handling: Detecting and responding to scenarios that fall outside the distribution the model was trained on.

The HITL model creates a feedback loop in which human corrections continuously improve machine outputs, strengthening both performance and accountability.

The Importance of Human in the Loop AI

As AI capabilities become more advanced—especially with the emergence of LLMs and generative AI (GenAI)—the need for responsible, transparent, and human-guided AI has never been greater.

Why Human Oversight Matters

Even the most advanced AI systems struggle with:

- Low-resource domains lacking sufficient training data

- Context-sensitive decisions that require cultural or domain-specific knowledge

- Moral, legal, or ethical judgments that exceed algorithmic scope

- Novel edge cases that fall outside a model’s learned behavior

Human-in-the-loop machine learning bridges these gaps. It enables AI systems to function not as autonomous black boxes, but as collaborative tools governed by human knowledge, values, and control.

HITL in Compliance and Ethics

Human-in-the-loop systems are essential for organizations navigating both the regulatory landscape and the ethical challenges of deploying AI at scale. By embedding human oversight into critical decision points, enterprises can reduce risk, maintain accountability, and ensure their AI systems operate within legal and societal boundaries.

HITL for Regulatory Compliance

Regulations and regulatory bodies around the world, including the EU AI Act, GDPR, HIPAA, FINRA rules, U.S. Executive Order 14110 (rescinded in January 2025), and sector-specific guidelines, have increasingly required organizations to implement safeguards for automated systems. HITL provides a direct mechanism for meeting these obligations by reinforcing human control and documentation throughout the AI lifecycle.

Key compliance benefits of HITL include:

- Automated decision review: Many regulations restrict or condition the use of fully autonomous decision-making, particularly when decisions affect individual rights, financial status, or health. HITL ensures that human accountability is maintained, with real people validating or approving critical outcomes.

- Explainability and transparency: When regulators demand transparency in algorithmic decisions, HITL supports model interpretability by enabling humans to assess and explain how outputs were generated—especially important in black-box systems like deep learning or generative models.

- Auditability and traceability: Human-in-the-loop workflows produce records of decisions, interventions, and model outputs whenever each review step is logged. These records serve as audit trails that compliance teams or regulatory bodies can examine during investigations or routine assessments.

- Risk classification alignment: Under frameworks like the EU AI Act, human oversight is an explicit requirement for high-risk AI systems. HITL gives organizations a concrete way to meet that requirement, which may reduce the compliance burden and expand the viability of AI use in sensitive contexts.
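The audit trail mentioned in the list above can be sketched as an append-only log of review records. This is a minimal illustration, not a compliance-grade design: the `ReviewRecord` fields, the three decision values, and the `record_review` and `export_jsonl` helpers are all assumptions made for the example.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone
from typing import Optional

ALLOWED_DECISIONS = {"approved", "corrected", "rejected"}


@dataclass(frozen=True)
class ReviewRecord:
    """One human decision about one model output (field names are illustrative)."""
    item_id: str
    model_output: str
    model_confidence: float
    reviewer_id: str
    decision: str                  # one of ALLOWED_DECISIONS
    final_output: Optional[str]    # None when the output was rejected
    reviewed_at: str               # ISO 8601 timestamp in UTC


def record_review(log, item_id, model_output, model_confidence,
                  reviewer_id, decision, final_output=None):
    """Append a frozen review record to the log and return it."""
    if decision not in ALLOWED_DECISIONS:
        raise ValueError(f"unknown decision: {decision}")
    if decision == "approved":
        final_output = model_output        # approval keeps the model output
    elif decision == "corrected" and final_output is None:
        raise ValueError("a correction must supply final_output")
    elif decision == "rejected":
        final_output = None                # nothing is released
    record = ReviewRecord(
        item_id=item_id,
        model_output=model_output,
        model_confidence=model_confidence,
        reviewer_id=reviewer_id,
        decision=decision,
        final_output=final_output,
        reviewed_at=datetime.now(timezone.utc).isoformat(),
    )
    log.append(record)
    return record


def export_jsonl(log):
    """Serialize the log as JSON Lines, one record per line."""
    return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in log)


# Hypothetical usage: two loan decisions reviewed by the same person.
audit_log = []
record_review(audit_log, "loan-001", "deny", 0.62, "reviewer-7", "corrected", "approve")
record_review(audit_log, "loan-002", "approve", 0.97, "reviewer-7", "approved")
print(export_jsonl(audit_log))
```

Keeping the model output, the human decision, and the final output in one record lets a reviewer of the log see what the model proposed and what a person changed.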

Organizations that design for compliance by default and embed HITL mechanisms are better positioned to adapt to rapidly evolving AI regulations and to demonstrate good-faith efforts toward safe deployment.

HITL for Ethical AI Practices

Beyond legal compliance, HITL plays a vital role in supporting ethical AI development, ensuring that systems are fair, accountable, and aligned with human values.

Key ethical advantages of HITL include:

- Bias detection and mitigation: Algorithms trained on biased datasets can perpetuate discrimination in areas like hiring, lending, or criminal justice. Human reviewers can detect subtle patterns of bias and correct them before outputs reach production environments.

- Fairness and inclusion: HITL enables oversight by diverse human stakeholders, which improves fairness and reduces cultural, gender, or socio-economic blind spots. This is particularly important in global deployments of AI systems.

- Moral and social judgment: AI systems lack the capacity for empathy, nuance, or contextual ethics. In contrast, human reviewers can apply moral reasoning to complex or sensitive decisions, such as evaluating speech content or making life-impacting health assessments.

- User trust and social license: Public trust in AI systems often depends on perceptions of human involvement. Knowing that a person is still “in the loop” enhances transparency and confidence, especially for applications that affect people’s rights, identities, or futures.

- Responsibility and harm prevention: When AI systems are used in environments where errors could cause real-world harm, HITL offers a mechanism for real-time human intervention, helping to prevent or contain damage.

Embedding human intelligence into AI systems is a core tenet of responsible AI—and HITL is one of the most practical and effective methods for achieving it.

What Is the Difference Between Human in the Loop and AI in the Loop?

Although they sound similar, human-in-the-loop and AI-in-the-loop differ fundamentally in terms of control hierarchy and workflow design.

| Concept | Human-in-the-Loop (HITL) | AI-in-the-Loop |
| --- | --- | --- |
| Primary decision-maker | Human | AI |
| AI’s role | Support and assist | Lead with optional oversight |
| Human role | Validate and correct AI outputs | Monitor or occasionally override AI |
| Example | Doctor approves AI diagnosis | AI system filters resumes with optional HR review |

In HITL systems, humans retain final control, ensuring that machine learning decisions are always subject to human review, especially in real-world, high-risk scenarios.

What Is the Difference Between Human in the Loop and Human Over the Loop?

Another common distinction is between human-in-the-loop (HITL) and human-over-the-loop (HOTL) systems. Both involve human participation but differ in timing and depth of involvement.

- Human-in-the-loop: A human actively takes part in the AI process (e.g., correcting or approving outputs).

- Human-over-the-loop: A human monitors passively and steps in only if thresholds are exceeded or failures are detected.

| Feature | Human-in-the-Loop | Human-Over-the-Loop |
| --- | --- | --- |
| Timing | Synchronous / real-time | Asynchronous / periodic |
| Involvement | Direct input at each decision point | Intervention only in exceptional cases |
| Use case | Model training, annotation, feedback loops | AI-powered surveillance, automated trading alerts |

HOTL is appropriate when human supervision is necessary but reviewing every decision is not practical at scale. In contrast, HITL is favored for safety-critical systems or when model uncertainty is high.
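The difference in control flow can be sketched in a few lines. This is an illustrative toy, not a reference design: `run_hitl`, `run_hotl`, the doubling "model", the capping "human", and the monitor threshold are all hypothetical stand-ins.

```python
def run_hitl(items, model, ask_human):
    """Human-in-the-loop: every output waits for a human verdict before release."""
    released = []
    for item in items:
        output = model(item)
        released.append(ask_human(item, output))  # human approves or replaces
    return released


def run_hotl(items, model, is_anomalous, ask_human):
    """Human-over-the-loop: outputs are released automatically, and a human
    is consulted only when the monitor flags an exception."""
    released, escalated = [], []
    for item in items:
        output = model(item)
        if is_anomalous(item, output):
            output = ask_human(item, output)
            escalated.append(item)
        released.append(output)
    return released, escalated


# Toy stand-ins so the two flows can be compared side by side.
human_calls = {"n": 0}

def toy_model(x):
    return x * 2

def toy_human(item, output):
    human_calls["n"] += 1
    return min(output, 10)      # the "reviewer" caps any output at 10

def toy_monitor(item, output):
    return output > 100         # escalate only extreme outputs

hitl_result = run_hitl([1, 4, 60], toy_model, toy_human)
hitl_calls = human_calls["n"]
print(hitl_result, hitl_calls)  # [2, 8, 10] 3

human_calls["n"] = 0
hotl_result = run_hotl([1, 4, 60], toy_model, toy_monitor, toy_human)
hotl_calls = human_calls["n"]
print(hotl_result, hotl_calls)  # ([2, 8, 10], [60]) 1
```

In this toy run both flows release the same outputs, but the HITL flow consults the human three times and the HOTL flow once, which is the scale trade-off described above.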

Benefits of Human in the Loop AI

1. Enhancing Decision-Making Processes

AI models often process vast amounts of data faster than humans, but their decision-making lacks:

- Contextual understanding

- Moral or ethical reasoning

- Legal accountability

HITL systems enable hybrid intelligence, where algorithms process data efficiently, and humans interpret nuanced meaning, ensuring better-informed, more reliable decisions.

For example:

- In healthcare, an AI model can identify patterns in radiology scans, but a radiologist provides final approval.

- In finance, AI flags potential fraud, but a compliance officer evaluates if escalation is required.

This collaborative approach safeguards against overreliance on automation in high-stakes environments.

2. Improving Accuracy Through Feedback Loops

Human feedback plays a crucial role in:

- Correcting model errors

- Providing edge-case annotations

- Optimizing reinforcement learning policies

When data scientists apply HITL techniques—especially in active learning or semi-supervised learning contexts—they often:

- Prioritize samples that the model is most uncertain about

- Use human-in-the-loop validation to improve prediction confidence

- Fine-tune algorithms with targeted examples from real-world applications

This leads to higher-quality training datasets, fewer false positives, and more generalizable models.
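Prioritizing the samples a model is least sure about is commonly called uncertainty sampling. The sketch below ranks samples by the entropy of their predicted class probabilities, which is one common uncertainty measure among several; the function names and the sample data are invented for illustration.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions, budget):
    """Return the ids of the `budget` samples with the highest predictive entropy."""
    ranked = sorted(predictions.items(),
                    key=lambda kv: entropy(kv[1]),
                    reverse=True)
    return [sample_id for sample_id, _ in ranked[:budget]]


# Hypothetical class probabilities from a three-class image model.
predictions = {
    "img-1": [0.98, 0.01, 0.01],   # confident
    "img-2": [0.40, 0.35, 0.25],   # very uncertain
    "img-3": [0.55, 0.44, 0.01],   # torn between two classes
    "img-4": [0.90, 0.05, 0.05],   # fairly confident
}

to_label = select_for_labeling(predictions, budget=2)
print(to_label)  # ['img-2', 'img-3']
```

The two selected samples would go to human annotators first, so a fixed labeling budget is spent where the model stands to learn the most.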

Examples of Human in the Loop AI

HITL is used across industries and applications where precision, accountability, and real-time validation are critical.

1. Medical Diagnosis and Imaging

- AI assists in identifying anomalies in X-rays or MRIs.

- Physicians verify AI outputs, ensuring accurate clinical interpretations.

- Reduces diagnostic errors and improves treatment planning.

2. Generative AI Editing and Moderation

- LLMs (like GPT) can generate content but may introduce factual or ethical issues.

- Human reviewers validate or edit outputs before publication.

- Ensures alignment with brand tone, compliance, and factual accuracy.

3. Autonomous Vehicles

- AI systems manage navigation, object detection, and environmental awareness.

- In testing phases, human safety drivers intervene when AI fails.

- Hybrid operation reduces accidents during model development.

4. Financial Fraud Detection

- Machine learning detects transaction anomalies.

- Compliance officers review alerts before customer accounts are flagged.

- Prevents false accusations and maintains customer trust.

5. Customer Support Bots

- AI responds to routine queries.

- Human agents handle escalations or emotionally sensitive issues.

- This ensures a balanced user experience between speed and empathy.

6. Data Labeling and Annotation

- Human annotators label datasets for computer vision, NLP, or speech recognition.

- Labeled data improves supervised learning model accuracy.

- Common in industries like e-commerce, autonomous systems, and security surveillance.

7. Content Moderation

- Social platforms use AI to scan for policy-violating content.

- Human moderators review flagged items to confirm or dismiss.

- Balances free expression with safety and legal requirements.

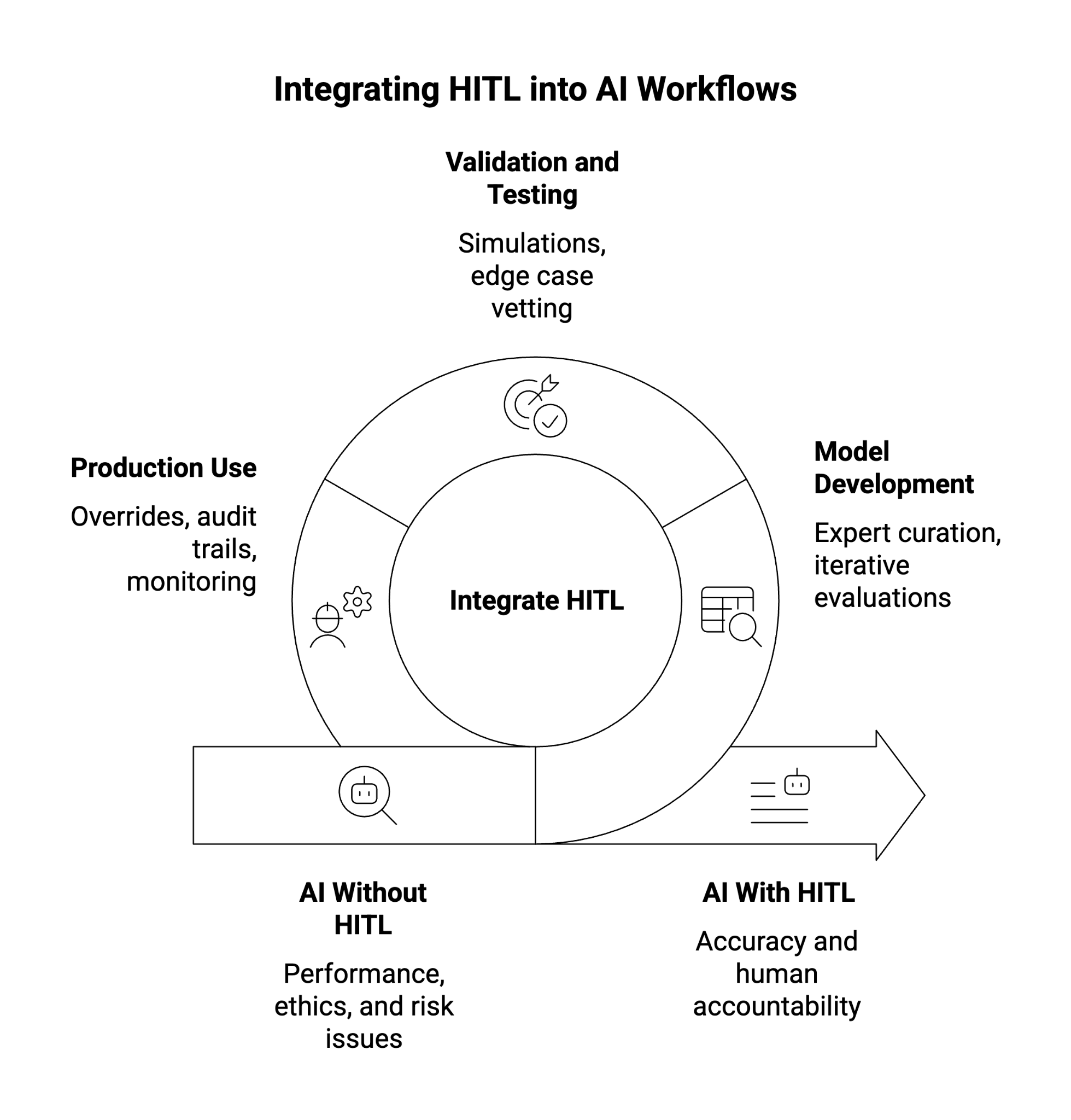

Integrating HITL into Enterprise AI Workflows

For companies building or deploying AI solutions, incorporating HITL into the AI lifecycle offers a robust way to manage performance, ethics, and risk. Here’s how to integrate HITL effectively:

1. During Model Development

- Involve domain experts in curating training data.

- Conduct iterative model evaluations using human feedback.

2. During Validation and Testing

- Run human-in-the-loop simulations to detect failure modes.

- Use annotators or reviewers to vet edge cases and outliers.

3. During Production Use

- Enable manual overrides and human audit trails in production.

- Use dashboards for real-time monitoring and alerts that escalate to human agents.

By embedding HITL throughout the AI lifecycle, teams ensure not only algorithmic accuracy, but also human accountability.

Challenges of HITL and How to Overcome Them

While HITL adds value, it also introduces complexities:

1. Scalability

Deploying humans for every model decision doesn’t scale easily.

Solution: Use HITL selectively, reserving it for high-risk tasks, edge cases, or periods when model drift is detected.

2. Latency

Real-time human intervention can slow down system response.

Solution: Combine with confidence thresholds—only route low-confidence predictions to humans.
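A confidence-threshold router can be as small as the sketch below. The `Prediction` type, the `route` function, and the 0.85 threshold are assumptions for illustration; in practice the threshold would be tuned on held-out data, and raw model confidences may need calibration before they can be trusted this way.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float   # model's probability for `label`, in [0, 1]


def route(predictions, threshold=0.85):
    """Split predictions into auto-accepted ones and ones queued for human review."""
    auto_accepted, needs_review = [], []
    for p in predictions:
        if p.confidence >= threshold:
            auto_accepted.append(p)
        else:
            needs_review.append(p)
    return auto_accepted, needs_review


# Hypothetical batch of support-ticket classifications.
batch = [
    Prediction("t-1", "refund", 0.99),
    Prediction("t-2", "complaint", 0.60),
    Prediction("t-3", "refund", 0.85),
]

auto_accepted, needs_review = route(batch)
print([p.item_id for p in auto_accepted])  # ['t-1', 't-3']
print([p.item_id for p in needs_review])   # ['t-2']
```

Only the low-confidence item waits for a person, so the latency cost is paid on a fraction of the traffic rather than on every request.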

3. Consistency of Human Review

Different reviewers may apply different judgment criteria.

Solution: Standardize annotation guidelines and conduct reviewer training.
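One way to check whether guidelines and training are actually producing consistent reviews is to measure agreement between reviewers on the same items. The source does not prescribe a metric; the sketch below uses Cohen's kappa, a standard agreement statistic for two raters, and the labels are invented for illustration.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two reviewers who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both reviewers must label the same non-empty item list")
    n = len(labels_a)
    # Fraction of items on which the two reviewers agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each reviewer's label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0   # both reviewers used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical moderation labels from two reviewers on eight items.
reviewer_a = ["spam", "spam", "ok", "ok", "ok", "spam", "ok", "ok"]
reviewer_b = ["spam", "ok",   "ok", "ok", "ok", "spam", "ok", "spam"]

kappa = cohens_kappa(reviewer_a, reviewer_b)
print(round(kappa, 3))  # 0.467
```

A kappa near 1 indicates strong agreement and a value near 0 indicates agreement no better than chance, so a low score on a shared sample is a signal to revisit the guidelines or the training.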

4. Cost

Hiring human annotators or specialists increases operational expense.

Solution: Leverage outsourced labeling platforms or automated pre-screening to reduce workload.

The Future of Human-in-the-Loop AI

As AI systems grow in complexity, HITL will remain central to:

- Managing regulatory compliance in AI deployments

- Training domain-specific LLMs with accurate context

- Deploying responsible AI in consumer, enterprise, and governmental applications

Advanced AI platforms are already combining human review tools, prompt tuning interfaces, and real-time annotation UIs into their stacks—ensuring continuous feedback, risk management, and model evolution through human collaboration.

Conclusion

Human-in-the-loop AI is not just a safety net—it’s a strategic advantage. By embedding human expertise, feedback, and validation into the heart of machine learning workflows, organizations can:

- Increase model accuracy

- Strengthen trust and transparency

- Support ethical and compliant deployment

- Handle edge cases and real-world complexity

- Enable responsible innovation at scale

As the demand for GenAI, LLMs, and automated decision systems rises, human-in-the-loop machine learning will continue to be the gold standard for building resilient, accountable, and human-centric AI systems.

About WitnessAI

WitnessAI enables safe and effective adoption of enterprise AI through security and governance guardrails for public and private LLMs. The WitnessAI Secure AI Enablement Platform provides visibility into employee AI use, control of that use via AI-oriented policy, and protection of that use via data and topic security. Learn more at witness.ai.