What Is AI Risk Management?

AI risk management refers to the structured process of identifying, assessing, mitigating, and monitoring potential risks associated with the development and use of artificial intelligence (AI) systems. As AI technologies are integrated into critical decision-making processes across sectors like finance, healthcare, cybersecurity, and logistics, the need for effective risk management has become paramount.

AI risk management frameworks aim to protect organizations and stakeholders from unintended or harmful outcomes—ranging from algorithmic bias and data breaches to regulatory violations and operational failures. A robust AI risk management strategy is essential not only to ensure compliance with evolving legal mandates but also to build trust in the responsible use of AI.

What Is AI Risk?

AI risk encompasses the potential for harm, failure, or misuse arising from the use of AI systems. These risks stem from various factors, including:

- Bias in training data or algorithms

- Lack of transparency in decision-making

- Data privacy violations

- Security vulnerabilities and adversarial attacks

- Model drift or degradation over time

- Errors in real-time outputs due to poor validation or oversight

Some AI systems are classified as high-risk, especially under legislative frameworks such as the EU AI Act, which targets systems that may significantly impact safety, fundamental rights, or socio-economic well-being.

The risk landscape evolves rapidly as generative AI, machine learning, and automated decision-making technologies advance. Without proper safeguards, these tools can produce inaccurate, unethical, or even dangerous outcomes.

What Are the 4 Levels of AI Risk?

AI systems can be grouped into four risk levels based on potential impact, a tiering that mirrors the risk-based classification used in the EU AI Act. Understanding these levels helps organizations prioritize mitigation strategies and design controls proportional to risk severity.

- Minimal or Low Risk

  AI applications that pose little or no threat to safety or rights.

  Examples: Spam filters, product recommendations, or automated scheduling assistants.

  - Focus: Maintain transparency and reliability standards.

  - Goal: Optimize usability while preserving data integrity.

- Limited Risk

  Systems that interact with users but have limited potential for harm if misused or incorrect.

  Examples: Chatbots or customer service assistants.

  - Focus: Ensure clear user disclosures that AI is being used.

  - Goal: Manage expectations and prevent overreliance on automated outputs.

- High Risk

  Applications that influence critical decisions in domains like healthcare, employment, education, or law enforcement.

  Examples: Credit scoring systems, medical diagnosis tools, or recruitment algorithms.

  - Focus: Conduct ongoing risk assessments, data quality audits, and explainability reviews.

  - Goal: Mitigate bias, safeguard sensitive information, and ensure regulatory compliance.

- Unacceptable Risk

  AI systems that pose serious threats to human safety, rights, or democratic values.

  Examples: Social scoring systems or AI models used for surveillance and manipulation.

  - Focus: Typically prohibited under AI regulation frameworks such as the EU AI Act.

  - Goal: Eliminate deployment or restrict development entirely.

By mapping AI systems to these levels, organizations can automate parts of their risk evaluation process and ensure that AI security and compliance controls scale with risk severity rather than being applied uniformly.
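
As a rough illustration of that mapping, the four levels can be encoded as a lookup that returns the controls a system needs. This is a minimal sketch, not a regulatory classifier: the use-case labels, the control names, and the `classify` and `required_controls` helpers are all hypothetical, drawn loosely from the examples and focus areas listed above. Real classification under the EU AI Act depends on legal analysis of the specific system.

```python
from enum import Enum


class RiskTier(Enum):
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


# Hypothetical control sets, paraphrased from the focus areas for each level.
CONTROLS_BY_TIER = {
    RiskTier.MINIMAL: ["transparency and reliability standards"],
    RiskTier.LIMITED: ["user disclosure that AI is in use"],
    RiskTier.HIGH: [
        "ongoing risk assessment",
        "data quality audit",
        "explainability review",
    ],
    RiskTier.UNACCEPTABLE: ["do not deploy"],
}

# Hypothetical use-case labels, taken from the examples for each level.
TIER_BY_USE_CASE = {
    "spam_filter": RiskTier.MINIMAL,
    "product_recommendation": RiskTier.MINIMAL,
    "customer_service_chatbot": RiskTier.LIMITED,
    "credit_scoring": RiskTier.HIGH,
    "medical_diagnosis": RiskTier.HIGH,
    "recruitment": RiskTier.HIGH,
    "social_scoring": RiskTier.UNACCEPTABLE,
}


def classify(use_case: str) -> RiskTier:
    """Return the tier for a known use case.

    Unknown use cases default to HIGH (an assumption of this sketch) so
    that they trigger a review instead of passing with minimal controls.
    """
    return TIER_BY_USE_CASE.get(use_case, RiskTier.HIGH)


def required_controls(use_case: str) -> list[str]:
    """Look up the controls that apply to a use case's tier."""
    return CONTROLS_BY_TIER[classify(use_case)]


for name in ("spam_filter", "credit_scoring", "unlisted_tool"):
    print(name, classify(name).name, required_controls(name))
```

Defaulting unknown systems to the high-risk tier is a deliberately conservative choice; an organization could equally route them to a manual triage queue.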

AI Risk Management and AI Governance

AI governance establishes the policies, standards, and oversight mechanisms that ensure AI technologies are designed and used responsibly. AI risk management, on the other hand, provides the tactical foundation for managing the specific threats identified within those governance structures.

While governance defines who makes the decisions and how accountability is maintained, risk management defines what risks exist and how they are controlled.

Stronger integration between governance and risk management leads to:

- Holistic Oversight: AI providers and internal teams collaborate to align security, ethics, and compliance goals.

- Automated Risk Controls: Embedding automated monitoring tools within governance workflows enables faster detection of anomalies or security risks.

- Cross-Functional Collaboration: Legal, compliance, data science, and cybersecurity teams share a unified risk taxonomy to enhance communication.

- Enhanced Trustworthiness: Transparency, documentation, and stakeholder engagement ensure that AI systems are explainable and auditable.

Ultimately, AI risk management serves as the operational backbone of AI governance—linking strategic policy decisions to measurable, enforceable outcomes that sustain organizational trustworthiness and public confidence.

Why Risk Management in AI Systems Is Important

The unique characteristics of AI introduce novel risk vectors. Traditional risk management tools often fall short in addressing the complexity of AI models, datasets, and automated outputs.

Here’s why AI-specific risk management is critical:

- Preventing Harm: In healthcare or criminal justice, AI decisions can directly impact human lives. Risk-based frameworks help mitigate harm to individuals and communities.

- Ensuring Fairness: AI systems trained on biased or incomplete datasets may discriminate against certain demographics, creating societal and legal consequences.

- Maintaining Trust: Users and stakeholders are more likely to adopt AI solutions that are safe, secure, and explainable.

- Meeting Compliance Requirements: Standards bodies such as the National Institute of Standards and Technology (NIST) and ISO emphasize risk-based approaches, which help organizations align with regulatory requirements.

- Protecting Sensitive Data: AI models often process large amounts of personal and proprietary information, making data privacy a top concern.

- Adapting to Real-World Use Cases: AI systems deployed in real-time settings must respond accurately to dynamic and unpredictable inputs.

What Is an AI Risk Management Framework?

An AI risk management framework is a structured set of principles, processes, and tools that guide organizations in identifying, evaluating, mitigating, and monitoring risks throughout the AI lifecycle. Unlike traditional risk management approaches, AI-specific frameworks address unique challenges such as model opacity, evolving datasets, and the ethical implications of automated decision-making.

A strong framework helps organizations operationalize AI security, trustworthiness, and governance across departments—ensuring that technical safeguards and policy controls evolve alongside technological advancements.

Key objectives of an AI risk management framework include:

- Standardization: Establish consistent methods for assessing and reporting AI-related risks.

- Prioritizing Risks: Rank potential threats based on their likelihood and potential impact, allowing teams to focus on the most critical vulnerabilities.

- Accountability and Oversight: Define clear ownership of risk across providers, developers, and business stakeholders.

- Integration with Compliance: Align with regulatory expectations such as the EU AI Act, NIST AI RMF, and ISO/IEC 23894.

- Continuous Improvement: Regularly update controls as AI models evolve and new security risks emerge.

By implementing a formalized framework, organizations can strengthen operational resilience, reduce their exposure to breaches of sensitive information, and foster public confidence in the reliability of AI systems.

What Is the NIST AI Risk Management Framework?

The NIST AI Risk Management Framework (AI RMF), released by the National Institute of Standards and Technology in January 2023, is a comprehensive guide designed to help organizations manage AI risks systematically. It provides a voluntary, rights-preserving, and use-case-agnostic roadmap for integrating risk-based decision-making into AI development and deployment.

Key features of the NIST AI RMF:

- Core Functions: The framework is organized into four functions: Govern, Map, Measure, and Manage. Govern is a cross-cutting function that informs the other three, and all four are applied iteratively throughout the AI lifecycle.

- Risk-Based Approach: Encourages organizations to assess the potential impact and likelihood of harm, then prioritize mitigation strategies accordingly.

- Stakeholder Engagement: Promotes collaboration across technical, operational, and governance teams, including data scientists, engineers, risk officers, and executives.

- Interpretable and Explainable AI: Stresses the importance of building interpretable, explainable, and trustworthy AI systems.

- Alignment with Industry Standards: The framework supports compatibility with global standards such as ISO/IEC 23894 and complements emerging regulatory requirements like the EU AI Act.

The NIST AI RMF is both forward-looking and adaptable, making it one of the most referenced methodologies for AI risk management in both the public and private sectors.

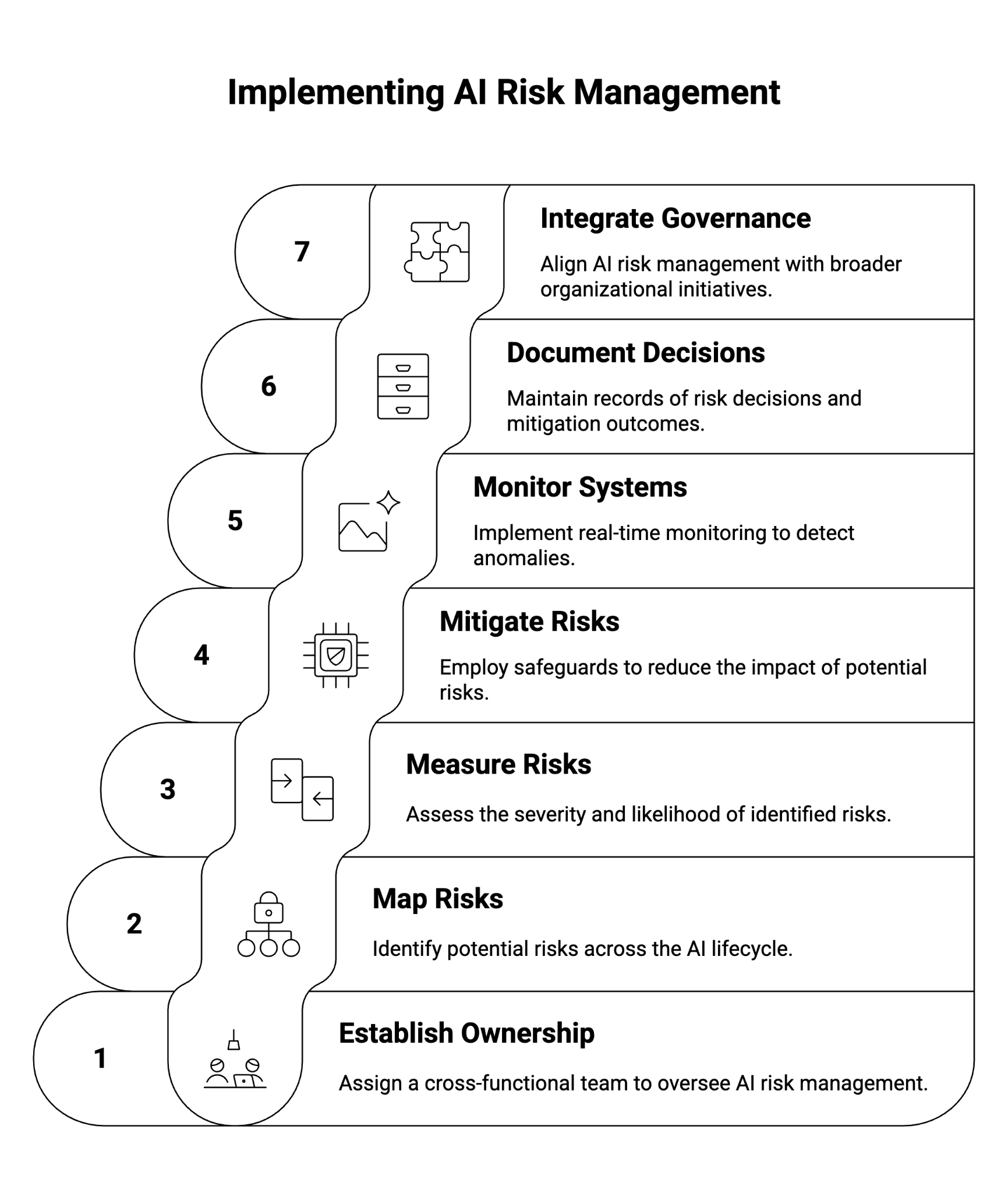

How to Implement an AI Risk Management Framework

Implementing a successful AI risk management framework requires a structured approach that spans technical, operational, and governance domains. Here are the key steps:

1. Establish AI Risk Management Ownership

- Assign a cross-functional team responsible for risk assessment, compliance, and continuous monitoring.

- Include roles from data science, cybersecurity, legal, and business units.

2. Map Risks Across the AI Lifecycle

- Identify potential risks during data collection, training, model development, validation, deployment, and monitoring.

- Account for both internal and external threats, including supply chain vulnerabilities.

3. Measure and Prioritize Risks

- Use qualitative and quantitative metrics to assess the severity and likelihood of each identified risk.

- Prioritize based on impact potential, regulatory exposure, and system criticality.
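
One common way to make this step concrete is a likelihood-times-impact score. The sketch below assumes 1 to 5 ordinal scales and uses regulatory exposure only as a tie-breaker; the `Risk` class, the scales, and the sample entries are illustrative assumptions rather than values prescribed by any framework.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)
    regulatory_exposure: bool = False

    def score(self) -> int:
        """Classic risk-matrix score: likelihood multiplied by impact."""
        return self.likelihood * self.impact


def prioritize(risks: list[Risk]) -> list[Risk]:
    """Sort highest score first; regulatory exposure breaks ties."""
    return sorted(
        risks,
        key=lambda r: (r.score(), r.regulatory_exposure),
        reverse=True,
    )


# Hypothetical register entries for illustration only.
register = [
    Risk("model drift", likelihood=3, impact=3),
    Risk("prompt injection", likelihood=4, impact=4),
    Risk("training data bias", likelihood=4, impact=4, regulatory_exposure=True),
]

for risk in prioritize(register):
    print(f"{risk.score():>2}  {risk.name}")
```

Ordinal scores like these are coarse, so they work best for ranking risks against each other, not as absolute measures of exposure.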

4. Mitigate Risks Using Targeted Controls

- Employ safeguards such as:

  - Bias detection tools

  - Input/output validation

  - Red teaming

  - Access controls

  - Differential privacy techniques

- Build robustness into AI models to withstand adversarial manipulation.
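
Of the safeguards in this step, bias detection is among the simplest to sketch. The example below computes the demographic parity difference, which is the gap between the highest and lowest rate of positive predictions across groups. It is only one of several fairness metrics, and the function names, sample data, and the 0.2 alert threshold are assumptions made for illustration.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical binary decisions (1 = approved) for two groups.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
grps = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap = demographic_parity_difference(preds, grps)
ALERT_THRESHOLD = 0.2  # assumed policy value, not a regulatory figure
print(f"gap={gap:.2f} flagged={gap > ALERT_THRESHOLD}")
```

A large gap does not prove unlawful discrimination on its own, but it is a useful trigger for the deeper explainability and data quality reviews described earlier.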

5. Monitor and Audit AI Systems

- Implement real-time monitoring to detect anomalies or shifts in behavior.

- Conduct regular audits to ensure that risk mitigation strategies remain effective.
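
A shift in model inputs or scores can be detected with a simple distribution comparison. The sketch below uses the Population Stability Index (PSI), a widely used drift statistic, to compare a baseline sample with recent values. The bin count, the `eps` floor for empty bins, and the sample data are assumptions; the 0.25 cut-off is a common rule of thumb, not a standard.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between two non-empty numeric samples.

    Bins are equal-width over the range of the baseline (expected) sample.
    Values outside that range are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each proportion at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical model scores: a uniform baseline and a shifted recent window.
baseline = [i / 100 for i in range(100)]
recent = [0.5 + i / 200 for i in range(100)]

DRIFT_THRESHOLD = 0.25  # rule-of-thumb value; tune per system
value = psi(baseline, recent)
print(f"PSI={value:.2f} drift={value > DRIFT_THRESHOLD}")
```

In practice a check like this would run on a schedule against production data, with alerts feeding the audit process described in this step.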

6. Document and Communicate Risk Decisions

- Maintain records of identified risks, risk-based decision-making, and mitigation outcomes.

- Communicate clearly with stakeholders and policymakers to promote transparency.
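
Risk decisions are easier to audit when they are recorded in a consistent, machine-readable shape. The following is a minimal sketch of a risk register entry serialized to JSON; the `RiskRecord` fields, the decision vocabulary, and the sample values are hypothetical and would need to match an organization's own taxonomy.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import date


@dataclass
class RiskRecord:
    risk_id: str
    description: str
    owner: str
    decision: str  # assumed vocabulary: "mitigate", "accept", "avoid", "transfer"
    rationale: str
    mitigations: list[str] = field(default_factory=list)
    # Defaults to today's date in ISO 8601 form when not supplied.
    recorded_on: str = field(default_factory=lambda: date.today().isoformat())


def to_json(record: RiskRecord) -> str:
    """Serialize a record with stable key order so diffs stay readable."""
    return json.dumps(asdict(record), indent=2, sort_keys=True)


# Hypothetical entry for illustration only.
entry = RiskRecord(
    risk_id="R-001",
    description="Recruitment model may under-select one applicant group",
    owner="data-science-lead",
    decision="mitigate",
    rationale="High impact on individuals and regulatory exposure",
    mitigations=["quarterly bias audit", "human review of rejections"],
    recorded_on="2025-01-01",
)

print(to_json(entry))
```

Keeping these records in version control or an append-only store gives auditors a history of who decided what and why.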

7. Integrate with Broader Governance and Compliance Initiatives

- Align AI risk management with:

  - Organizational cybersecurity programs

  - Responsible AI initiatives

  - Regulatory compliance efforts

  - Industry playbooks and benchmarks

Conclusion: Building Trust Through Effective AI Risk Management

As AI technologies become embedded in the fabric of modern life, organizations must take a risk-based approach to ensure safe, ethical, and lawful use. Effective AI risk management is not a one-time effort but a continuous process—rooted in governance, transparency, and accountability.

By adopting frameworks like the NIST AI Risk Management Framework, organizations can optimize AI performance while mitigating risks to people, systems, and society at large. With a solid foundation in risk management, stakeholders can confidently embrace AI’s potential while safeguarding against its pitfalls.

About WitnessAI

WitnessAI is the confidence layer for enterprise AI, providing the unified platform to observe, control, and protect all AI activity. We govern your entire workforce, human employees and AI agents alike, with network-level visibility and intent-based controls. We deliver runtime security for models, applications, and agents. Our single-tenant architecture ensures data sovereignty and compliance. Learn more at witness.ai.