What Are AI Regulations?

Calls for AI regulation have been steadily increasing since the early 2010s, driven by the rapid acceleration of artificial intelligence capabilities and their impact on society. High-profile incidents involving algorithmic bias, facial recognition misuse, and privacy violations have spotlighted the need for governance.

Early regulatory efforts such as the EU’s General Data Protection Regulation (GDPR), implemented in 2018, set the tone for global policy conversations. While not AI-specific, GDPR established foundational rights over personal data—forcing AI developers and companies to consider how AI systems collect, process, and store information. This law became a model for data protection worldwide and indirectly influenced AI legislation across regions.

Artificial intelligence (AI) regulations are legal frameworks, standards, and policies designed to govern the development, deployment, and use of AI systems. These regulations aim to ensure that AI technologies are implemented responsibly, ethically, and safely while fostering innovation and protecting public interest. As AI technologies such as generative AI and machine learning algorithms become increasingly embedded in sectors like healthcare, finance, law enforcement, and critical infrastructure, the call for trustworthy and transparent AI governance intensifies.

AI regulations can be enacted at various levels:

- International bodies create guiding principles.

- National governments enact binding laws.

- States and provinces develop localized mandates.

- Private and public sectors adopt self-regulatory measures.

These regulations address concerns ranging from algorithmic discrimination, personal data privacy, and intellectual property to national security threats like deepfakes and automated decision-making in law enforcement.

What Are the Specific Laws That Regulate AI?

Governments around the world are introducing AI-specific laws, as well as amending existing legal structures to cover AI-related concerns. These laws fall into several broad categories and are increasingly being enforced.

Real-World Enforcement Examples

- In 2023, Italy’s data protection agency temporarily banned ChatGPT over concerns about improper data collection, prompting OpenAI to implement new transparency measures.

- The Dutch Data Protection Authority fined the Dutch Tax and Customs Administration over unlawful and discriminatory data processing in its algorithm-assisted childcare benefits fraud detection.

- Clearview AI faced multiple lawsuits in the U.S. and Europe for scraping biometric data without consent, leading to regulatory penalties and operational restrictions.

Emerging Frameworks from 2024–2025

- India’s Digital Personal Data Protection Act (2023) lays groundwork for future AI regulation by focusing on consent, data fiduciaries, and cross-border data flow.

- South Korea introduced AI safety testing guidelines and passed its AI Basic Act, a framework law often compared to the EU AI Act.

- Singapore expanded its Model AI Governance Framework to address generative AI and foundation models.

- The UAE launched the Artificial Intelligence and Blockchain Council to oversee national AI ethics and regulation pilots.

These developments signal a shift from theoretical discussion to real-world enforcement and compliance obligations.

1. Comprehensive AI Laws

- EU AI Act: Categorizes AI systems by risk levels—unacceptable, high, limited, or minimal—and sets requirements for providers and deployers of high-risk AI systems.

- Blueprint for an AI Bill of Rights (U.S.): A non-binding White House blueprint outlining five principles to protect citizens in the age of AI, including data privacy, protection from algorithmic discrimination, and human oversight.
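As a rough illustration of the EU AI Act's tiered structure, an internal AI inventory could tag each system with one of the four risk levels. The sketch below is an assumption-heavy example, not legal guidance: `RiskTier`, `EXAMPLE_TIERS`, `triage`, and the use-case labels are hypothetical names, and the tier assignments follow commonly cited examples rather than a legal determination.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk levels named in the EU AI Act."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical inventory labels mapped to tiers. These assignments mirror
# commonly cited examples and are NOT a legal classification.
EXAMPLE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "resume_screening": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}


def triage(use_case: str) -> str:
    """Return a coarse next step for a use case in the example inventory."""
    tier = EXAMPLE_TIERS.get(use_case)
    if tier is None:
        # Anything not in the inventory is escalated rather than guessed.
        return "unclassified: needs legal review"
    if tier is RiskTier.UNACCEPTABLE:
        return "prohibited: do not deploy"
    if tier is RiskTier.HIGH:
        return "high risk: provider and deployer requirements apply"
    if tier is RiskTier.LIMITED:
        return "limited risk: check transparency obligations"
    return "minimal risk: no tier-specific requirements in this sketch"


print(triage("resume_screening"))
```

Returning "needs legal review" for unknown use cases is a deliberate choice in this sketch: the actual tier of a system depends on the Act's text and on how the system is used, so a lookup table can only be a starting point.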

2. Data Protection and Privacy Laws

- GDPR (EU): Applies to AI systems that process personal data, mandating lawful, transparent, and limited use.

- California Consumer Privacy Act (CCPA) and California Privacy Rights Act (CPRA): Influence AI data handling and user consent in the U.S.

3. Sector-Specific Laws

- Healthcare: HIPAA (U.S.) and the EU’s Medical Device Regulation (MDR) govern AI applications in diagnostics and medical devices.

- Finance: The Equal Credit Opportunity Act (U.S.) prohibits discrimination in credit decisions, including those made by algorithms, while PSD2 (EU) governs payment services and access to payment account data.

4. Algorithmic Accountability Laws

- NYC Local Law 144: Requires annual bias audits for automated employment decision tools.

- Colorado SB 21-169: Protects consumers from algorithmic discrimination in insurance underwriting.
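One metric commonly reported in bias audits of hiring tools is the impact ratio: each group's selection rate divided by the selection rate of the most-selected group. The following is a minimal sketch of that calculation on made-up data; the function names and sample records are hypothetical, and the methodology required for an actual audit is set by the applicable rules, not by this example.

```python
from collections import Counter


def selection_rates(records):
    """Compute the selection rate per group from (group, selected) pairs."""
    totals = Counter()
    selected = Counter()
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def impact_ratios(records):
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(records)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any selections; ratios are undefined")
    return {g: rate / best for g, rate in rates.items()}


# Made-up sample: group A selected 4 of 10, group B selected 2 of 10.
sample = (
    [("A", True)] * 4 + [("A", False)] * 6
    + [("B", True)] * 2 + [("B", False)] * 8
)
ratios = impact_ratios(sample)
print(ratios)  # {'A': 1.0, 'B': 0.5}
```

In this sample, group B is selected at half the rate of group A. Whether a given ratio is acceptable is a legal and policy question that the calculation alone does not answer.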

5. Cybersecurity and Critical Infrastructure

- NIST AI Risk Management Framework (U.S.): Offers voluntary guidance for secure and responsible AI use.

- Executive Order on Safe, Secure, and Trustworthy AI (2023): Established federal principles for responsible AI innovation and oversight; it was rescinded in January 2025.

How Do Different Countries Approach AI Regulation?

India

India has taken a phased approach to AI regulation. The Digital Personal Data Protection Act (DPDPA), enacted in 2023, sets the foundation for data-driven AI governance with consent requirements and rules on cross-border data transfers. The IndiaAI Mission (2024) aims to develop ethical standards, while the Ministry of Electronics and IT is drafting dedicated AI legislation addressing algorithmic transparency and discrimination. India’s National Strategy for AI also promotes responsible innovation in agriculture, healthcare, and education.

South Korea

South Korea’s AI Basic Act, passed in December 2024 and scheduled to take effect in January 2026, emphasizes safe and ethical AI deployment, focusing on national security, healthcare, and manufacturing. The Ministry of Science and ICT introduced AI safety testing centers and national certification schemes. The government also funds education programs to train developers in responsible AI, ensuring technology aligns with ethical norms.

Singapore

Singapore continues to lead in AI governance. The Model AI Governance Framework for Generative AI (2024) extends the earlier Model AI Governance Framework to cover generative AI, transparency reporting, and human oversight. The framework is voluntary; the Personal Data Protection Commission (PDPC) and the Infocomm Media Development Authority (IMDA) promote its principles through regulatory sandboxes, allowing companies to test AI systems safely while remaining compliant.

United Arab Emirates (UAE)

The UAE maintains one of the world’s most forward-looking AI governance strategies. Through the Artificial Intelligence and Blockchain Council and Minister of State for AI, the UAE is developing national standards for AI ethics, data privacy, and sector-specific regulation. Pilot initiatives in transportation, energy, and smart cities test AI accountability mechanisms before full rollout.

Australia

Australia’s approach focuses on guidance and ethical principles rather than strict legislation. The AI Ethics Framework promotes fairness, transparency, and human-centered values. The Department of Industry, Science and Resources is evaluating public feedback on potential legislation while expanding funding for trustworthy AI research.

Brazil

Brazil’s General Data Protection Law (LGPD) serves as a key foundation for AI governance. Proposed AI Bill PL 2338/23, currently under review, introduces risk-based regulation similar to the EU AI Act. The government aims to balance innovation with accountability, particularly in public-sector automation.

Canada

Canada’s proposed Artificial Intelligence and Data Act (AIDA), introduced as part of Bill C-27 (the Digital Charter Implementation Act), would regulate “high-impact” AI systems, emphasizing fairness, transparency, and human oversight. The bill lapsed when Parliament was prorogued in January 2025, so AIDA has not taken effect. In the meantime, existing privacy laws enforced by the Office of the Privacy Commissioner (OPC) continue to apply to AI systems.

China

China enforces some of the most comprehensive AI regulations globally. The Interim Measures for Generative AI (2023), Deep Synthesis Regulations (2022), and Algorithmic Recommendation Guidelines (2022) impose content and safety requirements on AI providers. The Cyberspace Administration of China (CAC) oversees compliance, emphasizing content moderation, national security, and societal harmony.

Council of Europe

The Framework Convention on AI, Human Rights, Democracy, and the Rule of Law (2024) represents the first binding international treaty on AI governance. It focuses on safeguarding human rights and democratic principles across member states.

European Union

The EU AI Act (2024) is the world’s first comprehensive AI law. It mandates risk-based classification, transparency obligations, and penalties for non-compliance. The EU is also developing AI liability reforms and coordinating with the European Data Protection Board (EDPB) for joint enforcement.

Germany

Germany’s AI Strategy 2025 complements the EU AI Act by promoting innovation in industry and automation while ensuring robust oversight. National initiatives fund explainable AI research and ethical development in automotive manufacturing and robotics.

Israel

Israel’s regulatory philosophy blends innovation with ethics. The Israel Innovation Authority and Ministry of Innovation released national AI principles promoting safety and fairness. Sectoral regulations in healthcare and cybersecurity are being drafted to ensure responsible deployment of advanced AI models.

Italy

Italy’s Garante per la Protezione dei Dati Personali remains one of Europe’s most active AI regulators, enforcing GDPR violations and leading EU discussions on generative AI ethics. In 2024, Italy established a national committee to study algorithmic accountability in public services.

Morocco

Morocco is developing its National AI Strategy 2030, emphasizing responsible use in education, agriculture, and public service. The country collaborates with the African Union on AI ethics frameworks aligned with continental priorities.

New Zealand

New Zealand’s Algorithm Charter for Aotearoa guides ethical AI use in government. In 2024, new public-sector audits evaluated algorithmic decision-making transparency and bias, integrating Māori ethical frameworks into AI governance.

Philippines

The Philippines’ AI Roadmap (DTI) outlines plans to establish AI research centers and develop an AI regulatory framework emphasizing job creation, education, and industrial innovation. Regulatory principles prioritize transparency and equitable access to AI benefits.

Spain

Spain created the Spanish Agency for the Supervision of Artificial Intelligence (AESIA), the EU’s first national AI regulator. AESIA enforces compliance with both national laws and the EU AI Act, offering a model for other countries establishing AI oversight agencies.

Switzerland

Switzerland promotes a “soft law” approach, applying existing privacy and consumer protection laws to AI. The Federal Council supports voluntary AI ethics standards aligned with OECD principles, encouraging transparency and innovation.

United Kingdom

The UK’s AI Regulation White Paper (2023) outlines a flexible, sector-based approach focusing on pro-innovation governance. Regulators like the FCA, ICO, and CMA coordinate oversight. In November 2023, the UK launched the AI Safety Institute (renamed the AI Security Institute in 2025) to test foundation models and assess risk before deployment.

United States

The U.S. lacks a single federal AI law but has robust sectoral and state-level frameworks.

- Federal Level: NIST’s AI RMF and agency-specific guidelines collectively form a de facto regulatory framework. The White House’s 2023 Executive Order on Safe, Secure, and Trustworthy AI was part of that framework until it was rescinded in January 2025.

- State Level:

  - California: Enforces data privacy through CCPA/CPRA.

  - New York: Requires bias audits for automated employment systems.

  - Colorado: Protects consumers from algorithmic discrimination.

  - Illinois and Massachusetts: Lead on biometric data and facial recognition laws.

How Are States Currently Regulating AI Systems?

Several U.S. states are setting precedents for AI regulation, targeting areas such as biometric data, algorithmic transparency, and consumer rights. These laws focus on:

- Biometric data collection and use

- Automated decision-making in employment and lending

- Algorithmic explainability and fairness

- Data privacy and consumer consent

As state-level frameworks multiply, companies must implement unified compliance programs that map requirements across jurisdictions.
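One way to picture such a mapping is a lookup from each state to the obligations it triggers, combined across every state where a company operates. The sketch below is illustrative only: `STATE_REQUIREMENTS`, its tags, and `unified_requirements` are hypothetical names loosely based on the state examples in this article, not a complete or authoritative legal mapping.

```python
# Hypothetical requirement tags per state, loosely based on the state
# examples in this article; not a complete or authoritative legal mapping.
STATE_REQUIREMENTS = {
    "California": {"data_privacy"},
    "New York": {"employment_bias_audit"},
    "Colorado": {"algorithmic_discrimination"},
    "Illinois": {"biometric_data"},
}


def unified_requirements(states):
    """Return the combined requirement tags and any states with no mapping."""
    combined = set()
    unmapped = []
    for state in states:
        tags = STATE_REQUIREMENTS.get(state)
        if tags is None:
            # Surface gaps explicitly instead of silently assuming "no rules".
            unmapped.append(state)
        else:
            combined |= tags
    return sorted(combined), unmapped


tags, unmapped = unified_requirements(["California", "New York", "Texas"])
print(tags)      # ['data_privacy', 'employment_bias_audit']
print(unmapped)  # ['Texas']
```

Reporting unmapped states separately matters in practice: a missing entry means the mapping has not been researched yet, which is different from a state having no applicable requirements.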

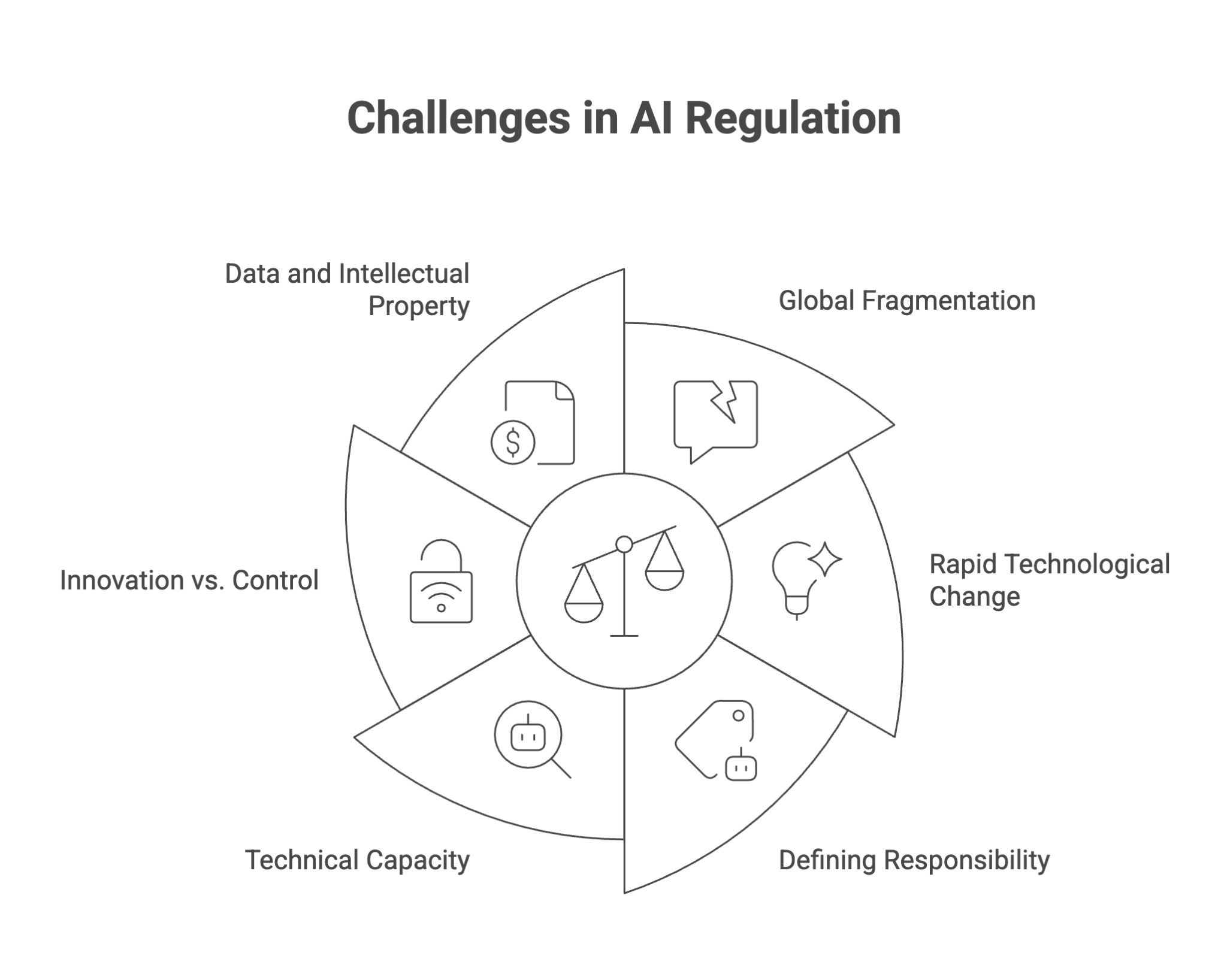

What Are the Challenges in Creating Effective AI Regulations?

Designing strong yet flexible regulation of AI remains one of the most complex legal challenges of the 21st century.

1. Global Fragmentation

Different definitions and priorities across jurisdictions hinder international alignment. AI companies must navigate overlapping requirements between the EU, U.S., and Asia.

2. Rapid Technological Change

AI evolves faster than laws. Emerging technology like multimodal systems, generative models, and autonomous agents challenges traditional legal frameworks.

3. Defining Responsibility

When AI outputs cause harm or bias, determining liability—developer, deployer, or user—remains ambiguous under existing laws.

4. Technical Capacity

Many regulators lack the tools or expertise to evaluate complex models. Working groups and AI offices are being established globally to close this enforcement gap.

5. Innovation vs. Control

Overregulation risks stifling AI innovation. Policymakers must balance safety with flexibility, ensuring responsible experimentation through regulatory sandboxes.

6. Data and Intellectual Property

AI models rely on large datasets, raising questions about ownership, copyright, and fair use—especially for chatbots and generative models that reuse creative content.

How Do AI Regulations Impact Businesses and Innovation?

AI regulations have both positive and negative implications for businesses:

Positive Impacts

- Increased Trust and Transparency: Regulations improve public confidence, encouraging adoption of AI-powered solutions.

- Market Differentiation: Compliant organizations can position themselves as responsible leaders in their sectors.

- Improved Data Governance: Stronger data management and documentation processes reduce operational risk.

- Long-Term Sustainability: Ethical AI practices align with environmental, social, and governance (ESG) goals.

Negative Impacts

- Compliance Complexity: Navigating overlapping laws across multiple jurisdictions increases legal and administrative costs.

- Innovation Bottlenecks: Startups and SMEs may face barriers due to regulatory overhead.

- Operational Friction: Continuous audits, documentation, and transparency requirements can slow deployment cycles.

- Unclear Accountability: In complex AI supply chains, defining liability between providers and deployers remains challenging.

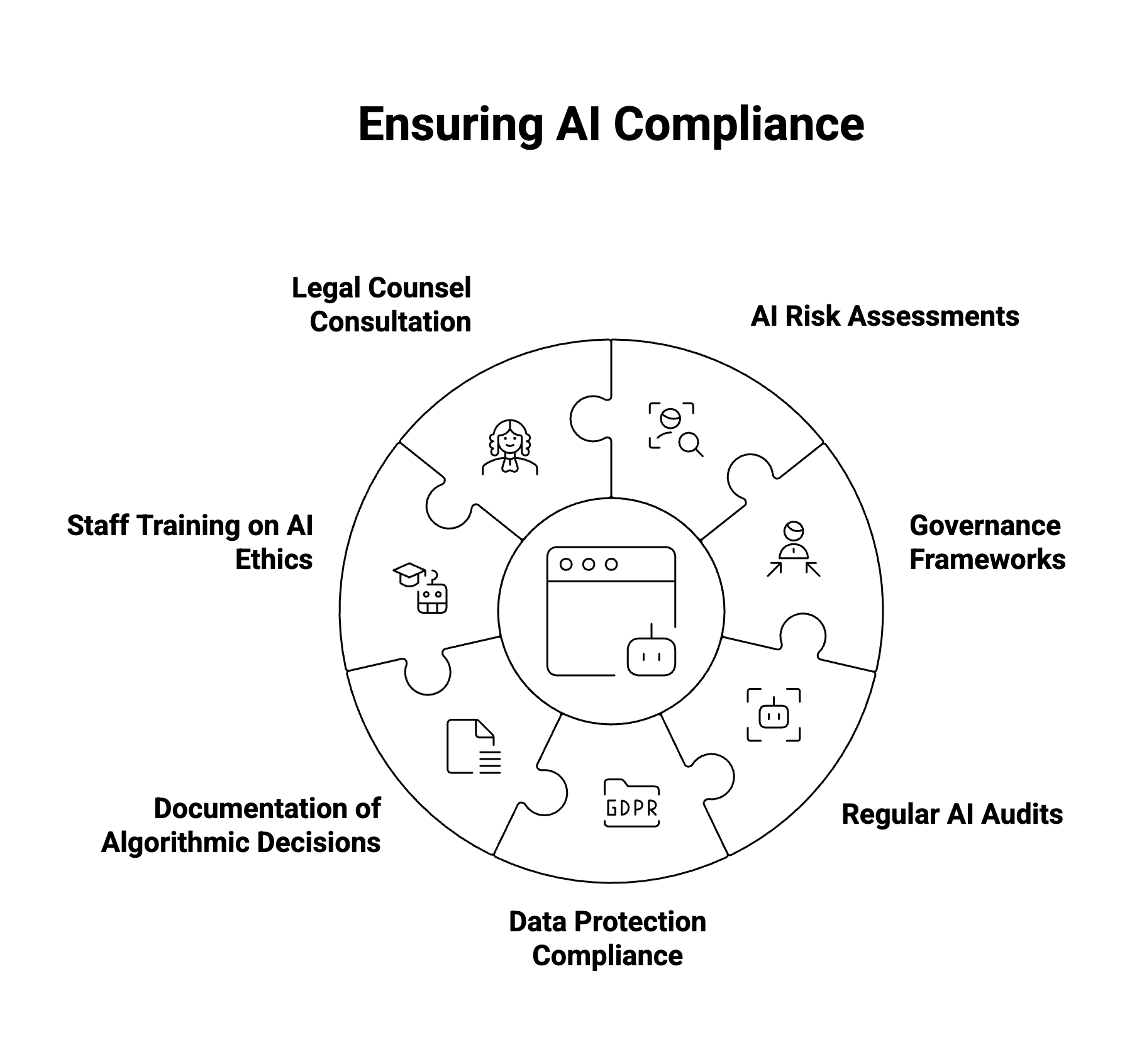

How Can You Make Sure Your AI Efforts Are Compliant?

Organizations can follow these best practices to align with emerging AI regulatory frameworks:

All Organizations

- Conduct AI Risk Assessments: Identify risks associated with the use of AI applications and datasets.

- Implement Governance Frameworks: Establish internal AI oversight committees and protocols.

- Audit AI Systems Regularly: Evaluate AI models for bias, fairness, and accuracy.

- Ensure Data Protection Compliance: Align with GDPR, CCPA, and other privacy laws.

- Document Algorithmic Decisions: Maintain logs and documentation for high-risk AI use cases.

- Train Staff on AI Ethics and Compliance: Educate employees on the legal and ethical use of AI tools.

- Work With Legal Counsel: Consult experts on AI regulation and emerging compliance requirements.
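The "Document Algorithmic Decisions" practice above can be sketched as an append-only log with one record per automated decision. This is a minimal example under stated assumptions: the field names, the model identifiers, and the choice of JSON Lines are all hypothetical, and real retention and content requirements depend on the laws that apply.

```python
import hashlib
import json
from datetime import datetime, timezone


def decision_record(model_id, model_version, features, outcome):
    """Build a JSON-serializable audit record for one automated decision."""
    # Hash a canonical form of the inputs so a later audit can verify which
    # inputs were used without the log storing the raw values.
    canonical = json.dumps(features, sort_keys=True).encode("utf-8")
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_id": model_id,
        "model_version": model_version,
        "input_sha256": hashlib.sha256(canonical).hexdigest(),
        "outcome": outcome,
    }


def append_record(path, record):
    """Append one record as a line of JSON (JSON Lines format)."""
    with open(path, "a", encoding="utf-8") as handle:
        handle.write(json.dumps(record) + "\n")


# Hypothetical model name and inputs, for illustration only.
record = decision_record(
    "credit_scoring", "1.2.0", {"income": 52000, "tenure": 3}, "approved"
)
print(record["outcome"], len(record["input_sha256"]))
```

Note that hashing inputs is not the same as anonymizing them: low-entropy values can be guessed and re-hashed, so whether a hash is appropriate for personal data is a question for privacy counsel.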

Small and Medium Enterprises (SMEs)

- Use Pre-Built Responsible AI Tools: Leverage tools like Microsoft’s Responsible AI Dashboard or Google’s Model Cards to assess and document model risks.

- Outsource Risk Audits: Engage third-party assessors for bias detection and data privacy compliance.

- Focus on Transparency and Explainability: Use open-source toolkits like LIME or SHAP to help customers understand AI decisions.

Large Enterprises

- Deploy AI Governance Platforms: Tools like IBM Watson OpenScale, Fiddler AI, or Arthur help monitor model drift, fairness, and compliance.

- Create Cross-Functional AI Committees: Include legal, technical, and operational leads to guide enterprise-wide governance.

- Conduct Continuous Monitoring: Automate logging and alerts for compliance violations in real-time AI systems.
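As a simplified picture of continuous monitoring, a service could track a rolling average of a fairness or accuracy metric and raise an alert when it falls below a chosen floor. The sketch below makes several assumptions: `MetricMonitor` is a hypothetical class, and the metric name, window size, and 0.80 threshold are placeholders rather than values taken from any regulation or from the platforms named above.

```python
from collections import deque


class MetricMonitor:
    """Track a rolling average of a metric and flag threshold breaches."""

    def __init__(self, name, minimum, window=5):
        self.name = name
        self.minimum = minimum
        # A bounded deque drops the oldest value once the window is full.
        self.values = deque(maxlen=window)

    def observe(self, value):
        """Record a value; return an alert string if the rolling mean is too low."""
        self.values.append(value)
        mean = sum(self.values) / len(self.values)
        if mean < self.minimum:
            return f"ALERT {self.name}: rolling mean {mean:.2f} below {self.minimum:.2f}"
        return None


# Placeholder metric name and threshold, for illustration only.
monitor = MetricMonitor("impact_ratio", minimum=0.80, window=3)
alerts = [monitor.observe(v) for v in (0.95, 0.90, 0.60, 0.55)]
print(alerts[-1])  # ALERT impact_ratio: rolling mean 0.68 below 0.80
```

Averaging over a window keeps a single noisy reading from triggering an alert, at the cost of reacting more slowly to a sudden drop; the right trade-off depends on how quickly the system's decisions affect people.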

AI Regulation Tracker

Keeping up with the fast-changing regulation of AI is a full-time challenge. To simplify this, WitnessAI created the AI Regulation Tracker—an interactive resource that visualizes current and proposed AI laws worldwide.

The tool allows users to:

- Search and filter by region, country, or framework type.

- Track new legislation like the EU Artificial Intelligence Act, Canada’s AIDA, or state-level U.S. initiatives.

- Access summaries of existing laws, compliance timelines, and risk mitigation best practices.

This tracker helps compliance teams, policymakers, and business leaders stay informed about the evolving global landscape—empowering proactive governance and strategic decision-making.

The Future of AI Regulation

The next decade will likely bring greater international harmonization in AI governance. Organizations like the OECD, UNESCO, and the G7 are pushing toward unified global standards that promote safety without stifling innovation.

Future regulations will expand into new domains—intellectual property for AI-generated works, labor market effects, and environmental sustainability of large-scale model training. Regulatory sandboxes and dedicated AI agencies (like Spain’s AESIA and the UK’s AI Safety Institute) will play a growing role in shaping safe innovation.

Ultimately, success will depend on collaboration between governments, academia, and industry to ensure AI remains both powerful and aligned with human values.

Conclusion

As artificial intelligence continues to evolve, the global patchwork of AI regulations is becoming more sophisticated and interdependent. From the EU AI Act to emerging frameworks in Asia, the Americas, and Africa, a coordinated response is forming to ensure responsible innovation.

For businesses, compliance is no longer optional—it’s strategic. Companies that embed AI governance and ethical design into their operations will not only meet legal obligations but also build lasting trust with customers and regulators alike.

The future of AI regulation will define how society harnesses artificial intelligence: responsibly, transparently, and for the collective good.

About WitnessAI

WitnessAI enables safe and effective adoption of enterprise AI through security and governance guardrails for public and private LLMs. The WitnessAI Secure AI Enablement Platform provides visibility of employee AI use, control of that use via AI-oriented policy, and protection of that use via data and topic security. Learn more at witness.ai.