What is AI Privacy?

AI privacy refers to the principles, practices, and safeguards designed to ensure that artificial intelligence (AI) systems protect individual privacy rights during data collection, processing, and decision-making. As AI technologies evolve—particularly in areas like machine learning algorithms, generative AI, and facial recognition—their capacity to process vast quantities of personal data raises serious privacy concerns.

From healthcare diagnostics to social media monitoring and chatbots like ChatGPT, AI applications rely on user data to train models and generate outputs. But without stringent privacy protection mechanisms in place, this data can be misused, exposed, or exploited, endangering consumer privacy and regulatory compliance. As such, AI privacy has become a critical focal point for policymakers, technologists, and stakeholders across jurisdictions.

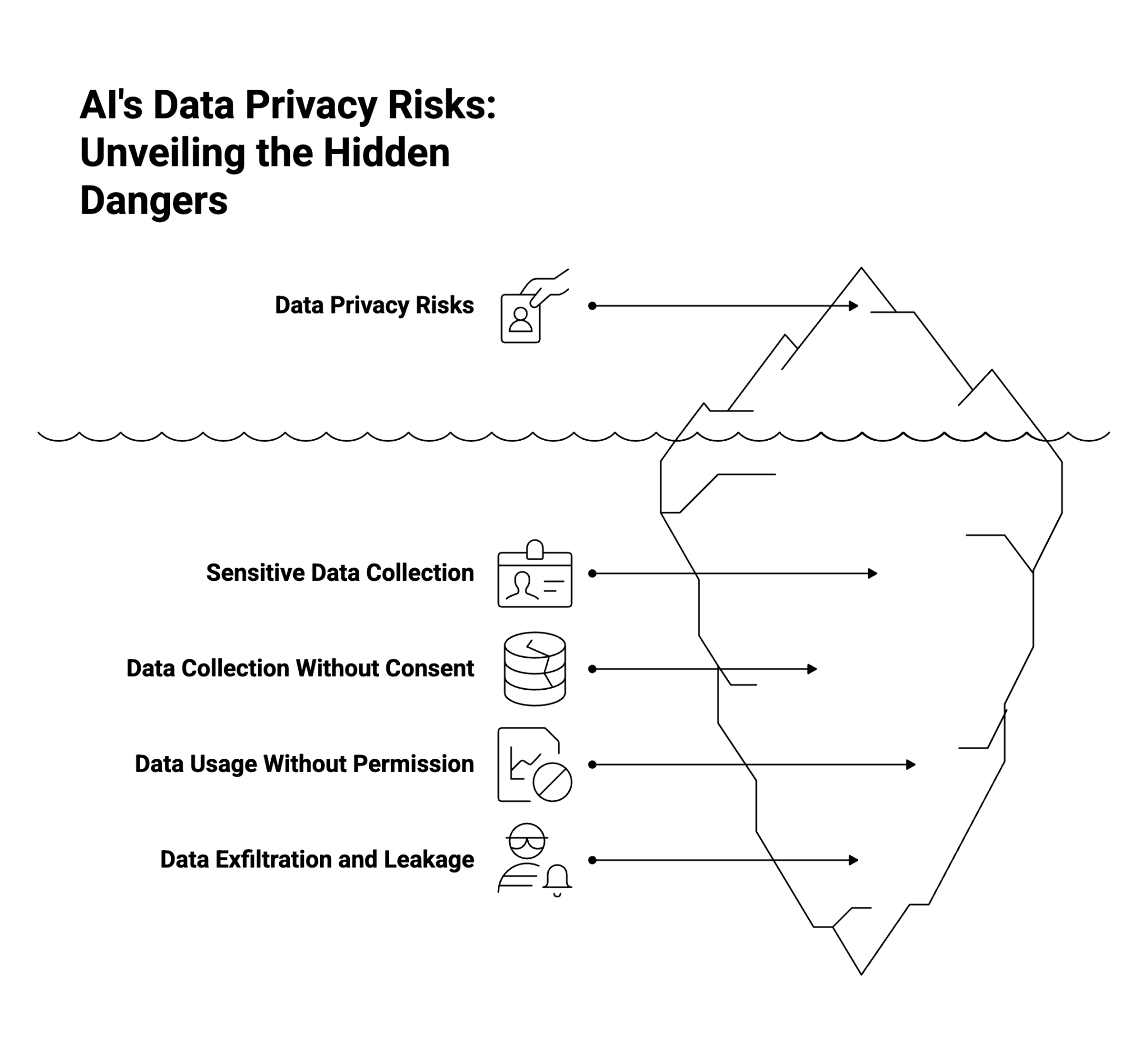

What Are the Data Privacy Risks of AI?

Collecting Sensitive Data

AI systems frequently ingest and process sensitive information such as biometric data, financial records, and details related to sexual orientation, health, or identity. The indiscriminate use of such data, especially without adequate anonymization or safeguards, creates high-risk scenarios that can result in identity theft, discrimination, or reputational harm.

Collecting Data Without Consent

Some AI-driven platforms collect user data without explicit consent—often by scraping public content or harvesting information from social media. This raises ethical and legal questions regarding the scope of informed consent and challenges the core principles of privacy laws like the General Data Protection Regulation (GDPR).

Using Data Without Permission

AI tools often reuse training datasets containing user data in ways not originally intended. For example, large language models (LLMs) may inadvertently generate responses that reference or infer details about real individuals. Repurposing data in this way undermines trust and conflicts with the principle of purpose limitation, which, alongside data minimization, is a cornerstone of responsible data governance.

Data Exfiltration and Leakage

As AI systems interface with numerous applications and APIs, they become potential vectors for data breaches and exfiltration. AI privacy issues are compounded when sensitive information is leaked through poorly secured outputs or via adversarial attacks such as prompt injection or model inversion.
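
One way to reduce leakage through model outputs is to filter responses before they leave the system. The sketch below is a minimal illustration, not a complete safeguard: the function name `redact_output` and both regular expressions are assumptions made for this example, and real deployments would need broader, locale-aware detection.

```python
import re

# Illustrative patterns only (assumptions for this sketch).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")  # US SSN-shaped numbers


def redact_output(text: str) -> str:
    """Replace email addresses and SSN-shaped numbers with placeholders."""
    text = EMAIL_RE.sub("[REDACTED_EMAIL]", text)
    text = SSN_RE.sub("[REDACTED_SSN]", text)
    return text


if __name__ == "__main__":
    print(redact_output("Contact jane.doe@example.com, SSN 123-45-6789."))
```

Pattern-based redaction catches only well-formed identifiers, so it works best as one layer among several rather than as the sole control.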

Real-World Examples of AI Privacy Issues

Facial Recognition Surveillance

Facial recognition technologies have triggered global privacy debates. In several high-profile cases, law enforcement and private entities used AI systems to track individuals without consent. For instance, Clearview AI's large-scale scraping of social media images sparked lawsuits and regulatory enforcement actions in multiple countries for violating privacy and biometric data protection laws.

Voice Assistants and Accidental Recordings

Smart assistants like Alexa and Google Assistant have been found to record and store private conversations, sometimes inadvertently. Some of these recordings were reviewed by human contractors for "quality control," which illustrates how AI systems can unintentionally violate user privacy.

Chatbot Data Retention

Generative AI tools trained on user prompts have occasionally stored sensitive or confidential data. In one instance, an enterprise user’s proprietary information was unintentionally retained in a chatbot’s training dataset, posing significant intellectual property and privacy risks.

These examples highlight that AI privacy violations are not theoretical—they have direct legal, ethical, and reputational consequences.

How Is AI Collecting and Using Data?

Personal Data

Personal data used in AI development includes names, email addresses, geolocation, facial images, voice recordings, and behavioral patterns. These data points feed into machine learning algorithms to improve personalization, recommendation engines, and real-time decision-making. However, without safeguards, they also expose users to privacy breaches.

Public Data

AI systems also leverage massive amounts of publicly available data—such as social media content, online forums, and scraped websites—for training purposes. While technically accessible, the use of public data raises ethical questions about context, user expectations, and the potential for misuse in profiling or behavioral prediction.

How to Mitigate AI Privacy Risks

Data Minimization

Organizations should implement data minimization principles by collecting only what is strictly necessary for the intended AI use cases. This reduces the risk of overexposure and supports compliance with regulations like the GDPR, whose Article 5 names data minimization as a core principle, and the EU AI Act.
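
In code, data minimization can be as simple as an allowlist applied before a record enters an AI pipeline. The following is a minimal sketch; the field names and the `minimize` helper are hypothetical and would depend on the actual use case.

```python
# Hypothetical allowlist: the only fields this use case is assumed to need.
ALLOWED_FIELDS = {"age_band", "region", "purchase_category"}


def minimize(record: dict) -> dict:
    """Return a copy of the record containing only allowlisted fields."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}


if __name__ == "__main__":
    raw = {
        "name": "Jane Doe",
        "email": "jane.doe@example.com",
        "age_band": "30-39",
        "region": "EU",
        "purchase_category": "books",
    }
    print(minimize(raw))
```

An allowlist fails closed: a field added upstream later is dropped by default until someone decides it is needed.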

Encryption

Robust encryption mechanisms—both at rest and in transit—should be applied to all personal and sensitive data. This prevents unauthorized access, secures training datasets, and mitigates the impact of potential data breaches or cyberattacks.
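
For the in-transit half of this advice, the sketch below builds a TLS client configuration with Python's standard `ssl` module. The helper name `strict_tls_context` is an assumption for illustration. Encryption at rest is not shown, since it typically relies on a vetted cryptography library or on platform-level disk and database encryption.

```python
import ssl


def strict_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS context that rejects outdated protocol versions."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    # Refuse anything older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


if __name__ == "__main__":
    ctx = strict_tls_context()
    print(ctx.verify_mode, ctx.check_hostname, ctx.minimum_version)
```

The returned context can be passed to standard-library clients, for example through the `context` parameter of `urllib.request.urlopen`.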

Transparent Data Use Policies

Organizations must clearly disclose how personal data is being collected, processed, and shared by AI models. Transparent privacy policies, consent management tools, and audit trails are essential to establishing trust with users and meeting legal obligations across jurisdictions.
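
Consent management and audit trails can be combined so that every check against a user's consent is itself recorded. The sketch below is a simplified in-memory illustration; the `ConsentLedger` class, its method names, and the purpose labels are all hypothetical, and a production system would use durable, tamper-evident storage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentLedger:
    """Hypothetical in-memory consent store with an append-only audit log."""

    grants: dict = field(default_factory=dict)    # (user_id, purpose) -> bool
    audit_log: list = field(default_factory=list)

    def record(self, user_id: str, purpose: str, granted: bool) -> None:
        """Store a user's consent decision for one processing purpose."""
        self.grants[(user_id, purpose)] = granted
        self._log("consent_recorded", user_id, purpose, granted)

    def is_allowed(self, user_id: str, purpose: str) -> bool:
        """Check consent; absence of a record is treated as refusal."""
        allowed = self.grants.get((user_id, purpose), False)
        self._log("access_checked", user_id, purpose, allowed)
        return allowed

    def _log(self, event: str, user_id: str, purpose: str, outcome: bool) -> None:
        self.audit_log.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "event": event,
            "user_id": user_id,
            "purpose": purpose,
            "outcome": outcome,
        })


if __name__ == "__main__":
    ledger = ConsentLedger()
    ledger.record("user-1", "model_training", True)
    print(ledger.is_allowed("user-1", "model_training"))
    print(ledger.is_allowed("user-1", "marketing"))
    print(len(ledger.audit_log))
```

Treating a missing record as refusal keeps the default aligned with explicit consent: processing is allowed only for purposes the user has agreed to.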

AI Privacy Protection Legislation

GDPR (General Data Protection Regulation)

The GDPR is widely regarded as one of the most comprehensive privacy laws in the world, setting stringent requirements for data collection, processing, and user consent. It requires a Data Protection Impact Assessment (DPIA) for processing that is likely to pose a high risk to individuals, which covers many AI uses such as large-scale profiling and automated decision-making. Violations can lead to fines of up to €20 million or 4% of global annual turnover, whichever is higher, as well as reputational damage.

EU AI Act

The European Union's AI Act complements the GDPR by introducing risk-based classifications for AI systems. High-risk AI applications, such as those used in law enforcement or biometric identification, must meet strict data governance and transparency requirements. The act also prohibits certain AI-driven surveillance techniques deemed a threat to fundamental rights, such as the untargeted scraping of facial images to build recognition databases.

U.S. Privacy Regulations

In the United States, privacy protections are more fragmented. While there is no federal AI privacy law, individual states have enacted regulations such as the California Consumer Privacy Act (CCPA), the California Privacy Rights Act (CPRA), which amended and expanded the CCPA, and the Virginia Consumer Data Protection Act (VCDPA). These laws grant consumers rights to access and delete their personal data and to opt out of its sale, of targeted advertising, and of certain profiling or automated decision-making.

How Can AI Be Designed to Protect User Privacy?

Privacy by Design and Default

AI systems should embed privacy principles directly into architecture and workflows. This means default settings that favor minimal data retention, limited access permissions, and anonymization before analysis.
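
As a rough illustration of privacy-protective defaults, the sketch below pairs a settings object whose defaults favor short retention with a keyed-hash helper that replaces raw identifiers before analysis. The class name, field names, default values, and `pseudonymize` function are assumptions for this example. Keyed hashing is pseudonymization rather than full anonymization, and pseudonymized data is still treated as personal data under the GDPR.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class PrivacyDefaults:
    """Hypothetical settings whose defaults favor privacy unless overridden."""

    retention_days: int = 30             # keep data for a short period by default
    store_raw_identifiers: bool = False  # raw IDs are not persisted by default
    share_with_third_parties: bool = False


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC).

    The same identifier and key always yield the same token, so records can
    still be joined, but the token cannot be recomputed without the key.
    """
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


if __name__ == "__main__":
    settings = PrivacyDefaults()
    key = b"example-key-load-from-a-secrets-manager"  # placeholder, not a real key
    if not settings.store_raw_identifiers:
        print(pseudonymize("jane.doe@example.com", key))
```

Using a keyed hash instead of a plain hash matters because common identifiers such as email addresses are easy to guess and hash, which would otherwise allow re-identification.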

Explainability and Accountability

Building explainable AI helps users understand how their data influences outcomes. Transparent models also support audits, enabling organizations to trace potential misuse and ensure accountability.

Regular Model Audits

Routine auditing of datasets, training pipelines, and outputs helps detect privacy vulnerabilities before deployment. Automated monitoring can flag risks such as bias, data drift, or unauthorized access in real time.
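
A dataset audit can start with something as basic as counting identifier-like strings in training text before it reaches a model. The sketch below assumes two illustrative patterns and a hypothetical `audit_records` helper. It would miss many kinds of personal data, so it is a starting point rather than a full audit.

```python
import re
from collections import Counter

# Illustrative patterns only (assumptions for this sketch).
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def audit_records(records: list) -> dict:
    """Count pattern matches per category across a list of text records."""
    findings = Counter()
    for text in records:
        for label, pattern in PATTERNS.items():
            findings[label] += len(pattern.findall(text))
    return dict(findings)


if __name__ == "__main__":
    sample = ["mail bob@example.org now", "call 555-123-4567", "nothing sensitive"]
    print(audit_records(sample))
```

Running a check like this on every pipeline run, and failing the run when counts exceed an agreed threshold, is one way to catch problems before deployment.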

What Can Companies Do to Reduce AI Privacy Risks?

Build a Data Governance Framework

Establish governance structures that define who owns, accesses, and audits data. Integrate privacy risk management into broader AI governance policies to ensure accountability.

Train Teams on Responsible AI Use

Privacy protection is not just a technical issue—it’s cultural. Regular training helps teams understand legal obligations, identify red flags, and apply privacy-by-design principles consistently.

Monitor and Audit Continuously

AI privacy risks evolve with new technologies. Continuous monitoring, logging, and reporting ensure ongoing compliance with regulations like the GDPR, with standards such as ISO/IEC 42001 (AI management systems), and with upcoming AI-specific standards.

Partner with Trusted Providers

When using third-party AI services or APIs, ensure vendors comply with recognized privacy frameworks. Conduct due diligence on their data handling, model transparency, and security practices.

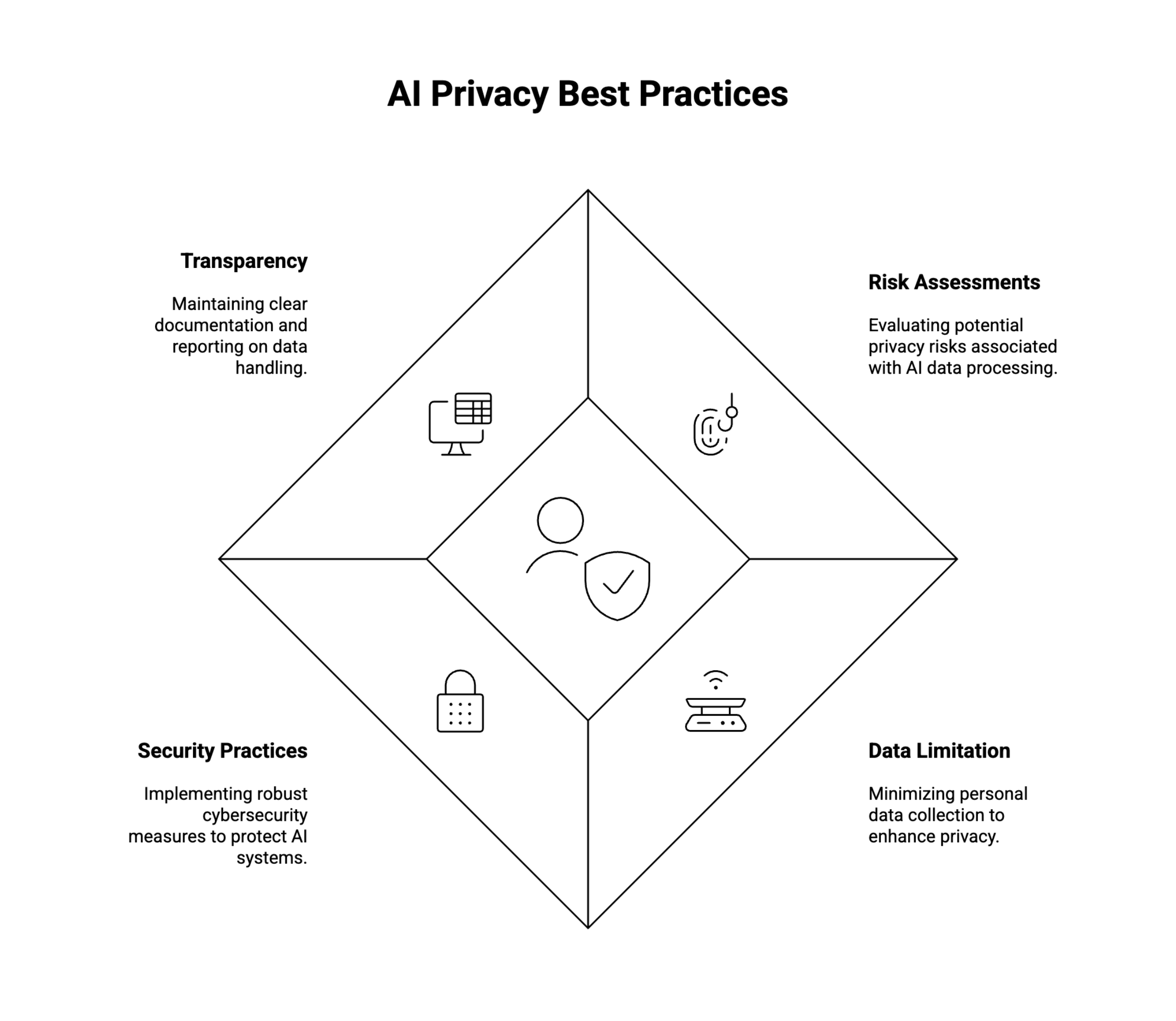

AI Privacy Best Practices

Conduct Risk Assessments

Organizations deploying AI technologies should routinely conduct privacy impact assessments to evaluate potential risks associated with data processing. These assessments should include an analysis of how algorithms make decisions and what personal data is involved.

Limit Data Collection

Avoid over-collection by designing AI systems that function effectively with minimal personal data. Use synthetic data or anonymized datasets where possible to train models while preserving individual privacy.
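
One common way to sanity-check an "anonymized" dataset is k-anonymity, which the article does not name but which fits here: every combination of quasi-identifiers should be shared by at least k records. The sketch below is a minimal check under that assumption, and the column names and helper functions are hypothetical.

```python
from collections import Counter


def smallest_group_size(rows: list, quasi_identifiers: list) -> int:
    """Return the size of the smallest group sharing the same quasi-identifier values."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    # An empty dataset has no groups; report 0 so callers treat it as unverified.
    return min(groups.values()) if groups else 0


def is_k_anonymous(rows: list, quasi_identifiers: list, k: int) -> bool:
    """True when every quasi-identifier combination appears at least k times."""
    return smallest_group_size(rows, quasi_identifiers) >= k


if __name__ == "__main__":
    rows = [
        {"age_band": "30-39", "zip3": "941", "diagnosis": "A"},
        {"age_band": "30-39", "zip3": "941", "diagnosis": "B"},
        {"age_band": "40-49", "zip3": "100", "diagnosis": "A"},
    ]
    print(is_k_anonymous(rows, ["age_band", "zip3"], k=2))
```

A failing check points to records that stand out, which can then be generalized further (for example, wider age bands) or removed before training. Passing k-anonymity alone does not rule out every re-identification risk.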

Follow Security Best Practices

Incorporate strong cybersecurity practices, including intrusion detection, continuous monitoring, and endpoint protection. AI systems should be hardened against threats such as model leakage, adversarial manipulation, and insider risk.

Report on Data Collection and Storage

Maintain detailed documentation of what data is collected, how it’s stored, and who has access. Regular audits and reports are crucial for internal governance and regulatory compliance. Transparency is especially important when AI systems interact with sensitive sectors like healthcare, finance, and law enforcement.

Conclusion: Building Trust Through Responsible AI Privacy

As artificial intelligence continues to permeate daily life—from healthcare diagnostics and automated chatbots to facial recognition and generative AI—the need for robust AI privacy frameworks has never been greater. The risks of AI, including misuse of personal data, unauthorized surveillance, and identity theft, demand comprehensive safeguards, regulatory compliance, and ethical design.

Protecting individual privacy in the age of AI means aligning technological advancements with privacy laws, ensuring accountability through audits and assessments, and empowering consumers with greater control over their data. Whether developing AI tools, deploying machine learning models, or managing user-facing outputs, organizations must prioritize privacy by design and default.

By embedding privacy protection into AI development, companies can build trust, reduce legal exposure, and uphold the rights of individuals across global jurisdictions.

About WitnessAI

WitnessAI is the confidence layer for enterprise AI, providing the unified platform to observe, control, and protect all AI activity. We govern your entire workforce, human employees and AI agents alike, with network-level visibility and intent-based controls. We deliver runtime security for models, applications, and agents. Our single-tenant architecture ensures data sovereignty and compliance. Learn more at witness.ai.