As AI systems—particularly those powered by machine learning (ML) and large language models (LLMs)—increasingly drive decision-making across industries, the ability to monitor, debug, and optimize these models in real time has become critical. This is where AI observability comes into play.

More than just a buzzword, AI observability is a foundational capability for ensuring AI systems perform reliably, ethically, and efficiently throughout their lifecycle. It enables teams to proactively address issues such as model drift, performance degradation, and unexpected outputs—while also supporting responsible AI practices.

What is AI Observability?

AI observability refers to the end-to-end capability to monitor, analyze, and understand the internal states, behaviors, and outputs of AI-powered applications. It extends the principles of traditional software observability—focused on logs, metrics, and traces—to encompass the unique characteristics and challenges of AI and ML models.

In an AI context, observability means being able to answer key questions such as:

- Is the model performing as expected?

- Has the model behavior changed due to new or shifting input data?

- Are there anomalies or signs of hallucination in the responses?

- How are LLMs using tokens, and what does that imply for cost and latency?

- Is the AI system upholding transparency, fairness, and responsible AI guidelines?

By leveraging telemetry, instrumentation, and real-time analytics, AI observability helps teams detect issues early, reduce downtime, and maintain high-performance AI workflows.

What Are the Pillars of AI Observability?

A comprehensive AI observability solution is built on the following six pillars:

1. Data and Metadata Monitoring

Monitoring the input datasets, embeddings, and feature pipelines used in model training and inference is critical for ensuring data quality and pipeline consistency. Observability platforms track transformations, lineage, and metadata throughout the AI lifecycle.
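As a minimal illustration, the Python sketch below runs a batch-level data-quality check on inference inputs. The schema, field names, and 5% null-rate threshold are hypothetical choices. A real platform would typically record lineage and metadata alongside checks like these.

```python
from typing import Any, Dict, List

# Hypothetical schema for one inference-time feature record.
EXPECTED_SCHEMA = {"user_id": str, "amount": float, "country": str}


def check_batch(records: List[Dict[str, Any]], max_null_rate: float = 0.05) -> List[str]:
    """Return human-readable data-quality issues found in a batch of records."""
    if not records:
        return ["empty batch"]
    issues: List[str] = []
    n = len(records)
    for field, expected_type in EXPECTED_SCHEMA.items():
        values = [r.get(field) for r in records]
        # Share of records where the field is missing or None.
        null_rate = sum(v is None for v in values) / n
        if null_rate > max_null_rate:
            issues.append(f"{field}: null rate {null_rate:.0%} exceeds {max_null_rate:.0%}")
        # Count non-null values whose type does not match the schema.
        wrong_type = sum(v is not None and not isinstance(v, expected_type) for v in values)
        if wrong_type:
            issues.append(f"{field}: {wrong_type} value(s) not of type {expected_type.__name__}")
    # Fields present in the data but absent from the schema may signal an upstream change.
    unexpected = {key for r in records for key in r} - set(EXPECTED_SCHEMA)
    if unexpected:
        issues.append(f"unexpected fields: {sorted(unexpected)}")
    return issues


batch = [
    {"user_id": "u1", "amount": 12.5, "country": "US"},
    {"user_id": "u2", "amount": None, "country": "DE", "channel": "web"},
]
print(check_batch(batch))
```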

2. Model Performance Metrics

AI observability tools track both technical metrics (e.g., latency, token usage, throughput) and functional metrics (e.g., accuracy, BLEU scores, response quality) across ML models and LLMs.
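For example, the sketch below computes p50/p95 latency and throughput from per-request records. The `RequestRecord` fields are assumptions about what a tracing layer might capture. The nearest-rank method used here is only one of several common percentile definitions.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestRecord:
    start_s: float      # request start time, in seconds
    latency_ms: float   # end-to-end latency
    output_tokens: int  # tokens generated for this request


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile for p in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(records: list[RequestRecord]) -> dict[str, float]:
    latencies = [r.latency_ms for r in records]
    # Observation window: first request start to last request completion.
    window_s = max(r.start_s + r.latency_ms / 1000 for r in records) - min(r.start_s for r in records)
    return {
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "requests_per_s": len(records) / window_s,
        "output_tokens_per_s": sum(r.output_tokens for r in records) / window_s,
    }


records = [
    RequestRecord(0.0, 500, 50),
    RequestRecord(1.0, 1000, 100),
    RequestRecord(2.0, 1500, 150),
    RequestRecord(3.0, 1000, 100),
]
print(summarize(records))
```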

3. Drift and Anomaly Detection

AI systems must detect and respond to data drift, concept drift, and unexpected behavioral shifts. Advanced anomaly detection methods—both rule-based and ML-based—help teams identify deviations from expected model behavior.
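One widely used rule-based signal for data drift in a single numeric feature is the Population Stability Index (PSI). PSI is the sum over histogram bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the baseline and current shares of each bin. The minimal sketch below assumes equal-width bins derived from the baseline sample. The commonly quoted cutoffs (below 0.1 stable, 0.1 to 0.25 moderate shift, above 0.25 significant shift) are rules of thumb, not a standard.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline sample and a current sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to width 1 if the baseline is constant

    def shares(sample: list[float]) -> list[float]:
        counts = [0] * bins
        for x in sample:
            # Equal-width bins from the baseline range; out-of-range values go to the edge bins.
            idx = min(max(int((x - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(sample), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]       # e.g. last month's feature values
current = [0.5 + i / 200 for i in range(100)]  # this week's values, shifted upward
print(round(psi(baseline, baseline), 3), round(psi(baseline, current), 3))
```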

4. Root Cause Analysis and Debugging

When model outputs degrade, root cause analysis helps trace issues back to upstream components, data changes, or pipeline bottlenecks. Integrated debugging tools streamline investigation and remediation.
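Root cause analysis often starts from a distributed trace. The sketch below uses a hypothetical, library-free `Span` type, which is not the OpenTelemetry API. It walks a trace tree and returns the path to the deepest failing span. That span is usually closer to where an error originated than the top-level request that surfaced it.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Span:
    """Hypothetical, simplified span: a named unit of work with child spans."""
    name: str
    duration_ms: float
    error: bool = False
    children: list["Span"] = field(default_factory=list)


def error_origin(span: Span, path: tuple[str, ...] = ()) -> Optional[tuple[str, ...]]:
    """Depth-first search returning the path to the deepest failing span, or None."""
    path = path + (span.name,)
    # Check children first so a failing descendant wins over its failing ancestors.
    for child in span.children:
        found = error_origin(child, path)
        if found is not None:
            return found
    return path if span.error else None


trace = Span("handle_request", 1200, error=True, children=[
    Span("retrieve_documents", 300),
    Span("llm_call", 850, error=True, children=[Span("provider_http_post", 840, error=True)]),
])
print(" -> ".join(error_origin(trace)))
```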

5. Real-Time Dashboards and Alerting

Dynamic dashboards allow AI teams to visualize system health and model performance. Alerts can be configured based on custom thresholds, metadata, or latency spikes, with smart correlation reducing noise and false positives.

6. Responsible AI and Governance

Observability is also key to enforcing responsible AI practices. Tools can audit for bias, track explainability metrics, and help ensure outputs adhere to regulatory frameworks (e.g., GDPR, the EU AI Act).
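As one example of a bias audit, the sketch below computes the demographic parity difference. This is the gap between the highest and lowest positive-prediction rate across groups. It is only one of several fairness metrics, and the right metric depends on the use case and the applicable regulation. The group labels in the usage example are placeholders.

```python
from collections import defaultdict


def selection_rates(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive predictions (1) within each group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions: list[int], groups: list[str]) -> float:
    """Largest gap in positive-prediction rate between any two groups (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


preds = [1, 1, 0, 0, 1, 0, 0, 0]
group = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(selection_rates(preds, group), demographic_parity_difference(preds, group))
```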

What Are the Use Cases for AI Observability?

AI observability enables several real-world use cases that support operational reliability, cost optimization, and ethical governance. Below are some of the most critical applications.

Token Usage Monitoring

LLMs and RAG-based systems incur costs and latency based on token consumption. Observability platforms track token counts, prompt/response length, and trends across usage to inform pricing models and efficiency optimizations.
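A minimal cost-tracking sketch follows. The model name and per-1,000-token prices are hypothetical placeholders, since actual pricing depends on the provider, model, and contract. Input and output tokens are tracked separately because many providers price them differently.

```python
from dataclasses import dataclass

# Hypothetical USD prices per 1,000 tokens; real prices vary by provider and model.
PRICE_PER_1K_TOKENS = {"example-model": {"input": 0.0005, "output": 0.0015}}


@dataclass
class Usage:
    model: str
    input_tokens: int   # prompt tokens, including any retrieved RAG context
    output_tokens: int  # completion tokens


def cost_usd(u: Usage) -> float:
    price = PRICE_PER_1K_TOKENS[u.model]
    return (u.input_tokens * price["input"] + u.output_tokens * price["output"]) / 1000


def usage_summary(usages: list[Usage]) -> dict[str, float]:
    n = len(usages)
    return {
        "total_cost_usd": sum(cost_usd(u) for u in usages),
        "avg_input_tokens": sum(u.input_tokens for u in usages) / n,
        "avg_output_tokens": sum(u.output_tokens for u in usages) / n,
    }


calls = [Usage("example-model", 1000, 2000), Usage("example-model", 3000, 0)]
print(usage_summary(calls))
```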

Model Drift Detection

Models may degrade over time due to data drift or label drift. AI observability continuously compares current outputs with historical baselines, surfacing anomalies and triggering retraining workflows when necessary.
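Comparing against a baseline can be as simple as the rolling-accuracy check sketched below. It assumes ground-truth labels eventually become available, often with a delay. The class name, tolerance, and window size are illustrative. A `True` from `needs_retraining()` is where a retraining workflow could be triggered.

```python
from collections import deque


class AccuracyMonitor:
    """Flags degradation when rolling accuracy falls below baseline minus a tolerance."""

    def __init__(self, baseline_accuracy: float, tolerance: float = 0.05, window: int = 500):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.outcomes: deque = deque(maxlen=window)  # True = prediction matched label

    def record(self, prediction, label) -> None:
        self.outcomes.append(prediction == label)

    def rolling_accuracy(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else float("nan")

    def needs_retraining(self) -> bool:
        # Only judge a full window, to avoid reacting to a handful of early results.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance


monitor = AccuracyMonitor(baseline_accuracy=0.90, tolerance=0.05, window=10)
for pred, label in [(1, 1)] * 9 + [(1, 0)]:
    monitor.record(pred, label)
print(monitor.rolling_accuracy(), monitor.needs_retraining())
```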

Response Quality Monitoring

Observability solutions can measure and flag quality issues, including:

- Incomplete or incorrect answers

- Repetitive or verbose outputs

- Hallucinations or fabricated content

- Toxic, biased, or unsafe responses

This is especially important for GenAI and chatbot deployments in regulated industries.
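Some of these checks can be approximated with cheap heuristics. The sketch below flags empty, overly long, and repetitive responses using word counts and repeated word trigrams. The thresholds are arbitrary assumptions. Detecting hallucinations, toxicity, or bias generally requires reference data, trained classifiers, or model-based evaluation, and this sketch does not attempt any of those.

```python
import re


def quality_flags(response: str, max_words: int = 300, max_repeat_ratio: float = 0.3) -> list[str]:
    """Cheap heuristic flags for a single model response."""
    words = re.findall(r"\w+", response.lower())
    if not words:
        return ["empty"]
    flags = []
    if len(words) > max_words:
        flags.append("verbose")
    # Share of word trigrams that repeat an earlier trigram.
    trigrams = [tuple(words[i:i + 3]) for i in range(len(words) - 2)]
    if trigrams and 1 - len(set(trigrams)) / len(trigrams) > max_repeat_ratio:
        flags.append("repetitive")
    return flags


print(quality_flags("Buy now! " * 20))
```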

Responsible AI Monitoring

AI observability supports ethical use through:

- Bias detection in outputs

- Explainability tracking using tools like SHAP or LIME

- Auditable logging for regulatory reporting

- Monitoring of sensitive data exposure in outputs
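For sensitive data exposure, a first line of defense is pattern-based detection and redaction of model outputs before they are logged or displayed. The two regular expressions below match email addresses and the US Social Security number format. They are illustrative only and will miss many forms of PII.

```python
import re

# Illustrative patterns only; production PII detection needs much broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matches with a [LABEL] placeholder and count hits per label."""
    counts: dict[str, int] = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts


print(redact("Contact jane.doe@example.com, SSN 123-45-6789."))
```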

Automated Anomaly Detection

Modern observability platforms leverage ML-powered anomaly detection to automatically identify deviations across telemetry streams without relying on static thresholds.
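A rolling z-score is a simple statistical stand-in for the ML-based detectors mentioned above. Instead of a fixed threshold, each new value is compared with the mean and standard deviation of a recent window. The window size, minimum history, and 3-sigma cutoff below are assumptions to tune per signal.

```python
import statistics
from collections import deque


class RollingZScoreDetector:
    """Flags values far from the recent mean, measured in recent standard deviations."""

    def __init__(self, window: int = 60, threshold: float = 3.0, min_points: int = 10):
        self.values: deque = deque(maxlen=window)
        self.threshold = threshold
        self.min_points = min_points

    def observe(self, x: float) -> bool:
        """Return True if x is anomalous relative to the current window, then add x to it."""
        anomalous = False
        if len(self.values) >= self.min_points:
            mean = statistics.fmean(self.values)
            std = statistics.pstdev(self.values)
            anomalous = std > 0 and abs(x - mean) / std > self.threshold
        self.values.append(x)
        return anomalous


detector = RollingZScoreDetector(window=20, threshold=3.0, min_points=10)
latencies_ms = [100.0, 102.0] * 10 + [150.0]
print([detector.observe(v) for v in latencies_ms][-3:])
```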

Alerting Correlation and Noise Reduction

Traditional alerting systems often produce excessive notifications. AI observability uses correlation engines to suppress duplicate alerts, cluster related issues, and notify only when business-impacting thresholds are crossed.
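A minimal form of alert correlation groups alerts from the same service that fire within a short time window into one incident. Responders then receive one notification instead of several. The `Alert` fields and the five-minute window in this sketch are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    service: str
    signal: str          # e.g. "latency_p95", "error_rate"
    timestamp_s: float


def correlate(alerts: list[Alert], window_s: float = 300.0) -> list[list[Alert]]:
    """Group alerts per service; alerts within window_s of a group's first alert join that group."""
    incidents: list[list[Alert]] = []
    open_incident: dict[str, int] = {}  # service -> index of its most recent incident
    for alert in sorted(alerts, key=lambda a: a.timestamp_s):
        idx = open_incident.get(alert.service)
        if idx is not None and alert.timestamp_s - incidents[idx][0].timestamp_s <= window_s:
            incidents[idx].append(alert)
        else:
            open_incident[alert.service] = len(incidents)
            incidents.append([alert])
    return incidents


alerts = [
    Alert("checkout", "latency_p95", 0),
    Alert("checkout", "error_rate", 60),
    Alert("search", "latency_p95", 90),
    Alert("checkout", "latency_p95", 600),
]
for incident in correlate(alerts):
    # Notify once per incident rather than once per alert.
    print(incident[0].service, [a.signal for a in incident])
```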

How Does AI Observability Improve Model Performance and Reliability?

The operational value of AI observability is significant. When implemented effectively, it can transform how teams build, scale, and maintain AI applications. Here’s how:

Optimize Model Performance

Observability tracks latency, response times, and pipeline bottlenecks—allowing engineering teams to proactively tune performance and avoid service degradation.

Reduce Downtime and Debug Faster

With end-to-end traceability and granular logging, observability tools reduce MTTR (mean time to resolution) by surfacing the root cause of issues such as infrastructure failures, model regressions, or API errors.

Improve User Experience

Tracking metrics like response consistency, language fluency, and personalization quality helps teams improve the final user experience—critical for customer-facing GenAI apps.

Drive Continuous Improvement

Through longitudinal tracking of model performance and drift metrics, AI teams can set up automated retraining or recalibration pipelines that maintain or improve accuracy over time.

Support Governance and Auditability

Observability provides a record of model behavior, data flows, and system decisions—essential for regulatory audits, compliance reporting, and internal reviews.

What Are the Challenges of AI Observability?

Despite its importance, organizations face several hurdles in implementing effective AI observability:

Data Overload

AI systems generate enormous volumes of data—including structured metrics, unstructured outputs, and metadata. Storing, processing, and querying this data at scale requires careful instrumentation and cost management.
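One common cost-control technique is sampling telemetry. The sketch below uses deterministic, hash-based sampling on a trace ID, so every component makes the same keep/drop decision for a given trace. Traces that contain errors are always kept. The function names and the error-retention policy are assumptions for illustration.

```python
import hashlib


def keep_trace(trace_id: str, sample_rate: float) -> bool:
    """Deterministic head sampling: the same trace_id always gets the same decision."""
    digest = hashlib.sha256(trace_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big")  # roughly uniform in [0, 2**64)
    return bucket < sample_rate * 2**64


def should_export(trace_id: str, has_error: bool, sample_rate: float = 0.1) -> bool:
    # Hypothetical policy: always keep error traces, sample the rest.
    return has_error or keep_trace(trace_id, sample_rate)


kept = sum(should_export(f"trace-{i}", has_error=False) for i in range(10_000))
print(f"kept {kept} of 10000 healthy traces")
```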

Tool Fragmentation

Many observability tools focus on specific components (e.g., infrastructure vs. models vs. pipelines). Integrating these into a unified platform requires orchestration and custom development.

Lack of Standardization

The AI field still lacks widely agreed-upon standards for performance and observability metrics, which makes benchmarking difficult and leaves tooling interoperability inconsistent.

Real-Time Requirements

Critical systems require real-time monitoring and alerting, but processing and analyzing high-frequency telemetry can introduce latency and compute overhead.

Explainability and Interpretability

Tracking raw output alone isn’t enough—teams need explainability mechanisms to understand why a model made a decision. Building this into observability pipelines is non-trivial.

Security and Privacy

Observability must not compromise sensitive user data or PII. Proper access controls, data masking, and privacy engineering are essential, especially when exporting observability data to third-party tools.

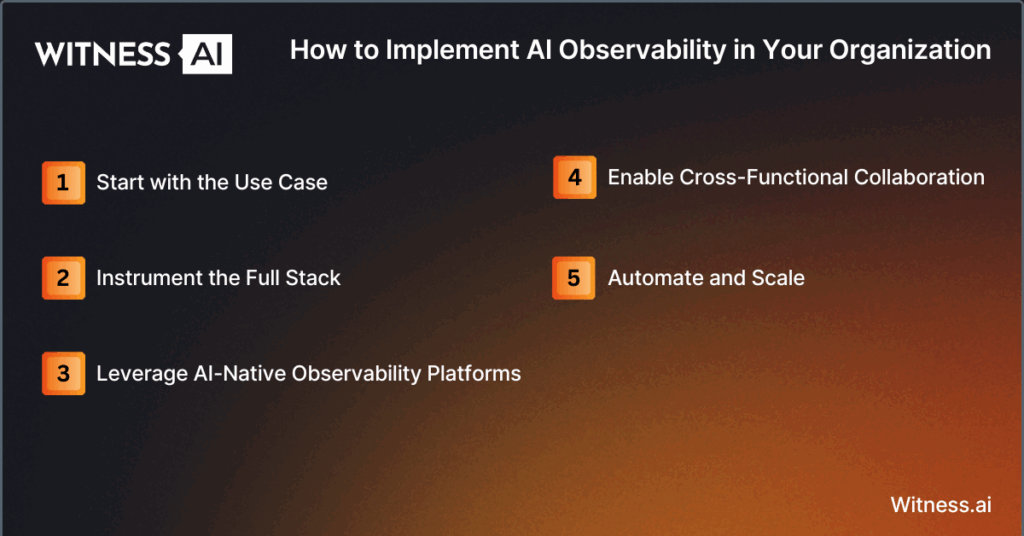

How to Implement AI Observability in Your Organization

Building an effective AI observability practice involves a combination of tooling, culture, and process. Here are best practices to follow:

- Start with the Use Case

Identify critical AI use cases (e.g., chatbots, fraud detection, recommendation systems) and define KPIs and SLOs to monitor.

- Instrument the Full Stack

Use OpenTelemetry and other open-source libraries to gather observability data across models, pipelines, APIs, and infrastructure.

- Leverage AI-Native Observability Platforms

Adopt platforms designed specifically for LLM observability and ML monitoring to handle model-specific requirements like drift, bias, and response evaluation.

- Enable Cross-Functional Collaboration

Involve data scientists, MLOps engineers, DevOps teams, and AI governance leads to ensure observability insights are actionable and integrated across the organization.

- Automate and Scale

Implement automated workflows for anomaly response, retraining, and auditing. Design solutions that are scalable across growing workloads and models.

Conclusion

AI observability is essential to building AI-driven systems that are high-performing, transparent, resilient, secure, and aligned with human values. As AI becomes more embedded in real-time decision-making, business automation, and customer-facing applications, the ability to observe, understand, and govern these systems is no longer optional.

By investing in tools, integrating end-to-end instrumentation, and aligning observability with responsible AI frameworks, organizations can ensure their AI models deliver accurate, fair, and auditable outcomes—at scale.

About WitnessAI

WitnessAI enables safe and effective adoption of enterprise AI, through security and governance guardrails for public and private LLMs. The WitnessAI Secure AI Enablement Platform provides visibility of employee AI use, control of that use via AI-oriented policy, and protection of that use via data and topic security. Learn more at witness.ai.