The increasing reliance on artificial intelligence (AI) across industries, from finance and healthcare to logistics and defense, has made AI data security a critical concern. As organizations use AI to automate decision-making, enhance workflows, and drive innovation, they also open new attack surfaces and take on security risks that traditional defenses were not designed to address.

AI systems are only as secure as the data they ingest and the safeguards put in place throughout their lifecycle. Whether built on proprietary datasets or using public genAI models, AI-driven systems require a robust, comprehensive security strategy that addresses data privacy, model integrity, and real-time threat detection.

What is AI Data Security?

AI data security refers to the comprehensive set of practices, technologies, and controls aimed at protecting the data, models, and outputs associated with AI systems. It extends beyond traditional cybersecurity by focusing specifically on the unique vulnerabilities introduced by AI technologies, including:

- The vast amount of sensitive data used during model training

- The complexity of machine learning algorithms

- The unpredictability of AI-powered outputs

- The use of third-party providers and models (e.g., open-source or cloud-hosted LLMs)

Ultimately, AI data security is about ensuring the confidentiality, integrity, and availability of AI systems while maintaining compliance, data governance, and ethical use standards.

Why is AI Data Security Necessary?

The use of AI for mission-critical applications makes it an attractive target for cybercriminals and nation-state adversaries. With AI’s capabilities expanding into highly regulated areas like finance, healthcare, and defense, the security posture of an organization depends heavily on its ability to protect AI infrastructure and data pipelines.

Key Drivers for AI Data Security:

- Protection of Sensitive Information: AI often processes personal data, proprietary research, financial transactions, and other regulated information.

- Regulatory Compliance: Frameworks like GDPR, HIPAA, and emerging AI regulations (e.g., the EU AI Act) require strict data protection and transparency.

- Supply Chain Risks: Third-party AI tools, models, or datasets can introduce risks from providers that lack adequate controls.

- Evolving Threats: Traditional perimeter defenses may not detect AI-specific attacks, such as data poisoning or model inversion.

- Reputation and Trust: A single data breach involving an AI system can undermine trust in both the model and the organization behind it.

What Are the Data Security Concerns of AI?

AI systems face an evolving landscape of security threats that target both their data and underlying architecture. Below are four of the most pressing threats:

1. Data Poisoning

Data poisoning is the deliberate manipulation of training datasets to corrupt the behavior of an AI model. This can degrade accuracy, introduce backdoors, or cause harmful behaviors in production.

- Example: In an e-commerce fraud detection model, poisoned data could teach the model to ignore certain fraudulent transaction patterns.

Data poisoning attacks often occur upstream, making them difficult to detect during initial model training unless rigorous validation and supply chain security are in place.
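One part of the validation described above can be sketched as a checksum comparison: record a SHA-256 digest for each training file when the dataset is approved, then verify the files before every training run. The function names and the manifest format (file name mapped to hex digest) are assumptions for illustration. This only catches files altered after approval; it cannot detect data that was already poisoned when the manifest was created.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_tampered_files(data_dir: Path, manifest: dict[str, str]) -> list[str]:
    """Compare each file listed in the manifest against its recorded digest.

    Returns the sorted names of files that are missing or whose content
    no longer matches the digest stored at approval time.
    """
    tampered = []
    for name, expected in manifest.items():
        path = data_dir / name
        if not path.is_file() or sha256_of(path) != expected:
            tampered.append(name)
    return sorted(tampered)
```

A training pipeline could refuse to start whenever `find_tampered_files` returns a non-empty list.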

2. Model Inversion Attacks

In model inversion, attackers use repeated inputs to infer information about the training data, potentially reconstructing sensitive information such as user identities or private health records.

- Risk Amplifier: Models that overfit memorize more of their training data, which makes it easier to reconstruct training inputs from model outputs.

This risk is particularly high in genAI systems and large language models (LLMs) that interact with external users.
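Because inversion relies on the detail an attacker can read from repeated outputs, one commonly discussed mitigation is to return less detail. The sketch below is an illustration under that assumption, not a complete defense: it returns only the top label with a coarsely rounded confidence instead of the full probability vector. The function name and output shape are hypothetical.

```python
def harden_output(probabilities: dict, decimals: int = 1) -> dict:
    """Return only the top label with a coarsely rounded confidence.

    `probabilities` maps each class label to the model's probability.
    Dropping the other classes and rounding reduces the signal an
    attacker can collect per query.
    """
    label = max(probabilities, key=probabilities.get)
    return {"label": label, "confidence": round(probabilities[label], decimals)}
```

Rounding trades some usefulness for privacy, so the number of decimals is a per-application decision.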

3. Adversarial Attacks

These attacks involve modifying input data in subtle ways that are imperceptible to humans but cause the AI model to misclassify or misbehave.

- Example: In self-driving cars, a slightly altered stop sign image can cause the AI to read it as a yield sign, potentially leading to accidents.

Adversarial attacks can exploit AI tools used in facial recognition, natural language processing, or image classification, bypassing normal security controls.
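To make the mechanism concrete, here is a minimal sketch of the fast gradient sign method (FGSM) against a toy logistic regression classifier. The weights, input, and epsilon are made-up values chosen for illustration; real attacks target far larger models and use much smaller perturbations per feature.

```python
import math


def predict(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def fgsm_perturb(weights, bias, x, label, epsilon):
    """Shift each feature by epsilon in the direction that raises the loss.

    For logistic regression with cross-entropy loss, the gradient of the
    loss with respect to the input is (p - label) * weights.
    """
    p = predict(weights, bias, x)
    gradient = [(p - label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, gradient)]


# Hypothetical model and input: the clean input is classified as class 1.
weights, bias = [2.0, -3.0, 1.0], 0.0
x = [0.3, 0.1, 0.2]
x_adv = fgsm_perturb(weights, bias, x, label=1, epsilon=0.2)
```

With these values, no feature moves by more than 0.2, yet the predicted class for `x_adv` flips from 1 to 0.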

4. Automated Malware

Threat actors are increasingly using AI-driven techniques to create adaptive, automated malware capable of bypassing antivirus tools and threat detection systems.

- Concern: Such malware can learn from its environment and mutate in real time, so defenders may need AI-powered cybersecurity solutions to counter it.

AI Data Security Best Practices

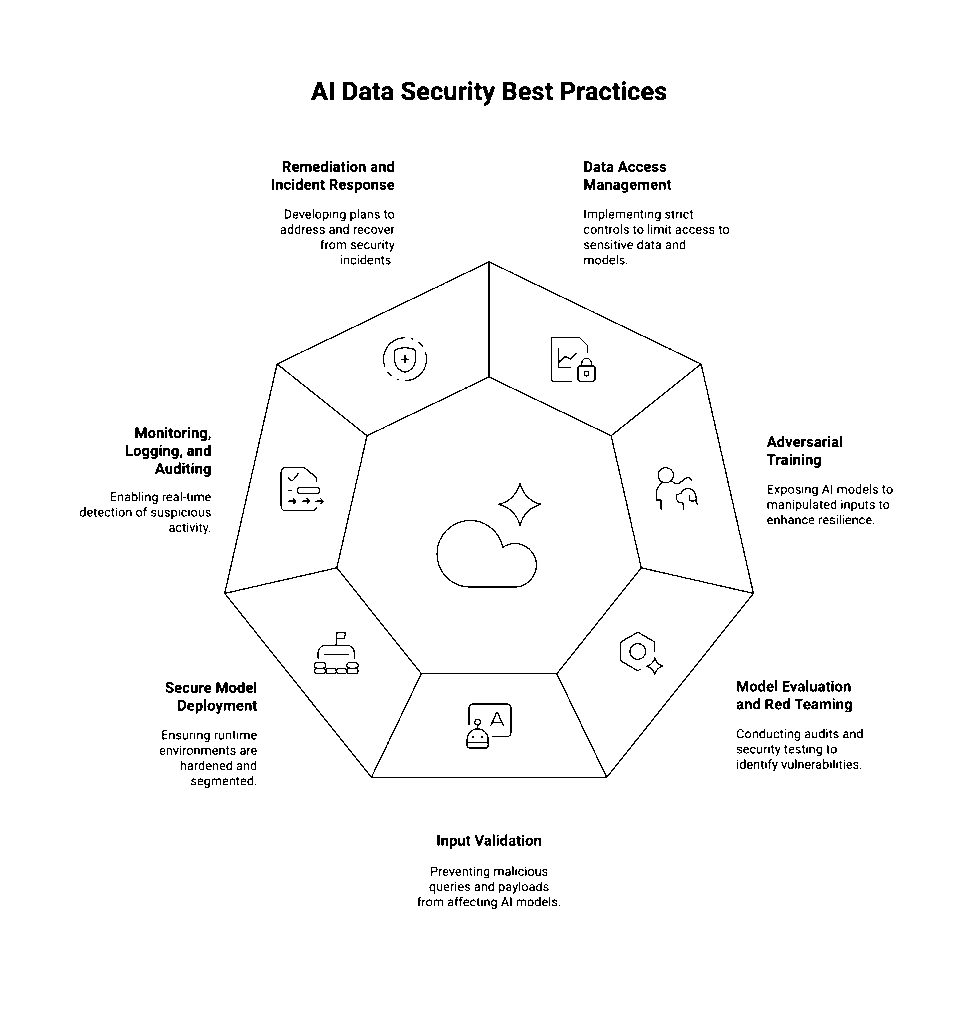

To maintain a strong security posture, organizations must embed AI data security measures throughout the lifecycle of AI systems—from model training to deployment and monitoring.

1. Data Access Management

Implement strict access controls to limit who can view, modify, or download sensitive datasets and models. Key measures include:

- Zero Trust Architecture

- Multi-factor Authentication (MFA)

- Least-privilege access

- Secure API keys and secrets management

This is especially important when automating processes that ingest or expose sensitive data across multiple platforms.
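As a rough illustration of how least privilege and MFA combine into a deny-by-default check, consider the sketch below. The roles, permission strings, and `User` type are hypothetical; a production system would delegate this to an identity provider or policy engine.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping; anything not listed is denied.
ROLE_PERMISSIONS = {
    "data_scientist": {"dataset:read", "model:read"},
    "ml_engineer": {"dataset:read", "model:read", "model:write"},
    "auditor": {"log:read"},
}


@dataclass(frozen=True)
class User:
    name: str
    role: str
    mfa_verified: bool


def is_allowed(user: User, permission: str) -> bool:
    """Deny by default: require MFA and an explicit grant for the role."""
    if not user.mfa_verified:
        return False
    return permission in ROLE_PERMISSIONS.get(user.role, set())
```

Unknown roles and unlisted permissions both fall through to a denial, which is the behavior least privilege calls for.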

2. Adversarial Training

Integrate adversarial training techniques that expose AI models to manipulated inputs during development, making them more resilient in production.

- Result: Increased robustness against adversarial attacks and fewer misclassifications of manipulated inputs in real-world conditions, sometimes at a small cost to accuracy on clean data.
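The idea can be sketched on a toy logistic regression model: at every step, generate a worst-case perturbed copy of each sample against the current weights, then train on both the clean and the perturbed version. The hyperparameters and helper names are assumptions chosen for illustration, and the FGSM-style perturbation stands in for whatever attack a team actually expects.

```python
import math


def predict(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def perturb(weights, bias, x, label, epsilon):
    """FGSM-style shift of x by epsilon per feature against the current model."""
    error = predict(weights, bias, x) - label
    return [xi + epsilon * ((error * w > 0) - (error * w < 0))
            for xi, w in zip(x, weights)]


def adversarial_train(samples, epsilon=0.5, lr=0.1, epochs=50):
    """Run SGD on each clean sample and on its perturbed copy."""
    weights, bias = [0.0] * len(samples[0][0]), 0.0
    for _ in range(epochs):
        for x, label in samples:
            x_adv = perturb(weights, bias, x, label, epsilon)
            for example in (x, x_adv):
                error = predict(weights, bias, example) - label
                weights = [w - lr * error * xi for w, xi in zip(weights, example)]
                bias -= lr * error
    return weights, bias
```

Here `samples` is a list of `(features, label)` pairs with labels 0 or 1. Larger values of `epsilon` buy robustness to larger perturbations but make the training task harder.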

3. Model Evaluation and Red Teaming

Conduct ongoing model audits, security testing, and AI red teaming exercises to identify weaknesses before exploitation.

- Use guidance such as NIST’s AI Risk Management Framework to structure evaluations

- Use tools that simulate real-world threat vectors, including prompt injection and data poisoning

4. Input Validation

Apply input validation mechanisms to prevent users from submitting malicious queries or payloads to AI models.

- Crucial for: Web-facing LLMs, chatbots, and other interactive AI applications

- Helps defend against: Prompt injection, cross-site scripting (XSS), and SQL injection
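A minimal validation layer might look like the sketch below. The length limit and the deny patterns are assumptions for illustration only: pattern matching catches crude attempts but is easy to evade, so it belongs alongside other controls (for example, parameterized queries for SQL and output encoding for XSS) rather than in place of them.

```python
import re
import unicodedata

MAX_PROMPT_CHARS = 4000  # assumed limit; tune per application

# Illustrative deny patterns only; real injection attempts vary widely.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"<\s*script\b", re.IGNORECASE),
]


def validate_prompt(raw: str) -> str:
    """Normalize a user prompt and raise ValueError if it fails basic checks."""
    text = unicodedata.normalize("NFKC", raw)
    # Drop control characters except newline and tab.
    text = "".join(
        ch for ch in text
        if ch in "\n\t" or unicodedata.category(ch) != "Cc"
    )
    text = text.strip()
    if not text:
        raise ValueError("empty prompt")
    if len(text) > MAX_PROMPT_CHARS:
        raise ValueError("prompt too long")
    for pattern in SUSPICIOUS_PATTERNS:
        if pattern.search(text):
            raise ValueError("prompt matches a blocked pattern")
    return text
```

The caller passes the returned, normalized text to the model and treats a `ValueError` as a rejected request.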

5. Secure Model Deployment

When deploying AI models, ensure the runtime environment is hardened and segmented.

- Use containerization and orchestration tools with secure configurations

- Encrypt data both in transit and at rest

- Leverage endpoint protection to monitor model behavior

6. Monitoring, Logging, and Auditing

Enable real-time monitoring, anomaly detection, and logging of model interactions to detect suspicious activity or unauthorized access.

- Store logs securely for forensic analysis and regulatory compliance

- Integrate alerts with security information and event management (SIEM) systems
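The sketch below shows two small building blocks under stated assumptions: an audit record that stores a digest of the prompt rather than the raw text (so logs do not become a second copy of sensitive data), and a sliding-window counter that flags unusually high query volume from one user. The field names, class name, and thresholds are hypothetical, and forwarding to a SIEM is left out.

```python
import hashlib
import json
import time
from collections import deque


def audit_record(user_id: str, prompt: str, response: str) -> str:
    """Build a JSON log line that stores a prompt digest instead of raw text."""
    record = {
        "ts": time.time(),
        "user": user_id,
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "prompt_chars": len(prompt),
        "response_chars": len(response),
    }
    return json.dumps(record, sort_keys=True)


class RateMonitor:
    """Flag a user who exceeds max_requests within a sliding time window."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._events = {}  # user_id -> deque of request timestamps

    def record(self, user_id: str, now: float) -> bool:
        """Record one request; return True if the user is over the limit."""
        events = self._events.setdefault(user_id, deque())
        events.append(now)
        # Discard timestamps that have fallen out of the window.
        while events and now - events[0] > self.window_seconds:
            events.popleft()
        return len(events) > self.max_requests
```

A burst of queries from a single account is one signal worth alerting on, since attacks that probe a model tend to rely on many repeated inputs.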

7. Remediation and Incident Response

Develop a dedicated remediation plan for AI-specific incidents. Include procedures for:

- Taking compromised models offline

- Rolling back to secure checkpoints

- Notifying stakeholders and regulators

- Conducting post-incident audits

A fast response can contain damage and minimize legal or operational consequences.
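The first two procedures above can be sketched as a small state machine. `ModelRegistry` is a hypothetical in-memory stand-in for whatever model registry or deployment tool an organization actually uses; the point is that quarantining a serving checkpoint either rolls back to the latest earlier checkpoint that is still trusted or takes the model offline.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ModelRegistry:
    """Hypothetical in-memory record of deployed checkpoints."""
    checkpoints: list = field(default_factory=list)  # oldest first
    quarantined: set = field(default_factory=set)
    active: Optional[str] = None

    def deploy(self, version: str) -> None:
        """Register a new checkpoint and make it the serving version."""
        self.checkpoints.append(version)
        self.active = version

    def quarantine(self, version: str) -> Optional[str]:
        """Mark a checkpoint as compromised and roll back if it is serving.

        Returns the version serving afterwards, or None if the model is
        offline because no earlier trusted checkpoint exists.
        """
        self.quarantined.add(version)
        if self.active == version:
            index = self.checkpoints.index(version)
            earlier = [v for v in self.checkpoints[:index]
                       if v not in self.quarantined]
            self.active = earlier[-1] if earlier else None
        return self.active
```

Keeping the quarantine set separate from the checkpoint history preserves the record needed for the post-incident audit.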

Real-Life Examples of AI Data Breaches

Several incidents highlight the consequences of weak AI data security:

Microsoft AI Leak (2023)

Microsoft AI researchers published an overly permissive Azure Storage shared access signature (SAS) token in a public GitHub repository of open-source AI models. The token exposed 38TB of internal data, including workstation backups, private keys, passwords, and internal Teams messages. The exposure, disclosed in 2023, led to widespread scrutiny of Microsoft’s security posture.

GPT Prompt Injection Exploits

Researchers demonstrated the ability to manipulate GPT-based applications via prompt injection, allowing access to confidential business logic, APIs, or internal tools—showcasing the need for input validation and access management.

Healthcare Model Inversion

AI models trained on anonymized patient data were found to be vulnerable to model inversion attacks, allowing attackers to reconstruct personal data like names and diagnoses from model outputs. This exposed gaps in data anonymization and training data governance.

The Role of AI Providers in Data Security

Third-party providers play a major role in AI security, especially when organizations use pre-trained models, open-source libraries, or cloud-based LLMs.

Best Practices for Working with Providers:

- Perform security due diligence on vendors

- Demand transparency around training data sources and model updates

- Include data protection clauses in service-level agreements (SLAs)

- Monitor for supply chain vulnerabilities stemming from compromised tools or data dependencies

Cloud-based AI tools should also be vetted for compliance with data privacy laws and internal policies.

AI-Driven Security Solutions

Just as AI introduces new threats, it also offers new capabilities to combat them. Organizations can now use AI-driven solutions to enhance their cybersecurity defenses.

Examples of AI-Driven Security Tools:

- Behavioral analytics for detecting insider threats

- Automated classification of data sensitivity

- Real-time threat detection using pattern recognition

- Intelligent access controls that adapt based on user behavior

The automation of threat response and detection using AI reduces response times and frees up security teams for more strategic initiatives.
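Commercial tools use far richer models, but the core of behavioral analytics can be illustrated with a simple statistical baseline: compare a user's current activity with their own history and flag large deviations. The function name, the three-standard-deviation threshold, and the choice of metric (for example, files downloaded per day) are assumptions for this sketch.

```python
import statistics


def is_anomalous(history, value, threshold=3.0):
    """Return True if value deviates from history by more than
    `threshold` sample standard deviations."""
    if len(history) < 2:
        return False  # not enough data to establish a baseline
    mean = statistics.fmean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return value != mean
    return abs(value - mean) / stdev > threshold
```

For a user who normally downloads about ten files a day, a day with fifty downloads would be flagged for review while a day with eleven would not.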

Conclusion: Securing AI in an Evolving Threat Landscape

AI data security is no longer optional—it is a foundational element of enterprise risk management and cybersecurity strategy. As organizations accelerate their adoption of AI-driven workflows and genAI technologies, the importance of securing the entire AI pipeline becomes clear.

From protecting sensitive information and securing model training, to monitoring AI behavior and addressing supply chain risks, a robust and proactive approach is essential. Organizations must prioritize security controls, enforce zero trust principles, and collaborate with trusted providers to ensure the safe use of AI in an increasingly automated world.

About WitnessAI

WitnessAI enables safe and effective adoption of enterprise AI through security and governance guardrails for public and private LLMs. The WitnessAI Secure AI Enablement Platform provides visibility of employee AI use, control of that use via AI-oriented policy, and protection of that use via data and topic security. Learn more at witness.ai.