Artificial intelligence (AI) technologies are transforming business operations, decision-making, and innovation across sectors. Yet as AI becomes increasingly embedded in high-impact use cases—such as healthcare diagnostics, financial risk assessments, and customer service automation—regulatory compliance has become a critical concern.

AI compliance ensures that organizations deploy and manage AI systems in a way that aligns with legal standards, ethical norms, and data protection frameworks.

This article offers a comprehensive overview of AI compliance: why it matters, its core principles, regulatory examples, common challenges, and how to develop a strong compliance framework. It also explores the growing relationship between AI privacy and compliance, and how technology can automate oversight in real time.

What Is AI Compliance?

AI compliance refers to the process of ensuring that AI systems—including machine learning models, generative AI tools, and decision-making algorithms—adhere to applicable laws, regulations, and ethical guidelines throughout their lifecycle.

This encompasses the development, deployment, monitoring, and retirement of AI applications.

Compliance in AI spans a wide range of domains:

- Data privacy and protection (e.g., GDPR, HIPAA, PCI DSS)

- Fairness and transparency in algorithmic decision-making

- Cybersecurity and access control to prevent data misuse

- Ethical AI development practices

- Sector-specific regulatory requirements in healthcare, finance, defense, and government

In short, AI compliance is not a single regulation—it’s a multidimensional discipline grounded in responsible AI governance, risk management, and legal adherence.

Why Is AI Compliance Needed?

Legal and Ethical AI Usage

Governments and regulatory bodies worldwide are introducing frameworks to manage risks such as AI misuse, algorithmic bias, and data exploitation. Compliance ensures that AI applications align with legal requirements and ethical standards, protecting personal data and human rights.

AI Regulations

AI regulations are emerging worldwide to ensure that artificial intelligence is developed and used responsibly. While approaches vary, most frameworks share goals of transparency, accountability, safety, and fairness.

- European Union: The EU AI Act is the world’s most comprehensive AI law, categorizing systems by risk level—from banned “unacceptable risk” uses (like social scoring) to “high-risk” applications that require strict governance, documentation, and human oversight.

- United States: The U.S. follows a sector-based approach, guided by the NIST AI Risk Management Framework, FTC guidance, and existing laws such as HIPAA and FCRA. The 2023 Executive Order on Safe, Secure, and Trustworthy AI directed federal agencies toward responsible AI adoption, but it was rescinded in January 2025.

- United Kingdom: The UK applies a pro-innovation, principle-driven model, empowering regulators to enforce safety, transparency, fairness, and accountability without creating new standalone laws.

- Asia-Pacific: Countries like Singapore, Japan, and China balance innovation with oversight through national AI governance frameworks and transparency requirements.

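- Canada and international standards: Canada’s proposed Artificial Intelligence and Data Act (AIDA), together with the OECD AI Principles and the ISO/IEC 42001 management-system standard, promotes human-centric, trustworthy AI.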


AI Ethics

AI ethics focuses on ensuring that artificial intelligence is developed and used in ways that align with human values, fairness, and societal well-being. It bridges the gap between innovation and responsibility, guiding organizations to use AI systems that are not only legal but also moral and trustworthy.

Core ethical principles include:

- Fairness: Prevent discrimination and algorithmic bias.

- Transparency: Make AI decisions explainable and auditable.

- Accountability: Define ownership for AI outcomes and ensure oversight.

- Privacy: Respect user data and consent throughout the AI lifecycle.

- Human-Centric Design: Ensure AI serves people, not the other way around.


Risk Mitigation

AI systems can amplify organizational risk—especially when they handle sensitive data, automate decision-making, or use opaque models. A strong compliance program mitigates these risks by identifying vulnerabilities and preventing:

- Biased or discriminatory algorithms

- Data leakage and privacy breaches

- Regulatory penalties and enforcement actions

- Operational disruptions from shadow AI usage


Building Trust

Trust is essential for AI adoption. AI compliance fosters transparency, accountability, and explainability, enabling customers, regulators, and partners to have confidence in AI-driven outcomes.

How to Be AI Compliant

Achieving AI compliance requires embedding governance and legal accountability throughout the AI lifecycle—from model training to deployment and continuous monitoring.

Key practices include:

- Conducting Data Protection Impact Assessments (DPIAs)

- Validating model performance, accuracy, and fairness

- Logging AI decisions for auditability and traceability

- Applying cybersecurity safeguards against tampering or model theft

- Documenting development assumptions, limitations, and intended use

A structured compliance program integrates legal, ethical, and operational requirements into every stage of AI use.
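As one illustration of the "logging AI decisions for auditability and traceability" practice above, the following is a minimal sketch of a tamper-evident decision log. It assumes an in-memory list and JSON-serializable inputs; the function names (`append_decision`, `verify_chain`) and the record fields are hypothetical, not part of any specific product or standard. Each entry stores the hash of the previous entry, so a later edit to any record breaks the chain.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional

GENESIS_HASH = "0" * 64  # assumed placeholder "previous hash" for the first entry


def _digest(entry: dict) -> str:
    """SHA-256 over a canonical (sorted-key) JSON encoding of the entry."""
    payload = json.dumps(entry, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_decision(log: list, model_id: str, inputs: dict, output: str,
                    reviewer: Optional[str] = None) -> dict:
    """Append one AI decision to a hash-chained audit log and return the entry."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_id": model_id,
        "inputs": inputs,
        "output": output,
        "reviewer": reviewer,  # human who reviewed or overrode the decision, if any
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    entry["hash"] = _digest(entry)  # digest is computed before "hash" is added
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Return True only if no entry was altered, removed, or reordered."""
    prev = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True
```

In practice such a log would be written to append-only storage rather than kept in memory, and the inputs would typically be minimized or pseudonymized before logging so the audit trail does not itself become a privacy risk.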

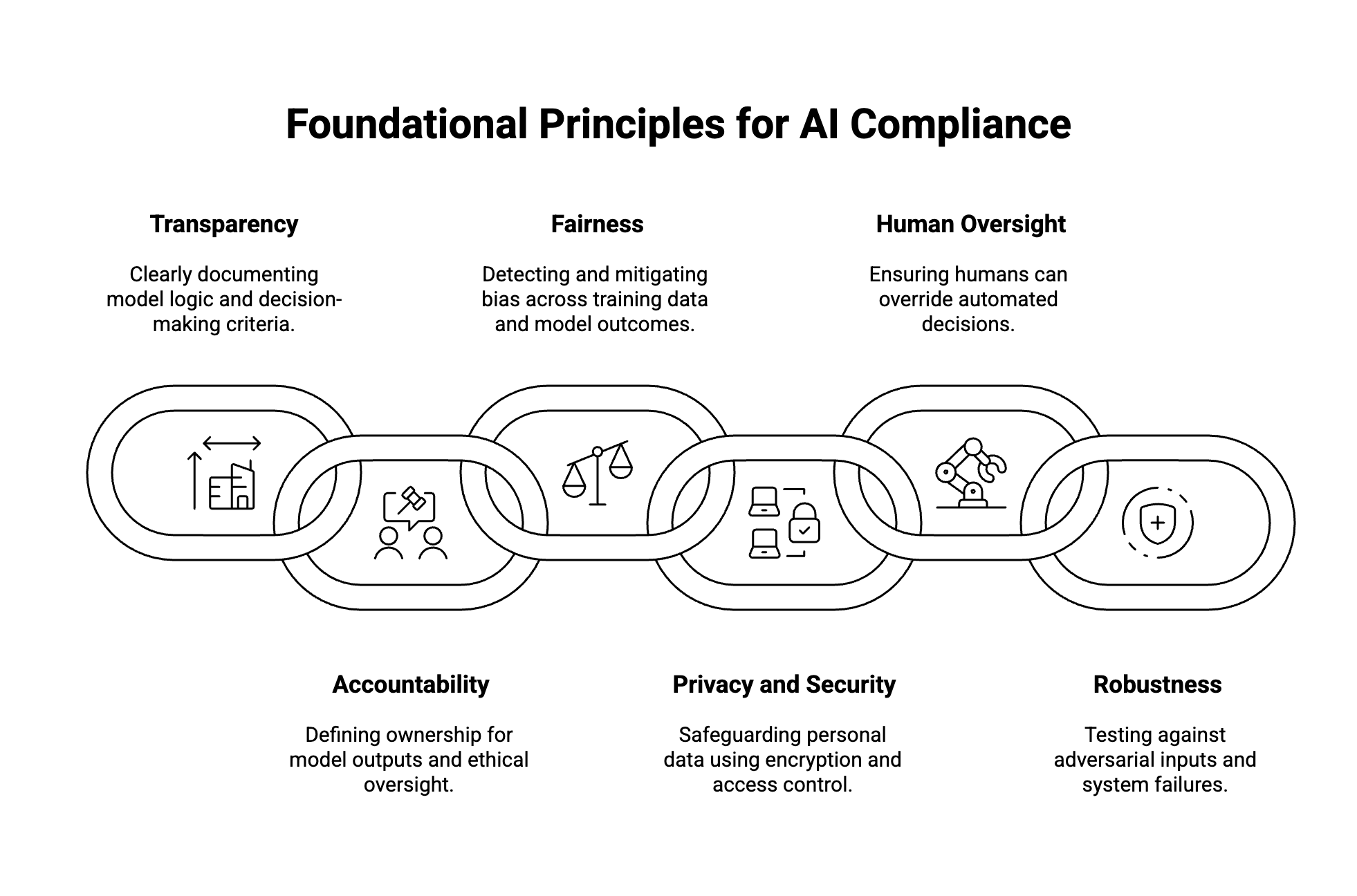

What Are Principles for Ensuring AI Compliance?

Organizations should adopt foundational principles that align with responsible AI development and regulatory expectations.

Core AI compliance principles include:

- Transparency: Clearly document model logic and decision-making criteria.

- Accountability: Define ownership for model outputs and ethical oversight.

- Fairness: Detect and mitigate bias across training data and model outcomes.

- Privacy and Security: Safeguard personal data using encryption, pseudonymization, and access control.

- Human Oversight: Ensure humans can override automated decisions.

- Robustness: Test against adversarial inputs, data drift, and system failures.

These principles form the foundation of any AI governance framework.
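To make the fairness principle more concrete, here is a minimal sketch of one common bias check: the disparate impact ratio, which compares the rate of favorable outcomes between groups. The function names are hypothetical, outcomes are assumed to be encoded as 1 (favorable) or 0 (unfavorable), and the right metric and threshold depend on the use case and jurisdiction.

```python
from collections import defaultdict


def selection_rates(outcomes, groups):
    """Share of favorable outcomes (encoded as 1) within each group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for outcome, group in zip(outcomes, groups):
        totals[group] += 1
        if outcome == 1:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(outcomes, groups):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(outcomes, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so no disparity
    return min(rates.values()) / highest


# Example: group "a" is approved 3 times out of 4, group "b" once out of 4.
outcomes = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
ratio = disparate_impact_ratio(outcomes, groups)
```

A ratio below 0.8 is often treated as a signal for further review, following the "four-fifths" rule of thumb used in U.S. employment selection guidance. That threshold is an assumption here, not a universal legal test, and a single metric cannot establish that a model is fair.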

What Are Examples of Compliance Standards in AI?

United States

While the U.S. lacks a single AI law, multiple frameworks define responsible practices:

- Executive Order on Safe, Secure, and Trustworthy AI (2023): Directed federal agencies to assess and mitigate AI-related risks; it was rescinded in January 2025.

- NIST AI Risk Management Framework (AI RMF): Provides voluntary guidelines for identifying, measuring, and managing AI risk.

- HIPAA & GLBA: Regulate how healthcare and financial data are handled, including when AI systems process that data.

- FTC Guidance on AI: Warns organizations against discriminatory, deceptive, or unfair use of algorithms.

European Union

The EU AI Act, which entered into force in August 2024 with obligations phasing in from 2025 onward, is the most comprehensive AI law globally. It classifies systems by risk:

- Unacceptable risk: e.g., social scoring — prohibited.

- High risk: e.g., biometric ID, credit scoring — subject to strict governance and conformity assessments.

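- Limited risk: e.g., chatbots and AI-generated content, subject to transparency and disclosure obligations.

- Minimal risk: e.g., spam filters, with no mandatory obligations beyond existing law.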

Complementing this is the GDPR, which enforces strict data protection rules, including transparency around automated decision-making and a lawful basis for processing personal data.

Other Jurisdictions

- United Kingdom: AI governance guided by the “pro-innovation” framework and ICO guidelines.

- Canada: Artificial Intelligence and Data Act (AIDA) under review.

- Singapore and Japan: Introduced national AI governance models balancing innovation and accountability.

AI Privacy: The Foundation of AI Compliance

AI privacy is a cornerstone of compliance, focusing on how AI systems collect, process, store, and infer information about individuals. Because AI often operates on vast datasets—including personal, behavioral, or biometric data—privacy compliance is both a legal and ethical obligation.

Core Elements of AI Privacy

- Data Minimization: Collect only the data necessary for the AI’s intended function.

- Informed Consent: Ensure individuals understand how their data is used in AI decision-making.

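- Anonymization and Pseudonymization: Use pseudonymization, keyed hashing, or differential privacy to reduce re-identification risk, keeping in mind that pseudonymized data generally still counts as personal data under the GDPR.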

- User Rights: Support rights to access, correct, or delete personal data processed by AI models.

- Data Localization: Adhere to regional data residency laws when transferring or processing data cross-border.
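Two of the elements above, data minimization and pseudonymization, can be sketched in a few lines. This is an illustrative example only: the field names, the allow-list approach, and the helper names are assumptions, and the secret key would need to live in a key management system rather than in code. A keyed hash (HMAC) is used instead of a plain hash because unkeyed hashes of low-entropy values such as emails can be reversed by guessing.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_and_pseudonymize(record: dict, allowed_fields: list,
                              id_fields: set, secret_key: bytes) -> dict:
    """Keep only allow-listed fields and pseudonymize the direct identifiers."""
    cleaned = {}
    for field in allowed_fields:
        if field not in record:
            continue  # missing fields are skipped, never invented
        value = record[field]
        if field in id_fields:
            cleaned[field] = pseudonymize(str(value), secret_key)
        else:
            cleaned[field] = value
    return cleaned


# Example with hypothetical fields: "ssn" is dropped, "email" is pseudonymized.
key = b"demo-key-not-for-production"
record = {"email": "ana@example.com", "age": 41, "ssn": "123-45-6789"}
training_row = minimize_and_pseudonymize(record, ["email", "age"], {"email"}, key)
```

Because the same key always yields the same pseudonym, records can still be joined across datasets, which is also why the output remains linkable to a person by anyone who holds the key.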

Privacy Risks in AI Systems

- Inference Attacks: Attackers can reconstruct or infer sensitive training data from model outputs or embeddings, for example through membership inference.

- Training Data Leakage: Poorly secured datasets expose personal identifiers.

- Shadow AI and Unvetted APIs: Employees using unauthorized tools can transmit sensitive data outside compliance boundaries.

Integrating Privacy-by-Design

Organizations should embed Privacy-by-Design principles directly into AI development pipelines—ensuring privacy protection is proactive, not reactive. Automated redaction, encryption, and access monitoring tools can enhance compliance while preserving model performance.
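The automated redaction mentioned above can be approximated with a small pre-processing step that runs before text reaches a model or an external API. The sketch below is deliberately simple and rests on assumptions: it covers only two illustrative patterns (email addresses and U.S. Social Security number formats), and the `redact` helper and placeholder labels are hypothetical. Production systems usually combine patterns like these with dedicated entity-recognition tooling.

```python
import re

# Illustrative patterns only; real deployments need a broader, tested set.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str):
    """Replace matches with a [LABEL] placeholder and count what was removed."""
    counts = {}
    for label, pattern in PATTERNS.items():
        text, replaced = pattern.subn(f"[{label}]", text)
        counts[label] = replaced
    return text, counts


prompt = "Contact ana@example.com, SSN 123-45-6789."
safe_prompt, removed = redact(prompt)
# safe_prompt is "Contact [EMAIL], SSN [SSN]."
```

Returning the counts alongside the redacted text makes it possible to log how often sensitive data was intercepted without logging the data itself.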


Do I Need AI Compliance?

If your organization develops, deploys, or integrates AI systems—especially in regulated industries—the answer is unequivocally yes.

You need AI compliance if you:

- Use AI for hiring, lending, diagnostics, or risk scoring

- Process personal, financial, or biometric data

- Operate in jurisdictions covered by GDPR, EU AI Act, CCPA, or similar regulations

- Integrate third-party AI APIs or models into workflows

- Deploy generative AI systems that influence public or internal decision-making

Failing to comply can lead to financial penalties, legal exposure, and reputational damage.

How Can Technology Improve AI Regulatory Compliance?

Modern AI compliance platforms automate governance across the entire model lifecycle:

- Continuous monitoring for drift, bias, and anomalies

- Automated audit trails and compliance documentation

- Data masking and access control for sensitive datasets

- Integration with CI/CD pipelines to enforce compliance gates before deployment

- Model lineage tracking to ensure traceability from data to decision
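To show how drift monitoring and a pre-deployment compliance gate might fit together, here is a minimal sketch. It computes the Population Stability Index (PSI), a widely used drift measure, for one numeric feature and then compares named metrics against limits. The function names, the equal-width binning, and the 0.2 limit are illustrative assumptions, not a description of any particular platform.

```python
import math


def population_stability_index(baseline, current, bins=10, eps=1e-6):
    """PSI between a baseline sample and a current sample of one numeric feature."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # eps keeps empty bins from causing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    base, cur = shares(baseline), shares(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


def failed_checks(metrics: dict, max_allowed: dict) -> list:
    """Names of metrics that are missing or above their limit; empty means pass."""
    return [name for name, limit in max_allowed.items()
            if metrics.get(name, float("inf")) > limit]


# Example: the current data is shifted well away from the training baseline.
baseline = [i / 100 for i in range(100)]
shifted = [v + 0.5 for v in baseline]
metrics = {"psi_income": population_stability_index(baseline, shifted)}
failures = failed_checks(metrics, {"psi_income": 0.2})
```

In a CI/CD pipeline, a non-empty `failures` list would typically cause the job to exit with a non-zero status so the deployment stops. Treating a missing metric as a failure is a deliberate choice here: a gate that passes when evidence is absent offers little assurance.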

Platforms like WitnessAI enhance compliance visibility, enabling security, governance, and policy enforcement for enterprise-scale AI systems.

What Are the Challenges in Achieving AI Compliance?

- Rapidly Evolving Regulations: Continuous updates from the EU, U.S., and international agencies require agile compliance tracking.

- Shadow AI Usage: Unmonitored AI tools create data governance blind spots.

- Complex Risk Management: Legacy methods struggle to evaluate black-box or generative AI systems.

- Third-Party Dependencies: Vendor models often lack transparency into training data or fairness metrics.

- AI Talent Shortages: Few professionals combine legal, ethical, and technical expertise in AI compliance.

Organizations need integrated governance programs and automated monitoring to overcome these barriers.

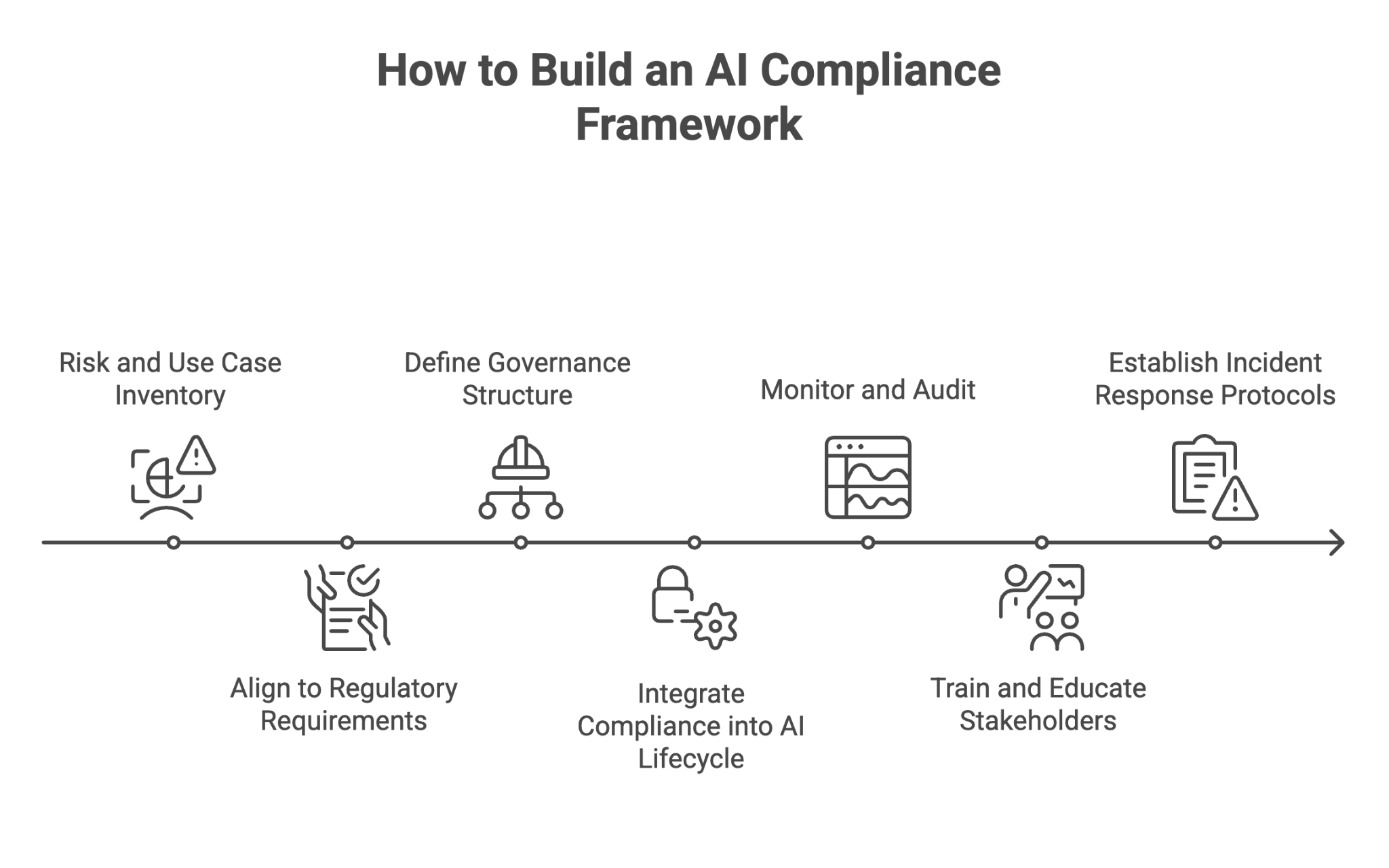

How to Develop an AI Compliance Framework

A mature AI compliance framework brings together governance, people, and automation:

- Risk and Use Case Inventory: Catalog AI use cases and classify them by sensitivity and risk level.

- Regulatory Mapping: Align each use case with applicable global and sector-specific standards.

- Governance Structure: Assign clear accountability to compliance, data privacy, and engineering teams.

- Lifecycle Integration: Embed compliance into model design, training, deployment, and decommissioning.

- Monitoring and Auditing: Continuously track metrics such as bias, data drift, and decision fairness.

- Training and Awareness: Educate employees on ethical AI use, legal implications, and shadow AI risks.

- Incident Response: Define escalation paths for breaches or non-compliance events, including regulator reporting.

This framework provides a blueprint for responsible AI operations across business functions.
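The first two steps, the use case inventory and regulatory mapping, lend themselves to a simple data model. The sketch below is a hypothetical illustration: the tier names mirror the EU AI Act categories described earlier, while the `UseCase` fields and the three mapping rules are simplified assumptions that a real program would replace with rules reviewed by legal counsel.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Review priority: most severe tier first.
TIER_ORDER = [RiskTier.UNACCEPTABLE, RiskTier.HIGH, RiskTier.LIMITED, RiskTier.MINIMAL]


@dataclass
class UseCase:
    name: str
    owner: str            # accountable team or person
    tier: RiskTier
    jurisdictions: set    # e.g. {"EU", "US"}
    data_types: set       # e.g. {"personal", "health"}


# Simplified, illustrative mapping rules (assumptions, not legal advice).
RULES = [
    (lambda uc: "EU" in uc.jurisdictions, "EU AI Act"),
    (lambda uc: "EU" in uc.jurisdictions and "personal" in uc.data_types, "GDPR"),
    (lambda uc: "US" in uc.jurisdictions and "health" in uc.data_types, "HIPAA"),
]


def map_regulations(use_case: UseCase) -> set:
    """Return the names of the frameworks whose rule matches this use case."""
    return {name for applies, name in RULES if applies(use_case)}


def review_order(inventory: list) -> list:
    """Sort the inventory so the highest-risk use cases are reviewed first."""
    return sorted(inventory, key=lambda uc: TIER_ORDER.index(uc.tier))


inventory = [
    UseCase("support chatbot", "cx-team", RiskTier.LIMITED, {"US"}, {"personal"}),
    UseCase("resume screening", "hr-team", RiskTier.HIGH, {"EU"}, {"personal"}),
]
first = review_order(inventory)[0]
```

Keeping the mapping rules as data rather than scattered conditionals makes them easier to audit and update as regulations change.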

Final Thoughts

AI compliance is no longer optional—it’s a business imperative. As AI systems become more autonomous and integrated into critical workflows, compliance ensures that organizations balance innovation with accountability.

With the right AI governance frameworks, privacy safeguards, and compliance technologies, enterprises can unlock the full potential of AI while preserving trust, transparency, and security.

Investing in AI compliance today not only protects against legal and reputational risk but also positions organizations to lead in a future of responsible, ethical, and compliant AI adoption.


About WitnessAI

WitnessAI enables safe and effective adoption of enterprise AI through security and governance guardrails for public and private LLMs. The WitnessAI Secure AI Enablement Platform provides visibility of employee AI use, control of that use via AI-oriented policy, and protection of that use via data and topic security. Learn more at witness.ai.
