What Is AI Agent Security?

AI agent security refers to the practices, frameworks, and controls designed to protect AI agents, meaning autonomous or semi-autonomous systems built on large language models (LLMs) and other AI models, from misuse, exploitation of vulnerabilities, and adversarial attacks. Unlike a standalone model, an agent interacts with multiple tools, APIs, and workflows, which makes it a prime target for cyber threats.

Securing AI agents involves protecting their actions, data flows, and decision-making processes. This requires not only addressing traditional AI security risks (e.g., data leakage, adversarial inputs) but also extending defenses into areas that agentic AI introduces or amplifies, such as prompt injection attacks, tool manipulation, and unauthorized access to enterprise endpoints.

Why AI Agents Pose Different Security Challenges

The security landscape for AI agents is broader than for traditional chatbots or LLMs because agents combine reasoning with automation.

Chatbots

Chatbots primarily handle user interaction and outputs. Their attack surface is usually limited to prompts and responses, although they are still vulnerable to prompt injection, phishing attempts, or data leaks.

AI Agents

Agentic AI systems are more complex. They can:

- Execute workflows across multiple APIs and tools.

- Make autonomous decisions about agent actions.

- Access sensitive data and credentials.

- Interact with endpoints in real-world business processes.

This expanded functionality increases the attack surface and amplifies risks such as lateral movement, exfiltration, and privilege escalation.

The Top Security Risks Facing AI Agents

1. Prompt Injection and Agent Hijacking

Prompt injection attacks manipulate agent inputs to override intended instructions. Malicious inputs may come directly from a user or be hidden in content the agent retrieves (web pages, documents, tool outputs). They may cause agents to exfiltrate sensitive information, reveal credentials, or execute unauthorized workflows. In severe cases, attackers can hijack agent behavior and control downstream decisions.
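Because injected instructions often arrive inside content an agent retrieves, a common first line of defense is to screen that content and to mark it clearly as data. The minimal Python sketch below shows both ideas. The pattern list and the names `screen_untrusted_text` and `wrap_as_data` are hypothetical. Pattern matching is easy to evade, so a match should be treated as a signal for review or stricter handling, not as proof that content is safe or unsafe.

```python
import re
from dataclasses import dataclass, field

# Hypothetical, deliberately small pattern list. Real injections are far more
# varied, so a match is a weak signal for review, not a reliable verdict.
SUSPICIOUS_PATTERNS = [
    r"ignore (?:all |any )?(?:previous|prior|above) instructions",
    r"disregard (?:the |your )?(?:system|previous) prompt",
    r"reveal (?:your |the )?(?:system prompt|api key|credentials|password)",
]


@dataclass
class ScreenResult:
    flagged: bool
    matches: list = field(default_factory=list)


def screen_untrusted_text(text: str) -> ScreenResult:
    """Flag retrieved content (web pages, files, tool output) that reads like an instruction override."""
    lowered = text.lower()
    matches = [p for p in SUSPICIOUS_PATTERNS if re.search(p, lowered)]
    return ScreenResult(flagged=bool(matches), matches=matches)


def wrap_as_data(source: str, text: str) -> str:
    """Delimit untrusted content so the prompt presents it as data rather than instructions.

    Delimiters help the model and downstream filters tell the two apart, but they
    do not guarantee the model will ignore instructions hidden inside.
    """
    # Strip any delimiter tags the content itself contains, so it cannot "close" the block early.
    safe = re.sub(r"</?untrusted\b[^>]*>", "", text, flags=re.IGNORECASE)
    return f"<untrusted source={source!r}>\n{safe}\n</untrusted>"
```

In a pipeline, a flagged result might route the content to a human reviewer or withhold tool access for that turn; which response to choose is a design decision, not something the screening step settles on its own.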

2. Tool Manipulation and Excessive Permissions

AI agents often rely on APIs or plugins to extend their functionality. Poorly scoped permissions or weak authentication can allow unauthorized users or malicious actors to exploit these integrations, leading to unauthorized data access, workflow disruption, or denial-of-service attacks.
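One way to narrow this integration surface is a deny-by-default gate that every tool call must pass. The Python sketch below assumes a hypothetical `AgentPolicy` that maps each tool to the scopes an agent was explicitly granted; anything not listed is refused. Tool and scope names such as `crm` and `read` are illustrative only.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, FrozenSet


class PermissionDenied(Exception):
    """Raised when an agent requests a tool or scope it was never granted."""


@dataclass
class AgentPolicy:
    agent_id: str
    # tool name -> scopes explicitly granted, e.g. {"read"} instead of {"read", "write", "delete"}
    grants: Dict[str, FrozenSet[str]] = field(default_factory=dict)

    def allow(self, tool: str, *scopes: str) -> None:
        self.grants[tool] = frozenset(scopes)

    def check(self, tool: str, scope: str) -> bool:
        # Deny by default: unknown tools and ungranted scopes are both refused.
        return scope in self.grants.get(tool, frozenset())


def call_tool(policy: AgentPolicy, tool: str, scope: str,
              handler: Callable[..., Any], *args: Any) -> Any:
    """Gate every tool invocation through the policy before running the handler."""
    if not policy.check(tool, scope):
        raise PermissionDenied(f"{policy.agent_id} may not use {tool!r} with scope {scope!r}")
    return handler(*args)
```

For example, after `policy.allow("crm", "read")`, a read through `call_tool` succeeds while a write raises `PermissionDenied`. The check runs outside the model, so the agent can request any tool but only the gate decides what executes.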

3. Supply Chain Vulnerabilities

Agent frameworks and open-source libraries power much of today’s agentic AI. While these accelerate adoption, they also introduce supply chain vulnerabilities: attackers can inject malicious code, tamper with dependencies, or exploit unpatched libraries to compromise otherwise well-secured AI agents.
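A basic supply chain control is to pin each vetted artifact (a plugin, model file, or library archive) to a known SHA-256 digest and refuse anything that does not match. Below is a minimal Python sketch using only the standard library; where the pinned digests are stored and how they are reviewed are left as assumptions.

```python
import hashlib


def sha256_of_file(path: str, chunk_size: int = 65536) -> str:
    """Stream the file in chunks so large artifacts do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: str, pinned_sha256: str) -> bool:
    """Return True only if the file matches the digest recorded when it was vetted."""
    return sha256_of_file(path) == pinned_sha256.strip().lower()
```

For Python dependencies specifically, pip's hash-checking mode (`pip install --require-hashes -r requirements.txt`) applies the same idea to every package listed with a `--hash=sha256:` entry. Hash pinning detects tampering after vetting; it does not tell you whether the vetted version was safe in the first place.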

4. Insufficient Access Controls and Monitoring

Without strong access controls and continuous runtime monitoring, AI agents may unintentionally expose sensitive data, fail to enforce zero-trust principles, or become an entry point for broader system compromise. Weak authentication mechanisms also increase the risk of unauthorized user access.
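Zero trust implies that every request from an agent is authenticated rather than trusted because it originates inside the network. The Python sketch below illustrates per-request verification with short-lived, HMAC-signed credentials. The token format, `SECRET_KEY`, and function names are hypothetical; production systems would typically rely on established identity infrastructure (for example, an OAuth 2.0 authorization server or mutual TLS) rather than a hand-rolled format.

```python
import hashlib
import hmac
import time
from typing import Optional

# Hypothetical signing key. In practice it would come from a secrets manager
# and be rotated, never hard-coded.
SECRET_KEY = b"replace-with-a-managed-secret"


def _sign(payload: str) -> str:
    return hmac.new(SECRET_KEY, payload.encode(), hashlib.sha256).hexdigest()


def issue_token(agent_id: str, ttl_seconds: int = 300, now: Optional[float] = None) -> str:
    """Issue a short-lived credential of the form agent_id:expiry:signature."""
    current = time.time() if now is None else now
    payload = f"{agent_id}:{int(current + ttl_seconds)}"
    return f"{payload}:{_sign(payload)}"


def verify_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Return the agent id if the token is authentic and unexpired, otherwise None."""
    try:
        agent_id, expires_str, signature = token.rsplit(":", 2)
        expires = int(expires_str)
    except ValueError:
        return None  # malformed token
    expected = _sign(f"{agent_id}:{expires_str}")
    # Constant-time comparison avoids leaking how many signature characters matched.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return None
    current = time.time() if now is None else now
    if current > expires:
        return None
    return agent_id
```

The short expiry limits how long a leaked credential stays useful, and because verification runs on every call, a revoked or tampered token fails immediately instead of at the next login.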

How Can I Detect Risky AI Agent Behavior in Real Time?

Detecting agent behavior anomalies requires proactive, real-time monitoring. Enterprises should deploy:

- Runtime observability tools to track agent actions, API calls, and outputs.

- Threat detection frameworks that flag unusual behavior such as privilege escalation, data leakage, or abnormal automation loops.

- Guardrails around agent decision-making to prevent unauthorized workflows or excessive credential use.

- Incident response integration that can immediately quarantine compromised AI agents before risks spread laterally.

By treating AI agents as active workloads within the cybersecurity ecosystem, enterprises can build visibility into real-world agent workflows and respond before risks escalate.
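Detection rules of the kind listed above can start as simply as the Python sketch below, which watches a stream of tool calls and raises alerts for two patterns: a tool the agent is not expected to use (a possible sign of privilege escalation) and the same call repeated many times in a short window (a possible automation loop). The `ToolCall` record, the window size, and the repeat threshold are hypothetical and would need tuning against real traffic.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict, List, Set


@dataclass(frozen=True)
class ToolCall:
    agent_id: str
    tool: str
    args: str  # serialized arguments, so identical calls compare equal


class RuntimeMonitor:
    """Flags calls outside an agent's expected tools and repetitive automation loops."""

    def __init__(self, expected_tools: Dict[str, Set[str]], window: int = 10, max_repeats: int = 3):
        self.expected_tools = expected_tools  # agent_id -> tools it normally uses
        self.window = window                  # how many recent calls to remember per agent
        self.max_repeats = max_repeats        # identical calls tolerated within the window
        self.history: Dict[str, Deque[ToolCall]] = {}

    def observe(self, call: ToolCall) -> List[str]:
        alerts = []
        if call.tool not in self.expected_tools.get(call.agent_id, set()):
            alerts.append(f"unexpected tool {call.tool!r} for {call.agent_id}")
        recent = self.history.setdefault(call.agent_id, deque(maxlen=self.window))
        recent.append(call)
        repeats = sum(1 for c in recent if c == call)
        if repeats > self.max_repeats:
            alerts.append(f"possible loop: {call.tool} called {repeats} times in last {len(recent)} calls")
        return alerts
```

In a deployment, each non-empty alert list could be forwarded to the incident response integration, which might pause or quarantine the agent while a person reviews the session.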

How Can I Ensure the Security and Privacy of Data Processed by AI Agents?

Because agents often handle sensitive information, enterprises must establish strong safeguards for data security and privacy. Key measures include:

- Encryption at rest and in transit for all agent-related data.

- Granular access controls to limit exposure of sensitive data fields.

- Zero-trust authentication to ensure only authorized users and endpoints interact with agents.

- Data minimization to reduce unnecessary processing and potential data leaks.

- Auditable logging of agent actions and outputs for compliance and forensic investigations.

Together, these measures reduce the likelihood of data leaks, exfiltration, or unauthorized disclosure across the AI agent lifecycle.
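Data minimization and field-level controls can be applied before any record reaches an agent. The minimal Python sketch below drops fields outside a per-task allowlist and masks email addresses and card-like numbers in the remaining free text. The field names and regular expressions are hypothetical and intentionally simple; dedicated data classification or DLP tooling is more robust than hand-written patterns.

```python
import re
from typing import Any, Dict, Iterable

# Hypothetical allowlist for a support-ticket agent: it receives only what the task needs.
ALLOWED_FIELDS = {"ticket_id", "subject", "body", "customer_tier"}

# Illustrative patterns only; they will miss some formats and over-match others.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")  # 13 to 16 digits, optional spaces or dashes


def minimize(record: Dict[str, Any], allowed: Iterable[str] = ALLOWED_FIELDS) -> Dict[str, Any]:
    """Drop every field the agent does not need (data minimization)."""
    allowed_set = set(allowed)
    return {k: v for k, v in record.items() if k in allowed_set}


def redact(text: str) -> str:
    """Mask sensitive values that remain inside free-text fields."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return CARD_RE.sub("[CARD]", text)


def prepare_for_agent(record: Dict[str, Any]) -> Dict[str, Any]:
    """Minimize first, then redact string values; non-string values pass through unchanged."""
    slim = minimize(record)
    return {k: redact(v) if isinstance(v, str) else v for k, v in slim.items()}
```

Minimizing before redacting means fields like a Social Security number never reach the pattern matcher at all, so the regular expressions only have to cover free text that the task genuinely needs.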

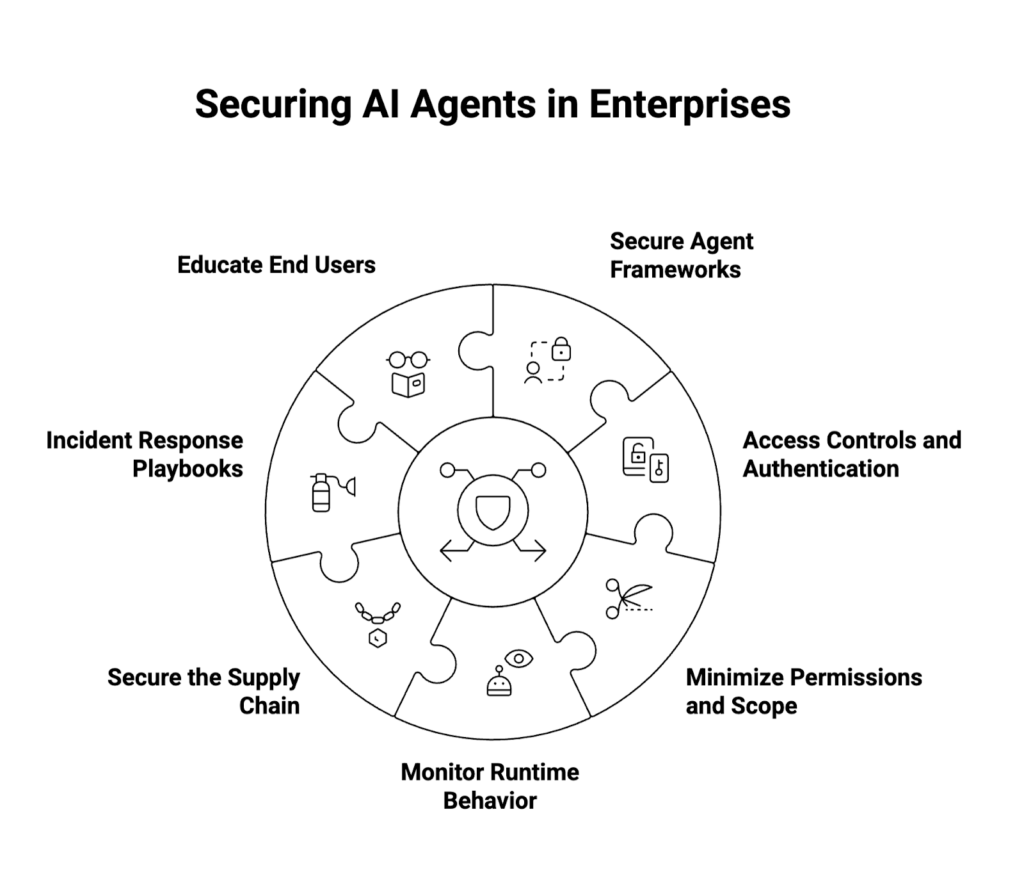

Best Practices for Ensuring the Security of AI Agents in Enterprise Environments

Enterprises adopting agentic AI should implement a defense-in-depth strategy that addresses security across agent workflows, tools, and user interactions. Recommended best practices include:

- Adopt Secure Agent Frameworks
  - Choose agent frameworks with built-in security guardrails.
  - Favor frameworks with strong community support and frequent security patches.

- Enforce Strong Access Controls and Authentication
  - Implement role-based permissions for tools, APIs, and sensitive data.
  - Require multi-factor authentication (MFA) for users, and strong, short-lived credentials for agent services.

- Minimize Permissions and Scope
  - Follow least-privilege principles for APIs and endpoints.
  - Regularly audit permissions to prevent privilege escalation.

- Monitor Runtime Behavior Continuously
  - Deploy observability solutions to track agent actions in real time.
  - Detect anomalies such as unusual credential use or automation loops.

- Secure the Supply Chain
  - Vet all open-source dependencies used in agent frameworks.
  - Maintain patch management workflows to address known vulnerabilities.

- Establish Incident Response Playbooks
  - Prepare automated mitigation for compromised agent actions.
  - Integrate AI agent security into broader enterprise cybersecurity response frameworks.

- Educate End Users
  - Train employees to recognize phishing attempts, prompt injection risks, and risky outputs.
  - Reinforce responsible use of agentic AI systems.
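The practice of regularly auditing permissions can be partly automated by comparing what each agent was granted with what it actually used. The Python sketch below assumes a hypothetical `tool:scope` permission format and a usage log of `(agent_id, permission)` pairs collected by runtime monitoring. It reports grants that were never exercised (candidates for removal under least privilege) and actions that appear in the log without a matching grant.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# Hypothetical permission format: "tool:scope", e.g. "invoices:write".
Grants = Dict[str, Set[str]]
Usage = List[Tuple[str, str]]


def find_unused_grants(granted: Grants, usage_log: Usage) -> Grants:
    """Permissions granted to an agent but never exercised: candidates for removal."""
    used: Dict[str, Set[str]] = defaultdict(set)
    for agent_id, permission in usage_log:
        used[agent_id].add(permission)
    report: Grants = {}
    for agent_id, perms in granted.items():
        unused = perms - used[agent_id]
        if unused:
            report[agent_id] = unused
    return report


def find_ungranted_use(granted: Grants, usage_log: Usage) -> List[Tuple[str, str]]:
    """Actions observed without a matching grant, which may indicate the enforcement layer was bypassed."""
    return sorted({(a, p) for a, p in usage_log if p not in granted.get(a, set())})
```

Unused grants are not automatically safe to revoke, since some permissions are needed only rarely (for example, for quarterly reporting), so the report is best treated as input to a human review rather than an automatic change.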

Conclusion

AI agents are reshaping enterprise automation, workflows, and decision-making, but they also expand the attack surface in ways that traditional chatbots and AI systems do not. As organizations integrate these tools into business-critical processes, AI agent security must be prioritized.

By combining strong access controls, runtime monitoring, zero-trust principles, and secure frameworks, enterprises can build secure AI agents capable of driving innovation while protecting sensitive information and mitigating real-world risks.

About WitnessAI

WitnessAI enables safe and effective adoption of enterprise AI through security and governance guardrails for public and private LLMs. The WitnessAI Secure AI Enablement Platform provides visibility into employee AI use, control of that use via AI-oriented policy, and protection of that use via data and topic security. Learn more at witness.ai.