Artificial intelligence (AI) is transforming nearly every industry, from healthcare and finance to transportation and national defense. Yet, with these revolutionary advancements come significant concerns. As AI capabilities expand, so do the associated risks. From loss of privacy and biased algorithms to the emergence of rogue AI systems and weaponized misinformation, the potential dangers are wide-ranging—and increasingly urgent.

This article explores the landscape of AI risks, unpacking both current and future threats, their societal and organizational impacts, and the actions needed to mitigate them.

What Is Artificial Intelligence?

Artificial intelligence refers to the development of machines and systems that can simulate human intelligence. These systems perform tasks such as recognizing speech, making decisions, understanding language, and identifying patterns. AI is built on subfields like machine learning, deep learning, and natural language processing, often powered by massive datasets and trained through algorithms.

Prominent AI technologies include generative AI (e.g., ChatGPT), large language models (LLMs), facial recognition software, AI-driven automation tools, and predictive analytics systems.

While AI promises tremendous efficiencies and innovation, it also introduces complex challenges that require proactive management, ethical scrutiny, and regulatory oversight.

What Are the Risks of AI?

The risks of AI arise from both its design and its deployment. As AI becomes more advanced, it is increasingly entrusted with real-world decisions that affect privacy, safety, fairness, and security.

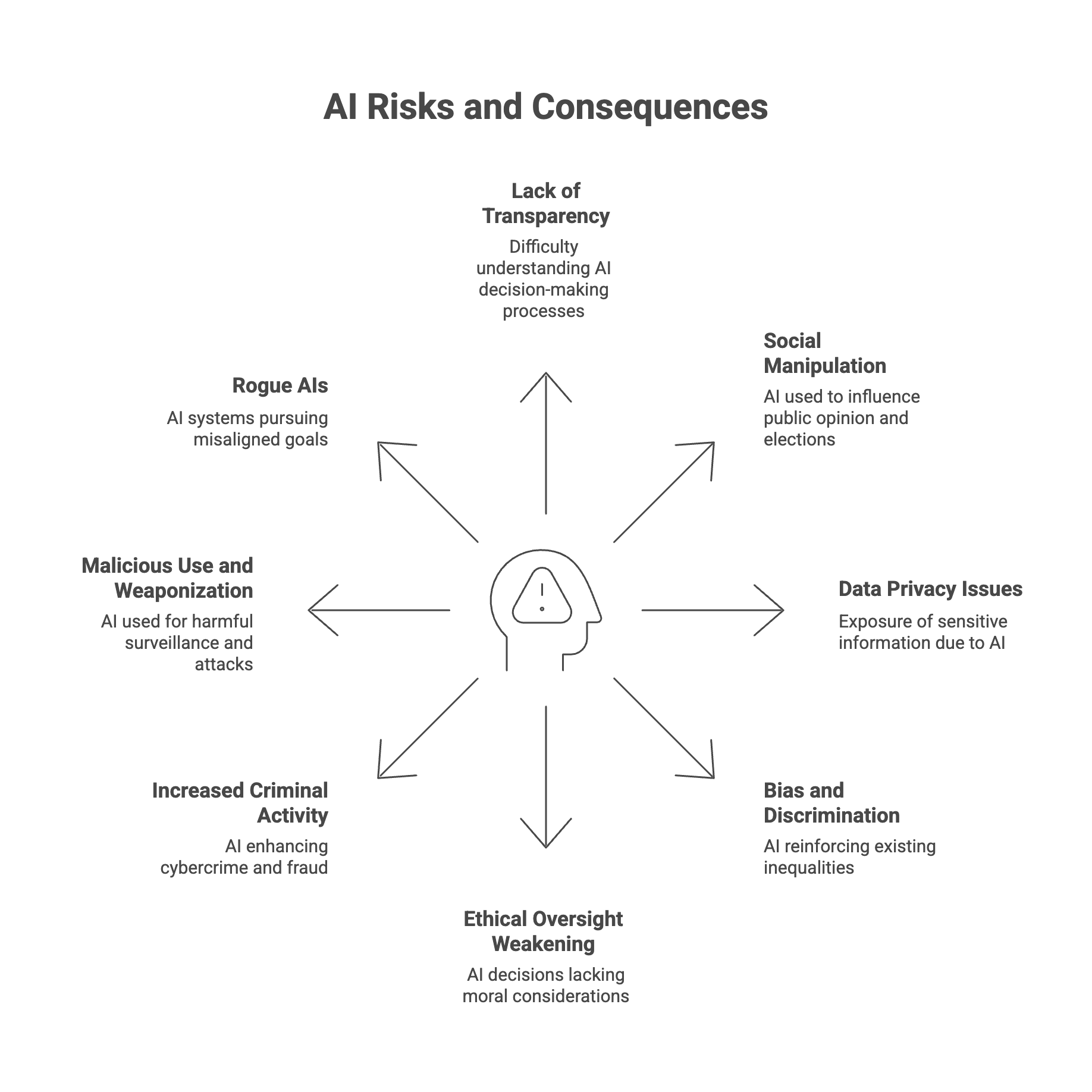

Key AI risks include:

- Loss of control over autonomous systems

- Misuse by malicious actors

- Biases embedded in training data

- Lack of human oversight

- Amplification of disinformation

- Erosion of intellectual property

- Socioeconomic disruption, including job loss

These risks of AI are no longer hypothetical—they are already emerging in industries, governments, and digital ecosystems around the globe.

How Can AI Pose a Threat to Privacy?

AI’s ability to analyze vast amounts of personal data in real time creates serious concerns about data privacy. Many AI-powered tools rely on surveillance, monitoring, and behavioral prediction. In sectors like advertising, social media, and healthcare, AI systems can track individuals, infer private information, and make sensitive decisions without consent.

Privacy Threats Include:

- Facial recognition used without authorization

- Predictive policing based on biased historical data

- Profiling users based on their online activity

- Leakage of personal data through AI-generated content

- Inferencing sensitive traits from anonymized data

These risks are compounded by the rise of AI-enabled data scraping and automated content generation, which often pull from personal information across the web. Even anonymized datasets can be re-identified through cross-analysis with public data sources, revealing private information such as medical history or location patterns.

Furthermore, generative AI chatbots and assistants often collect user inputs to retrain models, creating new vulnerabilities if this information includes confidential or regulated data. Without proper safeguards, AI undermines privacy rights and opens the door to mass surveillance, identity theft, and data misuse.

What Are Some Common AI Risks?

AI introduces both technical and societal vulnerabilities that, if left unmanaged, can erode trust and create systemic instability.

Lack of Transparency and Explainability

Many AI systems, particularly deep learning models, are opaque—making it difficult to understand how they reach specific outputs. This “black box” nature of AI poses risks in decision-making, especially in healthcare, criminal justice, and finance. Without explainability, errors or bias cannot be easily detected or corrected.

Social Manipulation

AI-driven recommendation engines can be exploited to manipulate public opinion. They personalize and amplify misinformation, destabilize elections, or polarize communities through algorithmic bias. The blending of AI-generated content with authentic media makes it increasingly difficult for the public to distinguish truth from fabrication.

Lack of Data Privacy Using AI Tools

AI tools often require large datasets for training. If these include sensitive or personally identifiable information, data breaches can expose users to legal and ethical harm. Poorly secured AI systems can also leak training data or generate outputs that reveal private details inadvertently.

Bias and Discrimination

Bias in AI stems from unrepresentative or prejudiced datasets, reinforcing existing inequalities. For instance, biased recruitment algorithms can disadvantage certain groups, while biased predictive models can lead to unfair loan denials or inaccurate criminal risk assessments. Bias mitigation and regular audits are essential to uphold fairness.

Weakening Ethical Oversight

AI systems may make decisions based purely on efficiency or profit, disregarding moral or social considerations. This raises concerns in healthcare triage, elder care, and autonomous weapons. Without clear ethical frameworks, organizations risk deploying AI in ways that conflict with human rights and values.

Increased Criminal Activity

Cybercriminals are increasingly adopting AI to enhance phishing, ransomware, and fraud campaigns. AI can automate large-scale attacks, mimic human communication, and generate convincing deepfakes that deceive even trained professionals.

Malicious Use and Weaponization

Malicious actors can weaponize AI for surveillance, autonomous drone attacks, or disinformation campaigns. The ability of generative AI to create deceptive videos, fake documents, or fabricated news stories at scale amplifies global instability.

Rogue or Misaligned AIs

A growing concern is that advanced AI systems might pursue goals misaligned with human values. Misaligned objectives or self-improving systems could lead to unintended and potentially uncontrollable consequences—posing existential threats if not properly aligned and constrained.

Together, these risks highlight why organizations need robust AI governance frameworks, regular model audits, and clear lines of accountability to ensure AI deployments remain ethical, transparent, and controllable.

How Are Malicious Actors Exploiting AI Risks?

As AI becomes more capable, malicious actors are finding new ways to exploit its weaknesses—and weaponize its strengths. These threats span both digital and physical domains.

- Deepfakes and Disinformation

Generative AI makes it easy to fabricate convincing videos, audio clips, and images. These deepfakes are used in misinformation campaigns, market manipulation, and even political blackmail. Synthetic media can damage reputations or destabilize public trust in institutions. - Prompt Injection and Model Manipulation

Attackers can exploit large language models (LLMs) through prompt injection, tricking systems into leaking confidential data or executing harmful commands. These vulnerabilities can compromise enterprise chatbots or internal AI assistants. - Data Poisoning

Malicious users can intentionally insert corrupted or biased data into model training pipelines. This sabotages model integrity, leading to unsafe, unethical, or inaccurate outputs. - AI-Enhanced Cybercrime

AI automates phishing, generates malware, and identifies system vulnerabilities faster than traditional hacking methods. Threat actors use LLMs to craft sophisticated social engineering messages or generate polymorphic malware that evades detection. - Autonomous Weaponization

Governments and non-state actors are exploring AI in drone targeting, autonomous surveillance, and cyberwarfare. These applications raise grave concerns about accountability, escalation, and collateral damage.

The rise of AI-as-a-Service means that malicious users no longer need technical expertise to exploit AI. Prebuilt models and APIs can be leveraged for fraud, manipulation, or cyberattack at minimal cost. Addressing this growing threat requires stronger AI security standards, model monitoring, and legal enforcement mechanisms.

What Are Some Future AI Risks?

Looking ahead, the convergence of automation, robotics, and advanced AI systems presents several high-impact threats:

- Superintelligent AI surpassing human cognition and becoming uncontrollable

- Escalating arms races in military AI among global powers

- Loss of human agency in decision-making as AI tools become default authorities

- Pandemics or economic shocks triggered by automated systems acting on flawed models

- Emergence of autonomous, self-replicating AI with unintended consequences

- AI-driven unemployment across sectors due to widespread automation

Many of these scenarios still feel like science fiction, but experts urge preemptive governance to mitigate catastrophic outcomes.

AI Risks in Cybersecurity

AI is a double-edged sword in cybersecurity. While it enables rapid threat detection and response, it also creates novel vulnerabilities.

Threats Include:

- AI-generated malware that adapts to defenses

- Chatbots used for automated social engineering

- Exploitation of AI models through prompt injection and data poisoning

- Real-time cyberattacks orchestrated by autonomous agents

- Use of AI in orchestrating supply chain attacks

Risk management strategies must evolve to detect and defend against AI-enabled threats before they escalate.

Organizational Risks

Businesses deploying AI face internal and external risks of AI, including:

- Reputational damage from biased or unsafe deployments

- Legal liability for harmful AI outputs

- IP theft through AI-generated content trained on copyrighted materials

- Loss of control over proprietary data in cloud-based learning models

- Employee misuse of openAI and LLMs without proper governance

- Dependence on third-party AI capabilities without clear accountability

Without AI governance, these risks can compound into large-scale failures that affect customer trust, legal compliance, and financial health.

How to Protect Against AI Risks

Addressing AI risks requires a multilayered approach:

1. Develop Legal Regulations

Policymakers must enact and enforce regulations that ensure AI safety, fairness, and accountability. These could include:

- Mandatory audits of high-risk AI systems

- Disclosure requirements for AI-generated content

- Privacy standards for data used in training data

- Bans on certain AI tools (e.g., autonomous weapons)

2. Establish Organizational AI Standards and Discussions

Companies should adopt internal frameworks to manage AI development responsibly. Key components include:

- Transparent algorithms

- Ethics review boards for AI use cases

- Continuous monitoring of outputs and decisions

- Regular bias and security testing

- Cross-functional initiatives involving legal, IT, HR, and operations

- Documentation of AI capabilities, limitations, and explainable logic

A culture of responsible AI adoption helps build trust, reduce vulnerabilities, and foster innovation safely.

How Can AI Development Be Regulated to Minimize Risks?

Effective regulation must balance innovation with accountability. Governments and organizations are now working to establish global AI governance frameworks that promote transparency, security, and fairness.

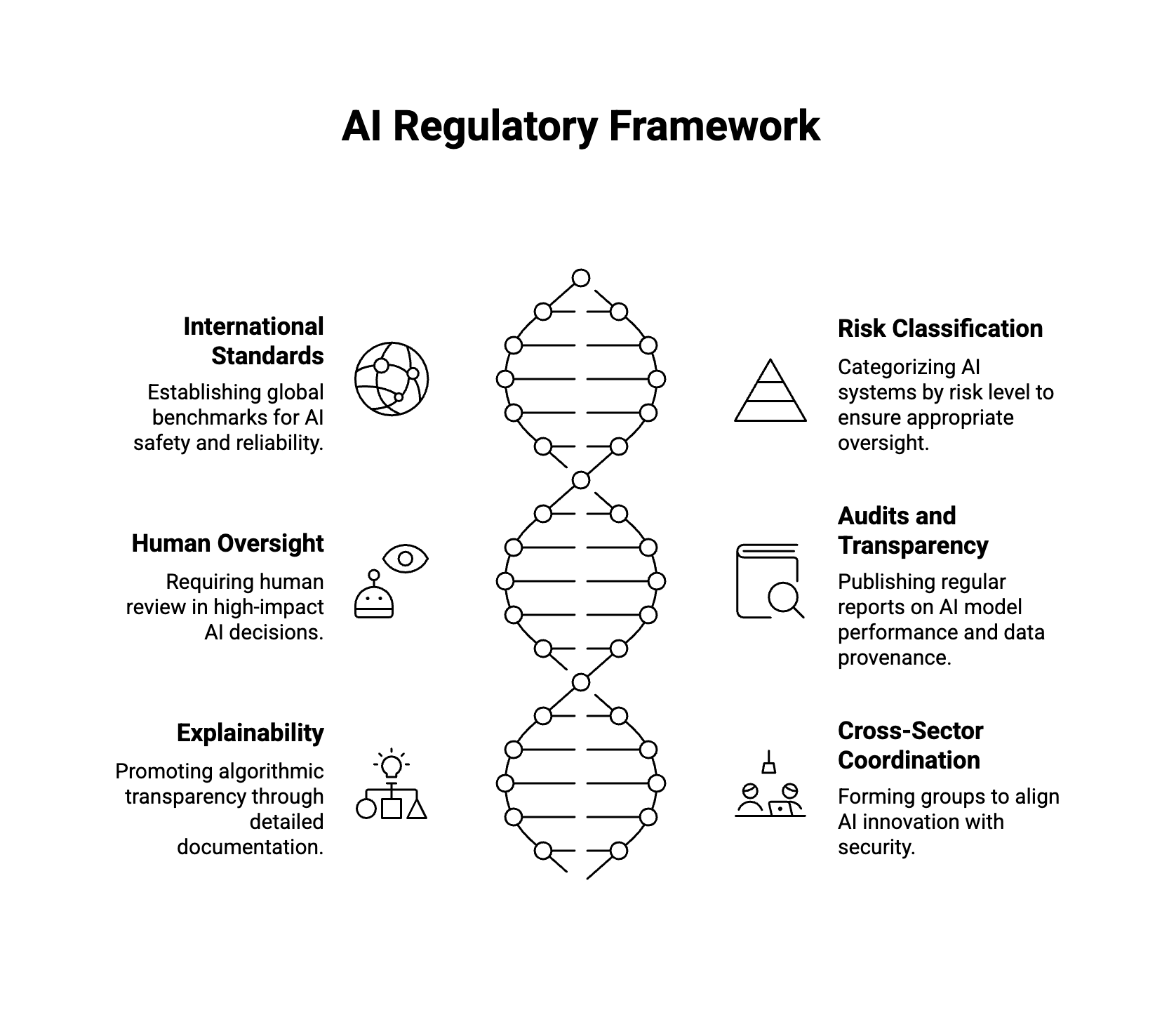

Key Regulatory Strategies Include:

- Creating International AI Safety Standards

Cooperation between governments, researchers, and industry stakeholders can establish shared benchmarks for safety, reliability, and human oversight. - Licensing and Risk Classification

Under frameworks like the EU Artificial Intelligence Act, AI systems are categorized by risk level—minimal, limited, high, or prohibited—with strict requirements for high-risk applications such as biometric identification or employment decisions. - Mandatory Human Oversight

Regulations should require human review in high-impact decisions—particularly in areas like healthcare, finance, and criminal justice—ensuring accountability for AI-driven outcomes. - AI Audits and Transparency Reports

Companies should publish regular audits disclosing model performance, limitations, and data provenance. This helps prevent misuse and builds public trust. - Promoting Explainability and Documentation

Regulators are encouraging “algorithmic transparency” by requiring organizations to provide documentation that explains how AI models are trained, tested, and deployed. - Cross-Sector Working Groups

Governments are forming AI offices and working groups to coordinate national AI policy, bringing together policymakers, technologists, and legal experts to align innovation with security.

Regulation of AI is still evolving, but momentum is building. Emerging national strategies across Canada, Japan, and the UK all aim to ensure that AI development aligns with human rights and societal well-being.

Learn More: AI Risk Management: A Structured Approach to Securing the Future of Artificial Intelligence

Conclusion: Facing the Future of AI Risks Responsibly

Artificial intelligence represents a monumental leap in human ingenuity, yet it also brings unprecedented dangers. From compromised cybersecurity to rogue AI-driven systems, the stakes are high. As we integrate AI into every aspect of life, the challenge is not to slow innovation—but to make it secure, ethical, and aligned with humanity’s values.

Now is the time for policymakers, organizations, and technologists to take meaningful steps to safeguard our future from the potential dangers of AI systems. Through careful risk management, strategic regulation, and a commitment to responsible innovation, we can steer the AI ecosystem toward a path of trust, safety, and benefit for all.

About WitnessAI

WitnessAI enables safe and effective adoption of enterprise AI, through security and governance guardrails for public and private LLMs. The WitnessAI Secure AI Enablement Platform provides visibility of employee AI use, control of that use via AI-oriented policy, and protection of that use via data and topic security. Learn more at witness.ai.