What Are AI Governance Frameworks?

An AI governance framework is a structured set of principles, policies, processes, and tools that guide the responsible use of artificial intelligence across its lifecycle. These frameworks are essential for ensuring that AI technologies are developed and deployed in a manner that aligns with ethical standards, regulatory requirements, and organizational values.

As AI systems become increasingly integrated into real-world applications such as healthcare, finance, and law enforcement, governance structures help keep decision-making processes transparent, fair, and accountable. Effective AI governance frameworks aim to mitigate risks, uphold human rights, and support compliance with evolving regulations such as the EU AI Act, as well as alignment with voluntary guidance such as NIST’s AI Risk Management Framework.

Organizations like IBM have been at the forefront of developing responsible AI governance strategies, contributing significantly to the establishment of global best practices. As machine learning and automation continue to drive technological advancements, implementing AI governance has become a strategic imperative to manage the growing impact of AI on society and business.

How Do You Create an Effective AI Governance Framework?

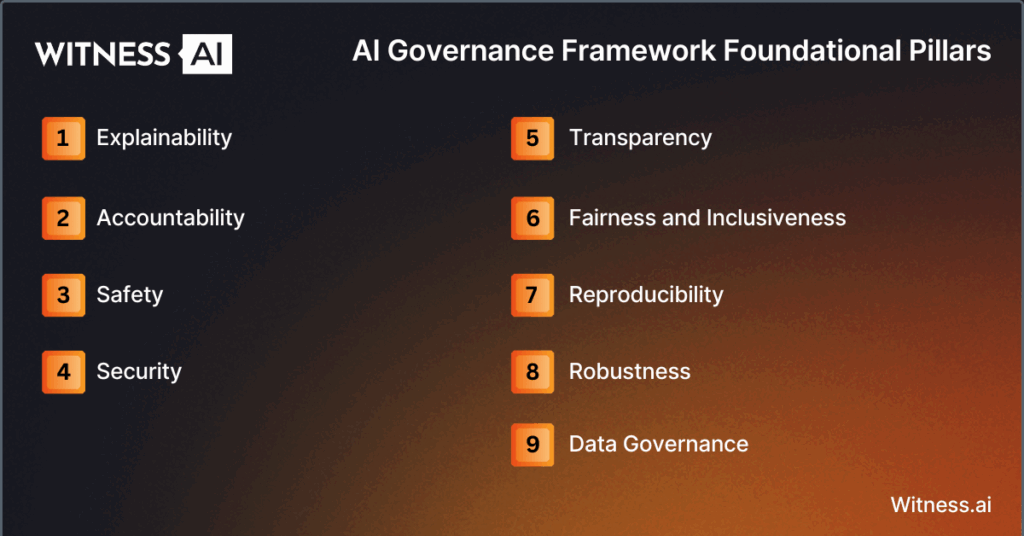

Establishing an effective AI governance framework requires a multi-dimensional approach. It must address the full AI lifecycle, from the development of AI models to the deployment and monitoring of AI-driven outputs. Below are the foundational pillars:

Explainability

Explainability involves making AI systems understandable to both technical and non-technical stakeholders. This ensures that the rationale behind automated decision-making can be interpreted, reviewed, and challenged. For high-risk applications, especially in healthcare and criminal justice, explainability is critical for maintaining transparency and user trust.

Accountability

Accountability assigns responsibility for the outcomes of AI applications. Governance structures must clearly delineate who is responsible for monitoring, auditing, and intervening in AI systems if adverse outcomes occur. Establishing accountability mechanisms ensures that stakeholders can trace decisions back to human oversight.
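One way to make that traceability concrete is an append-only decision log in which every AI output carries a named accountable owner. The following is a minimal sketch, not a prescribed design: the `DecisionRecord` fields, the `append_record` helper, and the example values are all hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """One audit-trail entry linking an AI output to an accountable owner."""
    model_id: str            # hypothetical identifier of the deployed model
    model_version: str
    input_ref: str           # a reference to the input, not the raw data
    output: str
    accountable_owner: str   # person or role answerable for this system
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def append_record(log: list, record: DecisionRecord) -> str:
    """Serialize the record as one JSON line and append it to the log."""
    line = json.dumps(asdict(record), sort_keys=True)
    log.append(line)
    return line


# Usage: an in-memory list stands in for durable, write-once storage.
audit_log: list = []
append_record(
    audit_log,
    DecisionRecord(
        model_id="credit-scoring",
        model_version="1.4.0",
        input_ref="application/12345",
        output="refer_to_manual_review",
        accountable_owner="head-of-credit-risk",
    ),
)
```

In practice the log would live in tamper-evident storage; the point here is only that each decision can be traced to a model version and a responsible human.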

Safety

Safety encompasses the measures taken to prevent harm caused by AI systems. These include stress-testing algorithms under different scenarios, avoiding unexpected behavior in edge cases, and implementing fallback mechanisms. Ensuring safety is especially important in critical infrastructure and autonomous systems.
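A fallback mechanism can be as simple as routing uncertain or failed predictions to a human reviewer. The sketch below assumes a model that returns a `(label, confidence)` pair; the `predict_with_fallback` name and the 0.8 threshold are illustrative choices, not values taken from any standard.

```python
from typing import Callable, Sequence, Tuple

HUMAN_REVIEW = "HUMAN_REVIEW"


def predict_with_fallback(
    model: Callable[[Sequence[float]], Tuple[str, float]],
    features: Sequence[float],
    min_confidence: float = 0.8,  # assumed threshold; tune per use case
) -> Tuple[str, str]:
    """Return (decision, source); fall back to human review when unsure."""
    try:
        label, confidence = model(features)
    except Exception:
        # Any model failure is treated as a reason to escalate.
        return HUMAN_REVIEW, "model_error"
    if confidence < min_confidence:
        return HUMAN_REVIEW, "low_confidence"
    return label, "model"


# Usage with toy stand-in models.
confident = lambda f: ("approve", 0.95)
unsure = lambda f: ("approve", 0.50)
print(predict_with_fallback(confident, [1.0]))
print(predict_with_fallback(unsure, [1.0]))
```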

Security

Security refers to protecting AI models and data from unauthorized access, adversarial attacks, or manipulation. As generative AI expands, robust security controls must be embedded into the AI lifecycle to safeguard training data, outputs, and APIs.

Transparency

Transparency ensures that stakeholders have visibility into how AI systems are designed, trained, and deployed. Transparent documentation of datasets, algorithms, and decision-making logic promotes accountability and fosters regulatory compliance.

Fairness and Inclusiveness

Fairness and inclusiveness focus on preventing discrimination and ensuring equitable outcomes across different demographic groups. This includes auditing for bias in training data, applying fairness metrics, and engaging diverse stakeholders throughout the AI development process.
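As an illustration of what applying a fairness metric can look like, the sketch below computes the demographic parity difference: the gap between the highest and lowest positive-prediction rates across groups. It is one metric among many, the function names are hypothetical, and the data is a toy example.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (== 1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Toy data: group "a" is selected 3 times out of 4, group "b" once out of 4.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(selection_rates(preds, groups))                # {'a': 0.75, 'b': 0.25}
print(demographic_parity_difference(preds, groups))  # 0.5
```

A value of 0 means all groups are selected at the same rate. What gap is acceptable is a policy decision that the governance framework, not the code, has to make.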

Reproducibility

Reproducibility means that an AI model re-run or retrained with the same data, code, and configuration delivers consistent results. It supports validation, testing, and continuous improvement, making the system more robust and reliable.
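Two habits that support reproducibility are pinning random seeds and recording a fingerprint of the exact configuration and data used for a run. The sketch below shows both using only the standard library; `run_fingerprint` and `seeded_sample` are hypothetical helpers, and real training pipelines would also need to pin library versions and hardware-dependent settings.

```python
import hashlib
import json
import random


def run_fingerprint(config: dict, data_bytes: bytes) -> str:
    """Hash the configuration together with a digest of the training data."""
    record = {
        "config": config,
        "data_sha256": hashlib.sha256(data_bytes).hexdigest(),
    }
    # sort_keys makes the hash independent of dictionary key order.
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def seeded_sample(seed: int, n: int) -> list:
    """Draw n pseudo-random numbers from a generator with a fixed seed."""
    rng = random.Random(seed)
    return [rng.random() for _ in range(n)]


# Usage: identical inputs give an identical fingerprint and identical draws.
config = {"learning_rate": 0.01, "seed": 42}
data = b"feature_a,feature_b\n1,2\n3,4\n"
print(run_fingerprint(config, data) == run_fingerprint(dict(config), data))
print(seeded_sample(42, 3) == seeded_sample(42, 3))
```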

Robustness

Robustness means the AI system can perform reliably across various conditions and is resilient to adversarial inputs or data shifts. Rigorous testing, simulation, and validation are required to strengthen robustness.
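A simple robustness check is to perturb inputs slightly and measure how often the prediction stays the same. The sketch below is one possible version of such a test, assuming numeric feature vectors; the `stability_rate` helper, the noise level, and the toy model are illustrative assumptions, and it does not replace adversarial testing.

```python
import random


def stability_rate(model, inputs, noise=0.01, trials=20, seed=0):
    """Fraction of noisy copies whose prediction matches the original."""
    rng = random.Random(seed)  # fixed seed keeps the test repeatable
    stable = 0
    total = 0
    for x in inputs:
        baseline = model(x)
        for _ in range(trials):
            perturbed = [v + rng.uniform(-noise, noise) for v in x]
            stable += int(model(perturbed) == baseline)
            total += 1
    return stable / total


# Toy classifier: predicts 1 when the feature sum is positive.
toy_model = lambda x: int(sum(x) > 0)

# Inputs far from the decision boundary should be fully stable.
print(stability_rate(toy_model, [[5.0, 5.0], [-5.0, -5.0]]))
```

Inputs close to the decision boundary will score lower, which is the signal a reviewer would investigate.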

Data Governance

Data governance ensures the responsible handling of data throughout the AI lifecycle. It encompasses data quality, data protection, data privacy, and appropriate data usage policies. Organizations must align data governance with regulatory frameworks to ensure ethical AI development.

These foundational pillars must be supported by a clear governance roadmap, outlining strategic objectives, implementation phases, risk mitigation plans, and performance metrics. This roadmap ensures that AI initiatives are aligned with organizational goals and regulatory expectations.

What Role Does Transparency Play in AI Governance Frameworks?

Transparency is the cornerstone of any AI governance framework. It builds trust with stakeholders by clarifying how AI models are trained, how decisions are made, and how the system behaves under different conditions.

Transparent AI systems help:

- Facilitate internal and external audits

- Meet regulatory disclosure obligations

- Allow users to understand AI outputs

- Identify biases and potential risks early

Without transparency, stakeholders cannot validate the fairness or accuracy of AI applications, making it nearly impossible to ensure responsible use of AI. Transparency also allows organizations to benchmark performance and demonstrate accountability across use cases and industry verticals.

How Do AI Governance Frameworks Address Ethical Concerns?

Ethical considerations are deeply embedded in AI governance practices. These frameworks apply ethical principles such as autonomy, non-maleficence, justice, and beneficence to guide the development and deployment of AI technologies.

Governance frameworks address AI ethics by:

- Requiring ethical impact assessments at various stages of the AI lifecycle

- Mandating inclusive stakeholder engagement to capture diverse viewpoints

- Incorporating ethical standards into model training, evaluation, and monitoring

- Establishing ethical review boards or oversight committees

By codifying ethical principles into operational policies, AI governance frameworks ensure alignment with societal values and mitigate risks such as algorithmic bias and discriminatory outcomes. Responsible AI implementation is not just a regulatory requirement but a foundation for public trust and long-term adoption of AI technologies.

How Does an AI Governance Framework Impact Data Privacy and Security?

AI governance frameworks play a pivotal role in managing data privacy and security risks. The use of personal data to train and deploy AI models introduces significant concerns around consent, data leakage, and unauthorized usage.

Governance structures address these challenges through:

- Strong data governance policies that include encryption, anonymization, and access control

- Compliance with regulatory requirements like the GDPR, CCPA, and the EU AI Act

- Audits of datasets to identify and remove sensitive data

- Monitoring AI systems for data exfiltration or misuse of outputs

Effective AI governance ensures that the handling of sensitive data aligns with both legal obligations and public expectations around data protection. As regulatory frameworks from the European Union and other jurisdictions evolve, the governance of AI must remain proactive in addressing privacy and security issues.
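Two of the controls listed above, removing sensitive data from datasets and protecting identifiers, can be sketched as follows. This is illustrative only: the email pattern is deliberately simple and will miss edge cases, the helper names and the secret key are hypothetical, and keyed hashing is pseudonymization, which is weaker than full anonymization because whoever holds the key can re-link records.

```python
import hashlib
import hmac
import re

# Simplified pattern for illustration; real PII detection needs more than this.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text: str) -> str:
    """Replace anything that looks like an email address with a marker."""
    return EMAIL_RE.sub("[REDACTED_EMAIL]", text)


def pseudonymize(value: str, key: bytes) -> str:
    """Map an identifier to a stable token using a keyed hash (HMAC-SHA256)."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


# Usage: the key is a placeholder and should come from a secrets manager.
secret_key = b"replace-with-a-managed-secret"
print(redact_emails("Contact jane.doe@example.com today"))
print(pseudonymize("customer-42", secret_key) == pseudonymize("customer-42", secret_key))
```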

Why Is AI Governance Important for Businesses and Organizations?

Adopting a formal AI governance framework offers several strategic advantages for organizations using or developing AI technologies.

Ensuring Trustworthiness and Ethical Considerations

Stakeholders, including customers, regulators, and investors, expect AI systems to operate ethically. Demonstrating a commitment to ethical AI increases stakeholder confidence and reduces reputational risk.

Data Transparency and Compliance

Governance frameworks help organizations meet regulatory requirements by embedding transparency and documentation practices into the AI lifecycle. This is especially important for high-risk AI applications, which attract the closest regulatory scrutiny.

Data-Driven Decisions

Governance facilitates the responsible use of AI for decision-making by ensuring that data and models are accurate, fair, and explainable. This increases the reliability of AI-driven outcomes and supports better business performance.

Additionally, businesses that implement strong governance practices are better positioned to:

- Mitigate potential risks from AI failures or misuse

- Streamline audits and regulatory reviews

- Integrate AI solutions across departments while maintaining oversight

- Align AI initiatives with corporate ethics and regulatory expectations

In sectors such as healthcare, finance, and government, the impact of AI on outcomes and public perception underscores the necessity for comprehensive governance structures.

How Can Organizations Ensure Compliance With AI Governance Standards?

Ensuring compliance with AI governance standards involves a combination of technical, organizational, and procedural measures:

- Establish Cross-Functional Governance Committees: Include legal, compliance, IT, data science, and business leaders to oversee AI practices.

- Adopt Recognized Frameworks: Align with established frameworks and standards such as NIST’s AI Risk Management Framework and ISO/IEC 42001, and with the requirements of the EU AI Act.

- Define Policies and Procedures: Create documented policies for explainability, robustness, fairness, and incident management.

- Implement Monitoring and Auditing Tools: Use automated tools to track model behavior, detect drift, and log decision-making activities.

- Conduct Regular Risk Assessments: Evaluate AI applications against ethical, legal, and operational risks.

- Invest in Training and Awareness: Educate employees and stakeholders on responsible use of AI and the importance of governance structures.

- Integrate Governance into the AI Lifecycle: Embed checkpoints and governance reviews during the development, deployment, and retirement stages of AI systems.

- Maintain Documentation and Metrics: Track performance, outcomes, and compliance with ethical and regulatory benchmarks.

Organizations must also account for evolving AI regulation, both domestic and international, to maintain continuous compliance. By implementing AI governance early in the roadmap for digital transformation, businesses can future-proof their AI strategies and leverage technological advancements responsibly.
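For the monitoring step above, drift detection is often implemented by comparing the distribution of a feature or score in production against a baseline. The sketch below uses the Population Stability Index (PSI) as one common choice. The function name, the bin count, and the smoothing constant are assumptions, and the frequently quoted rule of thumb that values above roughly 0.25 indicate a significant shift should be treated as a starting point to tune, not a standard.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a new sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        eps = 1e-6  # assumed smoothing so empty bins do not produce log(0)
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Usage: a baseline spread over [0, 1) versus data squeezed into [0.5, 1).
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]
print(population_stability_index(baseline, baseline))  # 0.0
print(population_stability_index(baseline, shifted) > 0.25)
```

A scheduled job could compute this per feature and open a review ticket when the value crosses the agreed threshold, which also produces the documentation trail the last bullet calls for.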

About WitnessAI

WitnessAI enables safe and effective adoption of enterprise AI through security and governance guardrails for public and private LLMs. The WitnessAI Secure AI Enablement Platform provides visibility into employee AI use, control of that use via AI-oriented policy, and protection of that use via data and topic security. Learn more at witness.ai.