What is AI Application Security?

AI application security refers to the practice of identifying, mitigating, and preventing security vulnerabilities and threats in artificial intelligence (AI)-powered applications. As AI systems become integral to business operations—driving decisions, automating workflows, and analyzing sensitive data—they simultaneously introduce new security challenges that traditional appsec approaches aren’t equipped to address alone.

With the rise of machine learning (ML) models, generative AI (GenAI), and large language models (LLMs), the attack surface of modern applications has significantly expanded. AI application security is not only about securing code but also about safeguarding algorithms, training data, APIs, and the outputs of autonomous decision-making processes.

What is an AI Application?

An AI application is a software program that integrates artificial intelligence capabilities to perform tasks typically requiring human intelligence. This includes applications that:

- Use ML models to predict user behavior

- Generate content using GenAI or LLMs

- Automate decision-making based on complex datasets

- Adapt functionality based on real-time inputs

Examples span industries and use cases—from fraud detection in financial systems to personalized recommendations in e-commerce and autonomous decision systems in healthcare.

Unlike traditional software, AI applications continuously evolve through training and fine-tuning, making them more powerful but also harder to secure. Their reliance on training data, open-source components, and third-party providers further complicates their security posture.

The Application Attack Surface

AI applications introduce new vectors into the traditional application attack surface. These include:

- Training data manipulation (data poisoning)

- Prompt injection and output manipulation in LLM-based apps

- Model theft or inversion attacks that expose intellectual property

- Unauthorized access to inference APIs or model endpoints

- Adversarial attacks that subtly alter input data to mislead AI outputs

These threats target different lifecycle phases of AI applications—from development and fine-tuning to deployment and runtime. As such, AI application security must encompass a broader, AI-specific scope than traditional cybersecurity.

How Can AI Be Used to Enhance Application Security?

Ironically, AI is not just a target—it is also a powerful security tool. When used appropriately, AI can strengthen application security in the following ways:

- Real-time threat detection: AI-driven threat intelligence platforms can analyze behavioral patterns to detect anomalies and malware in real-time.

- Automated remediation: AI security tools can identify and even fix potential vulnerabilities before they are exploited.

- Enhanced risk management: AI models can assess security risks across complex environments and prioritize remediation based on potential impact.

- Reducing false positives: Traditional detection tools often suffer from alert fatigue. ML algorithms can improve signal-to-noise ratios, helping security teams focus on credible threats.

AI-enabled security tools are especially useful in environments with massive datasets, dynamic workloads, and evolving attack techniques.

What Role Does Data Privacy Play in AI Application Security?

Data privacy is foundational to AI application security. Because AI systems rely heavily on data—often including sensitive information—securing data inputs, storage, and outputs is critical.

Key data privacy concerns in AI applications include:

- Exposure of personal or sensitive information during model training or inference

- Insecure data sources feeding models with unverified or malicious content

- Inadequate data protection leading to leakage through model outputs or APIs

- Compliance risks with regulations like GDPR, HIPAA, or CCPA

To secure AI applications, organizations must integrate robust access controls, data encryption, and auditing mechanisms throughout the AI lifecycle.

How Can AI Application Security Protect Against Adversarial Attacks?

Adversarial attacks are sophisticated threats that subtly manipulate input data to cause AI systems to make incorrect or harmful decisions. For example, by adding noise to an image, attackers can fool a computer vision model into misclassifying it.

AI application security can protect against these attacks through:

- Robust model validation techniques that test models against known adversarial patterns

- Defensive training to improve ML model resilience

- Runtime input sanitization to identify and neutralize manipulated data

- Threat intelligence integration to update models with evolving attack vectors

As adversarial techniques become more advanced, continuous monitoring and validation become essential to safeguard AI functionality and trustworthiness.

How Do You Protect AI Applications from Security Threats?

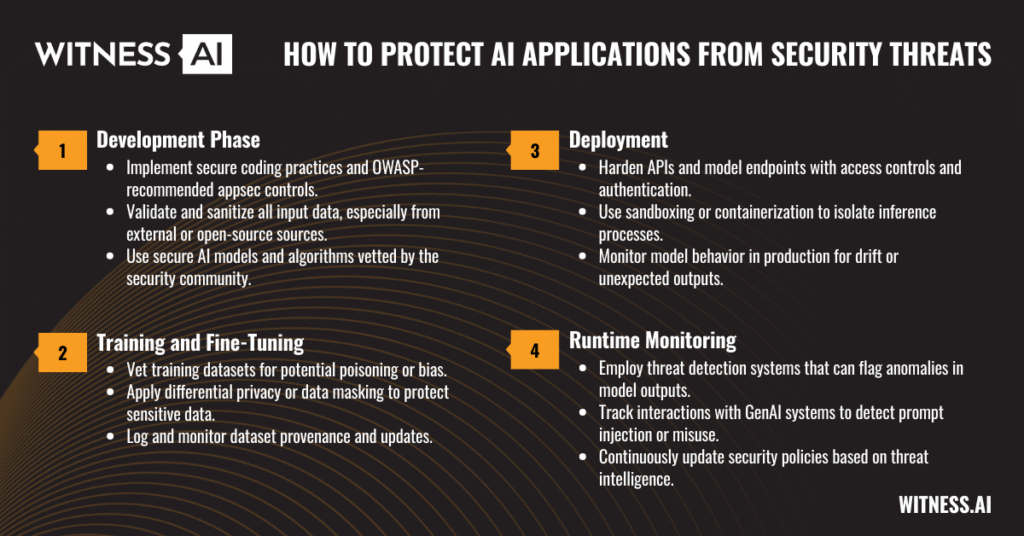

Securing AI applications requires a holistic, lifecycle-oriented approach:

- Development Phase

- Implement secure coding practices and OWASP-recommended appsec controls.

- Validate and sanitize all input data, especially from external or open-source sources.

- Use secure AI models and algorithms vetted by the security community.

- Training and Fine-Tuning

- Vet training datasets for potential poisoning or bias.

- Apply differential privacy or data masking to protect sensitive data.

- Log and monitor dataset provenance and updates.

- Deployment

- Harden APIs and model endpoints with access controls and authentication.

- Use sandboxing or containerization to isolate inference processes.

- Monitor model behavior in production for drift or unexpected outputs.

- Runtime Monitoring

- Employ threat detection systems that can flag anomalies in model outputs.

- Track interactions with GenAI systems to detect prompt injection or misuse.

- Continuously update security policies based on threat intelligence.

What Are AI Application Security Solutions?

A variety of AI application security solutions exist to protect against AI-specific and general application threats:

- Model protection platforms: Secure LLMs and ML models from theft, inversion, and adversarial attacks.

- API security tools: Monitor and control access to AI model endpoints and sensitive data.

- Data lineage and validation platforms: Ensure trustworthy, secure datasets during model training.

- Prompt injection protection tools: Detect and prevent manipulation of GenAI model inputs.

- Security orchestration and automation (SOAR): Integrate threat detection, remediation, and reporting across workflows.

Leading providers now offer AI-specific modules to traditional appsec tools, addressing the growing need to secure intelligent applications.

AI Application Development Security

During development, AI applications are particularly vulnerable due to reliance on third-party tools, open-source libraries, and training data. Developers must:

- Adopt secure software development lifecycle (SDLC) practices tailored for AI systems.

- Conduct code reviews with a focus on ML and GenAI-specific logic.

- Scan open-source components for known vulnerabilities.

- Define secure workflows for model training, version control, and fine-tuning.

- Limit access to training environments to trusted personnel and enforce role-based permissions.

Embedding security early in the development phase reduces remediation costs and minimizes the introduction of potential vulnerabilities into production systems.

AI Application Deployment Security

Once deployed, AI applications face unique runtime risks that differ from traditional apps:

- Dynamic inference behavior: LLMs and GenAI systems generate non-deterministic outputs, which attackers can exploit.

- Continuous learning risks: If models update in real-time, they may incorporate adversarial data.

- External exposure: APIs or model-as-a-service deployments increase exposure to cyberattacks.

Deployment security best practices include:

- Rate limiting and throttling inference requests

- Output validation to prevent model hallucination or information leakage

- Audit logging of model interactions for traceability

- Secure supply chain management for containers and model dependencies

Security teams must treat deployed models as live assets that require ongoing protection and observability.

The Role of AI in Application Security

As organizations scale their use of AI applications, AI will play a growing role in securing other software systems. Specifically, AI contributes to application security through:

- Proactive vulnerability discovery using ML to scan codebases and identify issues faster

- Predictive analytics for breach likelihood and risk scoring

- Behavioral monitoring to flag anomalous activity that traditional systems may miss

- Security decision-making support via automated insights and recommendations

In short, the use of AI in appsec improves responsiveness, scalability, and accuracy—especially in large, complex environments where manual detection fails.

Conclusion

AI application security is no longer optional—it’s a core requirement for any organization adopting AI at scale. As AI systems become more pervasive, so do the associated security risks. From prompt injection and data poisoning to adversarial attacks and unauthorized access, securing AI applications demands specialized tools, policies, and expertise.

A successful AI application security strategy encompasses the entire lifecycle—from development and training to deployment and runtime. It leverages AI both as a defensive tool and as a system to be protected. By implementing robust risk management, validation, and remediation processes, enterprises can strengthen their security posture and optimize the performance and trustworthiness of their AI-powered apps.

About WitnessAI

WitnessAI enables safe and effective adoption of enterprise AI, through security and governance guardrails for public and private LLMs. The WitnessAI Secure AI Enablement Platform provides visibility of employee AI use, control of that use via AI-oriented policy, and protection of that use via data and topic security. Learn more at witness.ai.