What is AI Transparency?

AI transparency refers to the degree to which stakeholders can understand, trace, and evaluate the operations, decisions, and underlying processes of artificial intelligence systems. It involves making the inner workings of AI models—particularly complex ones like neural networks—accessible and explainable to users, developers, regulators, and the broader public.

Transparent AI systems allow users to comprehend how inputs (e.g., datasets, personal data) influence outputs (e.g., recommendations, decisions), why specific predictions are made, and what role automation plays in the overall process. It’s a critical concept for ensuring that AI decision-making aligns with ethical, legal, and social expectations, especially in sensitive sectors such as healthcare, finance, and social media.

Why is AI Transparency Important?

- Building Trust: Transparent AI builds trust with users by demystifying AI-generated decisions. This is especially vital in AI applications that directly impact individuals, such as chatbots used in customer service or AI-driven diagnostic tools in healthcare.

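- Supporting Regulation: Regulations such as the EU AI Act and the GDPR impose transparency obligations. The GDPR, for example, requires organizations to inform individuals about automated decision-making, including meaningful information about the logic involved, as well as the purposes of processing and the sources of personal data.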

- Enabling Risk Management: Transparency allows organizations to identify, assess, and mitigate AI risks. Clear insights into training data, algorithms, and decision-making processes enable stronger risk management and compliance.

- Ensuring Ethical Use: Transparent AI practices support the development of ethical AI systems by exposing potential biases, black box behaviors, and unintended consequences during AI development.

What are the Three Layers of AI Transparency?

Transparency in AI is multi-dimensional, typically categorized into three interconnected layers:

1. Explainability

Explainability refers to the extent to which the outputs of an AI system can be explained in human-understandable terms. This includes explainable AI (XAI) techniques that help clarify how a model arrives at its decisions. For example, in a loan approval model, explainability would allow a user to understand why their application was accepted or rejected.

Key techniques:

- SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) for model explanations

- Feature attribution methods

- Natural language summaries of model behavior
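To make feature attribution concrete, here is a minimal Python sketch of the Shapley-value idea that SHAP builds on, applied to the loan approval example above. It computes exact Shapley values by enumerating every coalition of features, treating a feature as "absent" by replacing it with a baseline value. The scoring function, feature names, weights, and baseline are all hypothetical, and this is not the `shap` library's API: the library uses faster approximations and its own conventions for absent features.

```python
from itertools import combinations
from math import factorial

# Hypothetical loan-application features and an "average applicant" baseline.
FEATURES = ["income", "debt_ratio", "years_employed"]
BASELINE = {"income": 50.0, "debt_ratio": 0.30, "years_employed": 5.0}


def loan_score(x: dict) -> float:
    """Toy scoring model (not a real credit model); higher scores are better."""
    score = 0.02 * x["income"] - 2.0 * x["debt_ratio"] + 0.1 * x["years_employed"]
    # Interaction bonus, so attributions are more than weight * difference.
    if x["income"] > 60 and x["debt_ratio"] < 0.2:
        score += 0.5
    return score


def coalition_value(coalition: set, x: dict) -> float:
    """Score when only features in `coalition` use the applicant's values
    and every other feature is held at its baseline value."""
    mixed = {f: (x[f] if f in coalition else BASELINE[f]) for f in FEATURES}
    return loan_score(mixed)


def shapley_values(x: dict) -> dict:
    """Exact Shapley values by enumerating every coalition (feasible only for few features)."""
    n = len(FEATURES)
    phi = {}
    for f in FEATURES:
        others = [g for g in FEATURES if g != f]
        total = 0.0
        for size in range(n):  # coalitions of 0..n-1 other features
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (coalition_value(s | {f}, x) - coalition_value(s, x))
        phi[f] = total
    return phi


applicant = {"income": 80.0, "debt_ratio": 0.10, "years_employed": 2.0}
for name, contribution in sorted(shapley_values(applicant).items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>15}: {contribution:+.3f}")
```

With these made-up numbers, income contributes +0.85 and debt_ratio +0.65 (each receiving half of the 0.5 interaction bonus), while years_employed contributes -0.30. The three values sum to 1.2, the gap between the applicant's score and the baseline score, which is what lets the attributions be read as a breakdown of a single decision. Exhaustive enumeration grows exponentially with the number of features, which is why practical tools approximate.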

2. Interpretability

Interpretability involves understanding how AI algorithms process input data. While explainability focuses on why an outcome occurs, interpretability emphasizes how the outcome is derived. It is more technical and often aimed at data scientists, engineers, and AI auditors.

Key considerations:

- Algorithmic transparency

- Visualization of neural networks or machine learning models

- Simpler, interpretable models when possible
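Simple models can expose how an outcome is derived directly. The sketch below, a hypothetical hand-written decision tree for the loan example, returns every test it applied alongside its decision, so a reviewer can follow the exact path. The features and thresholds are invented for illustration; a tree learned from data could be inspected the same way.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    decision: str


@dataclass
class Split:
    feature: str
    threshold: float
    left: "Node"   # followed when the feature value is <= threshold
    right: "Node"  # followed when the feature value is > threshold


Node = Union[Leaf, Split]

# Hypothetical hand-written tree for the loan example; thresholds are invented.
TREE: Node = Split(
    "debt_ratio", 0.40,
    left=Split("income", 30.0, left=Leaf("reject"), right=Leaf("approve")),
    right=Leaf("reject"),
)


def predict_with_trace(node: Node, x: dict) -> tuple[str, list[str]]:
    """Return the decision plus every test applied on the way to it."""
    trace = []
    while isinstance(node, Split):
        value = x[node.feature]
        if value <= node.threshold:
            trace.append(f"{node.feature} = {value} <= {node.threshold}")
            node = node.left
        else:
            trace.append(f"{node.feature} = {value} > {node.threshold}")
            node = node.right
    return node.decision, trace


decision, trace = predict_with_trace(TREE, {"income": 45.0, "debt_ratio": 0.25})
print(decision)
for step in trace:
    print("  " + step)
```

For the sample applicant the tree approves and reports the path `debt_ratio = 0.25 <= 0.4`, then `income = 45.0 > 30.0`. A deep neural network offers no comparably short path from input to outcome.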

3. Accountability

Accountability ensures there is a clear assignment of responsibility for the behavior of AI systems. This means organizations must provide governance mechanisms, audits, and documentation to show who is responsible for outcomes and how decisions were made.

Mechanisms:

- Transparency reports

- Human oversight processes

- Clear roles for developers, risk officers, and stakeholders
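Accountability mechanisms depend on records that tie each automated decision to a model version and a responsible owner. The following Python sketch shows one possible shape for such a record, appended to a JSON Lines log. The field names, the `DecisionRecord` class, and the helper functions are hypothetical, not part of any standard or product.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DecisionRecord:
    model_name: str
    model_version: str
    accountable_owner: str   # named role answerable for this system's outcomes
    input_digest: str        # hash of the inputs instead of the raw (possibly personal) data
    outcome: str
    explanation: str         # short human-readable reason for the outcome
    reviewed_by: Optional[str] = None  # filled in when a person reviews the decision
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def digest_inputs(inputs: dict) -> str:
    """Stable SHA-256 of the inputs (key order does not matter).
    Note: hashing alone is not anonymization; low-entropy inputs can be guessed."""
    canonical = json.dumps(inputs, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


def append_record(path: str, record: DecisionRecord) -> None:
    """Append one JSON object per line; existing lines are left as they are."""
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps(asdict(record)) + "\n")
```

A caller would build a record with `digest_inputs(applicant_data)` and pass it to `append_record("decisions.jsonl", record)`. Appending only adds lines, but it does not make the file tamper-proof; an audit trail would typically also need access controls or write-once storage.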

How Can AI Transparency Improve Trust in Technology?

Trust is foundational to the adoption and acceptance of AI technologies. Transparency bridges the gap between black box models and public understanding. By disclosing model limitations, highlighting data sources, and communicating outcomes clearly, organizations can:

- Increase user confidence in AI-driven decisions

- Reduce resistance to automation

- Promote acceptance of AI-generated content

- Address concerns over data privacy and misuse of personal data

For example, generative AI tools that clearly label AI-generated outputs and disclose the type of model used (e.g., OpenAI’s GPT models) are better positioned to earn the trust of end users and regulators alike.

How Can Organizations Show Transparency in AI?

Demonstrating transparency involves practical steps and strategic communication. Organizations can show transparency through:

Documentation & Reporting

- Share transparency reports outlining the model lifecycle, data inputs, and system behavior.

- Maintain detailed AI governance documentation.

XAI Tools

- Integrate explainable AI methods to help users understand decisions in real time.

Disclosure Practices

- Clearly state where and how AI tools are used, especially in user-facing use cases like virtual assistants or social media moderation systems.
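A disclosure can travel with each output rather than living only on a policy page. This minimal sketch attaches both a human-readable notice and machine-readable metadata to generated text; the `AIDisclosure` class, field names, and notice wording are hypothetical and are not an implementation of any labeling standard such as C2PA.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIDisclosure:
    provider: str   # organization operating the model (hypothetical field)
    model: str      # model family or version shown to users
    purpose: str    # what the AI is being used for in this context


def label_output(text: str, disclosure: AIDisclosure) -> dict:
    """Wrap generated text with a human-readable notice and machine-readable metadata."""
    notice = (
        f"This response was generated by an AI system "
        f"({disclosure.provider} {disclosure.model}) for {disclosure.purpose}."
    )
    return {
        "content": text,
        "ai_generated": True,
        "notice": notice,
        "model": {"provider": disclosure.provider, "name": disclosure.model},
    }


support_bot = AIDisclosure(provider="ExampleCo", model="assistant-v2", purpose="customer support")
labeled = label_output("Your order is scheduled to ship on Monday.", support_bot)
print(labeled["notice"])
```

Keeping the metadata in a structured field lets downstream systems filter or audit AI-generated content without parsing the notice text.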

Data Practices

- Provide insights into datasets, including how training data was collected, cleaned, and validated.

- Comply with data protection regulations to ensure ethical AI use.
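Insight into how training data was cleaned is easier to provide when each cleaning step records what it removed. The sketch below applies hypothetical filtering rules in order and produces a per-rule report; the rules and sample records are invented for illustration.

```python
from typing import Callable

Row = dict
# (human-readable description, predicate returning True for rows to keep)
Rule = tuple[str, Callable[[Row], bool]]


def clean_with_report(rows: list[Row], rules: list[Rule]) -> tuple[list[Row], list[dict]]:
    """Apply rules in order and record how many rows each one removed."""
    report = []
    kept = rows
    for description, keep in rules:
        rows_in = len(kept)
        kept = [row for row in kept if keep(row)]
        report.append({"rule": description, "rows_in": rows_in, "rows_dropped": rows_in - len(kept)})
    return kept, report


# Hypothetical rules and records, for illustration only.
RULES: list[Rule] = [
    ("drop rows with missing income", lambda r: r.get("income") is not None),
    ("drop rows with age outside 18-100", lambda r: 18 <= r["age"] <= 100),
]
raw = [
    {"age": 34, "income": 52.0},
    {"age": 17, "income": 12.0},
    {"age": 45, "income": None},
    {"age": 29, "income": 61.0},
]
clean, report = clean_with_report(raw, RULES)
for entry in report:
    print(entry)
```

On the four sample rows, each rule drops one row (the missing-income record, then the 17-year-old applicant), leaving two. A count-only report like this can accompany a dataset description without exposing the underlying personal data.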

Internal Oversight

- Build oversight committees and conduct regular audits of AI systems.

- Ensure human oversight is part of decision-making loops.

What are the Challenges of Transparency in AI?

Despite its benefits, implementing transparent AI comes with several challenges:

- Complexity of Models: Advanced models like deep learning systems are inherently hard to interpret. Providing meaningful insights into their inner workings can be technically difficult.

- Trade-Offs with Performance: Increasing interpretability sometimes requires simplifying models, potentially compromising accuracy. This trade-off must be managed based on the impact of AI in each application.

- Intellectual Property Concerns: Some AI providers are reluctant to disclose algorithms or datasets due to proprietary restrictions, creating tension between transparency and competitive advantage.

- Data Privacy Regulations: Striving for transparency may conflict with GDPR or similar laws that restrict the exposure of personal data, limiting how much information can be shared.

- Lack of Standardization: There is no universal framework for transparency requirements, making implementation inconsistent across the AI ecosystem.

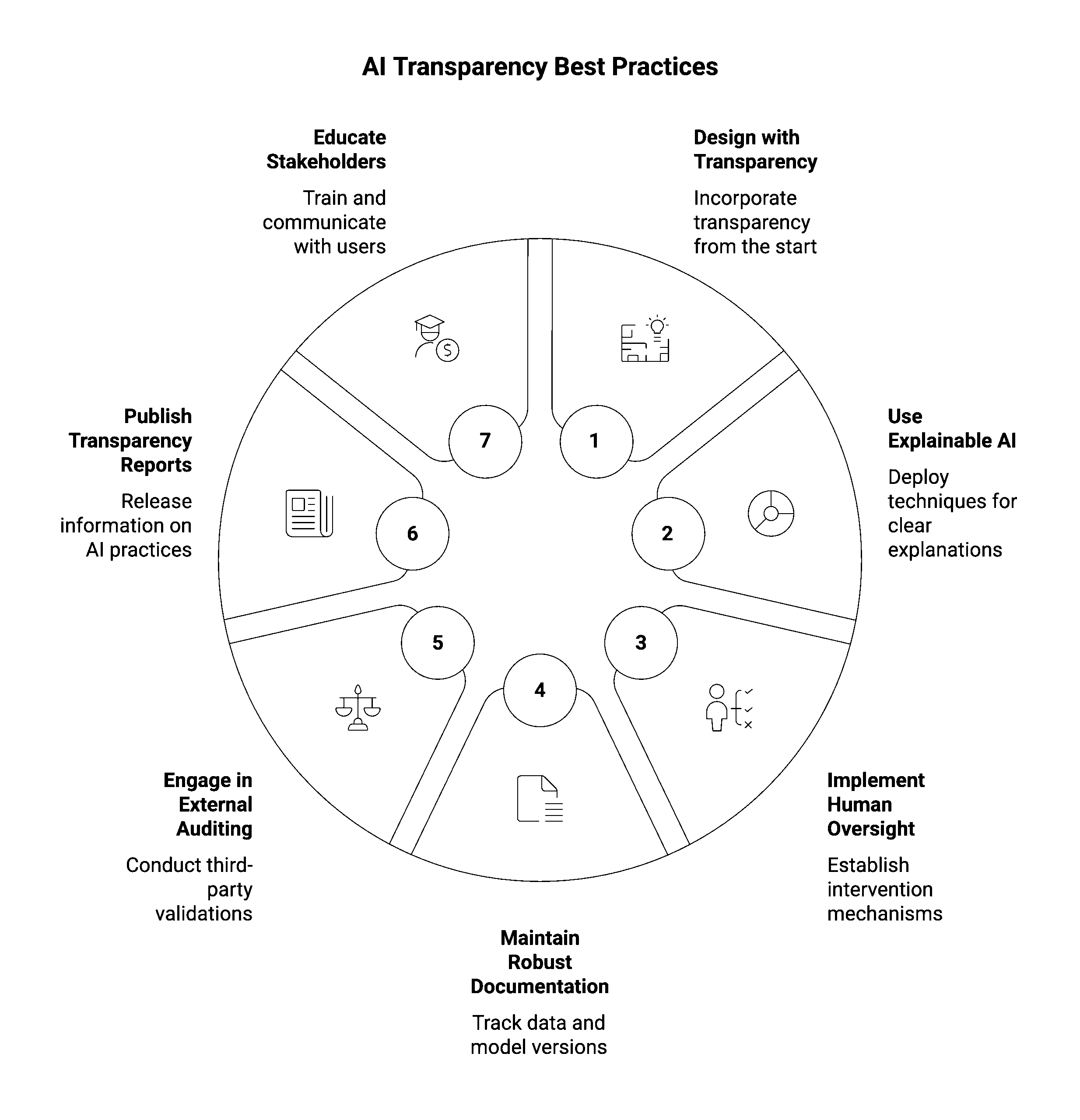

What are the AI Transparency Best Practices?

To promote trustworthy AI and meet evolving AI regulation, organizations should follow these best practices:

1. Design with Transparency in Mind

- Incorporate transparency as a design principle from the start of AI development.

- Choose interpretable models when possible, especially for high-stakes use cases.

2. Use Explainable AI (XAI) Techniques

- Deploy methods like LIME, SHAP, or counterfactual explanations.

- Tailor explanations to both technical and non-technical audiences.
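Counterfactual explanations answer the question "what would have to change for a different outcome?" The sketch below is a deliberately simple version: for a rejected applicant it searches, one actionable feature at a time, for the smallest change on a fixed grid that flips the decision of a hypothetical linear scoring rule. The weights, cutoff, step sizes, and choice of actionable features are all assumptions; published counterfactual methods typically search over several features at once and add plausibility constraints.

```python
APPROVAL_CUTOFF = 1.05  # hypothetical approval cutoff


def loan_score(x: dict) -> float:
    """Toy linear score (weights invented for illustration)."""
    return 0.02 * x["income"] - 2.0 * x["debt_ratio"] + 0.1 * x["years_employed"]


def approve(x: dict) -> bool:
    return loan_score(x) >= APPROVAL_CUTOFF


# Features an applicant could realistically change: (step per move, max moves, lower bound).
ACTIONABLE = {
    "income": (1.0, 200, 0.0),
    "debt_ratio": (-0.01, 100, 0.0),
}


def single_feature_counterfactuals(x: dict) -> dict:
    """For each actionable feature, the smallest grid change that turns a rejection
    into an approval while all other features stay fixed."""
    if approve(x):
        return {}
    found = {}
    for feature, (step, max_moves, lower_bound) in ACTIONABLE.items():
        for k in range(1, max_moves + 1):
            candidate = dict(x)
            candidate[feature] = round(x[feature] + k * step, 4)
            if candidate[feature] < lower_bound:
                break  # stop before proposing an impossible value
            if approve(candidate):
                found[feature] = candidate[feature]
                break
    return found


applicant = {"income": 40.0, "debt_ratio": 0.35, "years_employed": 3.0}
print(approve(applicant), single_feature_counterfactuals(applicant))
```

With these made-up numbers the applicant scores 0.40 against a 1.05 cutoff, and the search reports that raising income to 73 or lowering the debt ratio to 0.02 would each flip the decision on its own. The second suggestion is hardly realistic, which illustrates why plausibility constraints matter when tailoring explanations to end users.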

3. Implement Human Oversight

- Establish clear escalation paths and intervention mechanisms.

- Empower teams to review and override AI decision-making when necessary.
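Escalation paths and overrides can be made explicit in code. In this sketch, outcomes are finalized automatically only when the model is confident and the outcome is not adverse; everything else goes to a human, and any override is recorded next to the model's original output. The threshold, the set of outcomes that always require review, and all names are hypothetical, and model confidence scores are not always well calibrated, so any real threshold would need validation.

```python
from dataclasses import dataclass
from typing import Optional

REVIEW_THRESHOLD = 0.85     # hypothetical confidence cutoff; set per use case
ALWAYS_REVIEW = {"reject"}  # hypothetical: adverse outcomes always go to a person


@dataclass
class Decision:
    case_id: str
    model_outcome: str
    confidence: float       # model's own confidence score in [0, 1]
    final_outcome: Optional[str] = None
    decided_by: str = "pending"
    override_reason: Optional[str] = None


def route(decision: Decision) -> Decision:
    """Auto-finalize only confident, non-adverse outcomes; escalate everything else."""
    if decision.confidence >= REVIEW_THRESHOLD and decision.model_outcome not in ALWAYS_REVIEW:
        decision.final_outcome = decision.model_outcome
        decision.decided_by = "model"
    else:
        decision.decided_by = "human_review_queue"
    return decision


def human_decide(decision: Decision, reviewer: str, outcome: str, reason: str = "") -> Decision:
    """Record the reviewer's final call; the model's original output is kept for audit."""
    decision.final_outcome = outcome
    decision.decided_by = reviewer
    if outcome != decision.model_outcome:
        decision.override_reason = reason or "no reason given"
    return decision
```

For example, `route(Decision("A-2", "reject", 0.97))` is sent to `human_review_queue` despite the high confidence, and a reviewer who approves it through `human_decide` leaves `model_outcome` as `"reject"` with the override reason attached.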

4. Maintain Robust Documentation

- Track training data, model versions, updates, and performance metrics.

- Share information with stakeholders, including regulators and users.
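Documentation is easier to keep current when it is structured data rather than free text. The sketch below, loosely in the spirit of model cards, keeps one immutable record per model version and appends to a changelog on each release. The `ModelRecord` fields, dataset names, and metric values are hypothetical placeholders.

```python
import json
from dataclasses import asdict, dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class ModelRecord:
    name: str
    version: str
    training_data: str                 # which dataset snapshot the model was trained on
    intended_use: str
    known_limitations: tuple[str, ...]
    metrics: dict                      # metric name -> value on a named evaluation set
    changelog: tuple[str, ...] = ()

    def release(self, version: str, change: str, metrics: dict,
                training_data: Optional[str] = None) -> "ModelRecord":
        """Return a new record for the next version; the old record stays unchanged."""
        return replace(
            self,
            version=version,
            metrics=dict(metrics),
            training_data=training_data or self.training_data,
            changelog=self.changelog + (f"{version}: {change}",),
        )

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)


# Hypothetical example values, not real results.
v1 = ModelRecord(
    name="loan-scorer",
    version="1.0.0",
    training_data="applications_2023Q4 snapshot",
    intended_use="pre-screening consumer loan applications; final decisions reviewed by staff",
    known_limitations=("not validated for business loans",),
    metrics={"auc_holdout": 0.81},
)
v2 = v1.release("1.1.0", "retrained on newer data", {"auc_holdout": 0.82},
                training_data="applications_2024Q1 snapshot")
print(v2.to_json())
```

Because `release` returns a new record instead of modifying the old one, every published version remains available to share with regulators or auditors.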

5. Engage in External Auditing

- Conduct third-party audits to validate transparency claims and flag risks.

- Collaborate with independent bodies to ensure objectivity.
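One check an external auditor might run is a comparison of approval rates across groups. The sketch below computes per-group selection rates and flags any group whose rate falls below four-fifths of the highest group's rate, a rule of thumb from US employment-selection guidance. The group labels and sample outcomes are hypothetical, and a single ratio is a screening signal, not a complete fairness audit.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, outcome) pairs; returns approval rate per group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        if outcome == "approve":
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def adverse_impact_flags(records, threshold=0.8):
    """Flag groups whose approval rate is below `threshold` times the highest group's rate
    (the "four-fifths" rule of thumb). Returns {group: impact_ratio} for flagged groups."""
    rates = selection_rates(records)
    best = max(rates.values(), default=0.0)
    if best == 0:
        return {}
    return {g: rate / best for g, rate in rates.items() if rate / best < threshold}


# Hypothetical audit sample: (group label, model outcome).
sample = (
    [("group_a", "approve")] * 8 + [("group_a", "reject")] * 2
    + [("group_b", "approve")] * 5 + [("group_b", "reject")] * 5
)
print(selection_rates(sample))
print(adverse_impact_flags(sample))
```

In the sample, group_a is approved 80% of the time and group_b 50%, giving group_b an impact ratio of 0.625, so it is flagged for closer review.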

6. Publish Transparency Reports

- Regularly release information on AI models, algorithms, and data sources.

- Highlight AI practices that align with responsible AI principles.

7. Educate Stakeholders

- Train data scientists, developers, and leadership on transparent AI principles.

- Communicate clearly with end users about how AI systems work and their limitations.

Final Thoughts

AI transparency is more than a technical feature—it’s a strategic imperative for building trust, ensuring compliance, and advancing responsible AI. As AI technologies become more embedded in daily life, organizations must prioritize explainability, interpretability, and accountability to address public concerns and meet regulatory expectations. By adopting best practices and embracing transparency as a core value, companies can foster a more ethical, secure, and trusted AI ecosystem.

About WitnessAI

WitnessAI enables safe and effective adoption of enterprise AI through security and governance guardrails for public and private LLMs. The WitnessAI Secure AI Enablement Platform provides visibility into employee AI use, control of that use via AI-oriented policy, and protection of that use via data and topic security. Learn more at witness.ai.