What is AI Bias?

AI bias refers to systematic and unfair discrimination that can emerge in the behavior or outputs of artificial intelligence (AI) systems. These biases occur when machine learning models, algorithms, or generative AI tools produce results that reflect and even amplify existing societal inequalities. Biased AI can result in discriminatory outcomes for certain demographics—especially marginalized groups—across sectors like healthcare, finance, law enforcement, and hiring.

AI bias often manifests in subtle but harmful ways, such as language models generating text that perpetuates gender stereotypes, or facial recognition systems misidentifying people with darker skin tones at higher rates than people with lighter skin. As AI technologies like chatbots, image generators, and recommendation engines increasingly influence real-world decisions, the need to identify and mitigate bias becomes more urgent.

What Causes AI Bias?

Bias in AI originates from multiple sources, often intertwined with the limitations and flaws in how these systems are developed and trained.

What Are the Top Sources of Bias in AI?

- Biased Training Data: Most AI models learn from datasets derived from historical data. If that data reflects human biases—such as gender bias, racial disparities, or socioeconomic inequalities—the model will likely replicate and even magnify those biases.

- Lack of Diverse Representation: Underrepresented demographics, such as people with disabilities, certain ethnic groups, or non-Western populations, may be excluded from datasets, leading to skewed outputs.

- Labeling and Annotation Bias: Human decision-makers involved in labeling training data may bring cognitive bias into the process, affecting the accuracy and neutrality of data used for model training.

- Algorithmic Design Choices: Developers’ assumptions and priorities can influence how algorithms weigh different types of data, potentially introducing algorithmic bias.

- Feedback Loops from Deployment: AI models that learn from user interactions—like chatbots on social media platforms—can inherit the biases present in user behavior or engagement data.

- Data Collection Practices: Inadequate data governance or flawed sampling methodologies can lead to overrepresentation or underrepresentation of certain groups.

Examples: Published research, including the 2018 Gender Shades study of commercial systems from IBM, Microsoft, and Face++, has shown that some facial recognition systems are markedly less accurate for individuals with darker skin. In credit scoring, biased AI may penalize individuals from certain zip codes or educational backgrounds, reproducing socioeconomic disadvantages.

What Are the Impacts of Bias in AI Systems?

Bias in AI systems can lead to serious, far-reaching consequences across domains:

- Healthcare: AI tools used for diagnosis or treatment planning may provide less accurate recommendations for patients from marginalized groups, exacerbating health disparities.

- Law Enforcement: Predictive policing algorithms have been criticized for disproportionately targeting communities of color, further entrenching existing inequalities in the justice system.

- Hiring and HR Tools: Automated resume screeners can unintentionally filter out candidates based on gendered language or educational backgrounds tied to specific socioeconomic demographics.

- Finance: AI systems used for loan approvals or credit scoring may produce discriminatory outcomes, denying services to applicants from underrepresented groups.

- Education and Social Media: Generative AI models have been found to replicate harmful stereotypes in text and image generation, influencing public perception and reinforcing discriminatory norms.

These outcomes not only harm individuals and communities but also damage public trust in AI technologies and the organizations deploying them.

Why Is It Important to Reduce AI Bias?

Reducing AI bias is essential for promoting responsible AI development and use. Here’s why:

- Equity and Fairness: Fairness is a fundamental principle in automated decision-making. A system that discriminates, even unintentionally, applies that unfairness to every person it evaluates.

- Compliance and Regulation: Governments and regulators increasingly demand transparency and fairness in AI systems, for example through the EU AI Act. Biased AI can expose organizations to legal liability.

- Public Trust and Adoption: People are more likely to use and trust AI tools if they believe the technology respects their rights and works equitably across demographics.

- Innovation and Accuracy: Reducing bias often improves how well AI algorithms perform for the groups they previously served poorly. More inclusive datasets and evaluation metrics broken down by group help models generalize across diverse real-world contexts.

- Moral and Ethical Responsibility: Developers, researchers, and stakeholders have a duty to prevent harm caused by technology, especially to marginalized and historically disadvantaged communities.

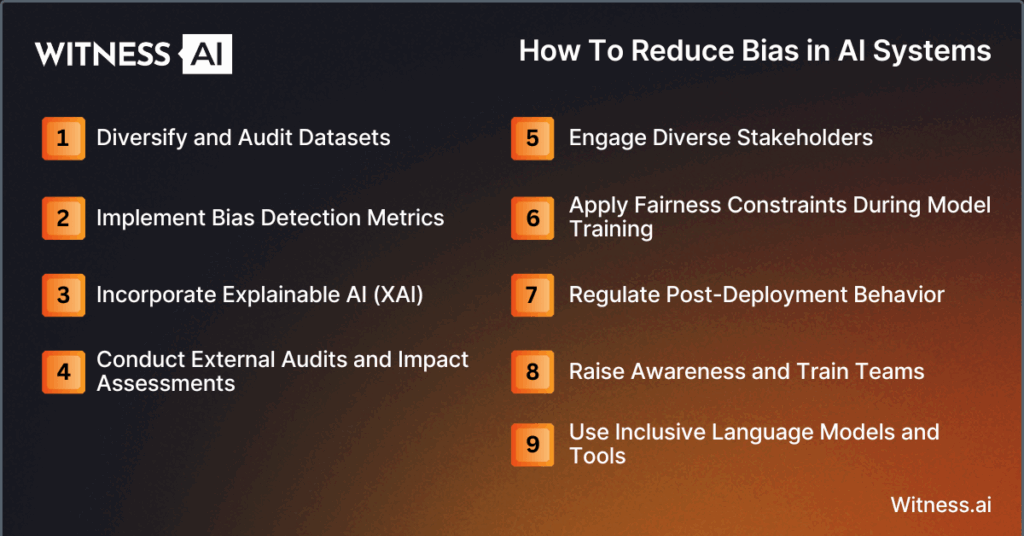

How Can We Reduce Bias in AI Systems?

Addressing AI bias requires a combination of technical, organizational, and ethical approaches. Below are several strategies to mitigate bias throughout the AI lifecycle:

1. Diversify and Audit Datasets

- Use datasets that reflect the diversity of real-world populations, including various races, genders, ages, and socioeconomic statuses.

- Perform regular audits to identify gaps or overrepresentation.

- Apply techniques like data balancing, augmentation, and re-sampling to address disparities.
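
The audit and re-sampling steps above can be sketched in a few lines of Python. This is a minimal illustration, not a recommended pipeline: the record layout, the `group` field, and the 50/50 reference shares are all assumptions made up for the example, and random oversampling is only one of several balancing techniques.

```python
import random
from collections import Counter

def representation_gaps(records, group_key, reference_shares):
    """Return (dataset share - reference share) for each group.

    Positive values mean the group is overrepresented in the dataset,
    negative values mean it is underrepresented.
    """
    counts = Counter(r[group_key] for r in records)
    total = len(records)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }

def oversample_to_balance(records, group_key, seed=0):
    """Randomly duplicate records from smaller groups until every group
    is as large as the largest one."""
    rng = random.Random(seed)
    by_group = {}
    for r in records:
        by_group.setdefault(r[group_key], []).append(r)
    target = max(len(rows) for rows in by_group.values())
    balanced = []
    for rows in by_group.values():
        balanced.extend(rows)
        # Draw the missing number of records with replacement.
        balanced.extend(rng.choices(rows, k=target - len(rows)))
    return balanced

# Toy dataset (hypothetical): 8 records from group A, 2 from group B.
records = [{"group": "A"}] * 8 + [{"group": "B"}] * 2

# Assumed reference population: both groups make up half of it.
gaps = representation_gaps(records, "group", {"A": 0.5, "B": 0.5})
balanced = oversample_to_balance(records, "group")
balanced_counts = Counter(r["group"] for r in balanced)
```

Here `gaps` reports group A as roughly 30 percentage points overrepresented, and `balanced_counts` shows eight records per group after oversampling. Duplicating records does not add new information, so oversampling complements collecting more representative data rather than replacing it.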

2. Implement Bias Detection Metrics

- Use fairness-aware metrics (e.g., equal opportunity difference, disparate impact ratio) to quantify bias.

- Evaluate model performance separately across different demographic groups.
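
As a rough sketch of the two metrics named above, the following Python computes a disparate impact ratio (the ratio of positive-prediction rates between two groups) and an equal opportunity difference (the difference in true positive rates). The group labels, the toy predictions, and the choice of which group counts as "privileged" are assumptions for illustration only.

```python
def selection_rate(y_pred, groups, group):
    """Share of members of `group` that received a positive prediction."""
    preds = [p for p, g in zip(y_pred, groups) if g == group]
    return sum(preds) / len(preds)

def true_positive_rate(y_true, y_pred, groups, group):
    """Share of truly positive members of `group` predicted positive."""
    hits = [p for t, p, g in zip(y_true, y_pred, groups)
            if g == group and t == 1]
    return sum(hits) / len(hits)

def disparate_impact_ratio(y_pred, groups, unprivileged, privileged):
    """Selection rate of the unprivileged group divided by that of the
    privileged group. A value of 1.0 means equal selection rates."""
    return (selection_rate(y_pred, groups, unprivileged)
            / selection_rate(y_pred, groups, privileged))

def equal_opportunity_difference(y_true, y_pred, groups,
                                 unprivileged, privileged):
    """True positive rate of the unprivileged group minus that of the
    privileged group. A value of 0.0 means equal opportunity."""
    return (true_positive_rate(y_true, y_pred, groups, unprivileged)
            - true_positive_rate(y_true, y_pred, groups, privileged))

# Toy data (hypothetical): five people in each of two groups.
groups = ["A"] * 5 + ["B"] * 5
y_true = [1, 1, 1, 0, 0,  1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 1, 0,  1, 0, 0, 0, 0]

# Group A is selected 4 times out of 5, group B once out of 5.
di = disparate_impact_ratio(y_pred, groups, unprivileged="B", privileged="A")

# Qualified members of A are always approved; only 1 in 3 for B.
eod = equal_opportunity_difference(y_true, y_pred, groups,
                                   unprivileged="B", privileged="A")
```

In this toy case `di` is about 0.25 and `eod` is about -0.67, both pointing to a disadvantage for group B. A commonly cited rule of thumb treats a disparate impact ratio below 0.8 as a warning sign, but appropriate thresholds depend on the application and the legal context.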

3. Incorporate Explainable AI (XAI)

- Use explainable AI techniques to understand how models arrive at decisions.

- Explainability helps uncover hidden patterns that could point to algorithmic bias.
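
One model-agnostic way to surface such hidden patterns is permutation importance: shuffle one feature's values and measure how much accuracy drops. The sketch below is an illustration under stated assumptions, not a full XAI workflow. The `toy_model`, the `zip_group` and `income` features, and the data are all hypothetical, and the model is deliberately built to decide on zip code alone.

```python
import random

def accuracy(predict, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(predict(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(predict, rows, labels, feature,
                           n_repeats=20, seed=0):
    """Average drop in accuracy when `feature` is shuffled across rows.

    A large drop means the model leans heavily on that feature.
    """
    rng = random.Random(seed)
    baseline = accuracy(predict, rows, labels)
    drops = []
    for _ in range(n_repeats):
        shuffled = [r[feature] for r in rows]
        rng.shuffle(shuffled)
        # Copy each row with only the chosen feature replaced.
        permuted = [{**r, feature: v} for r, v in zip(rows, shuffled)]
        drops.append(baseline - accuracy(predict, permuted, labels))
    return sum(drops) / n_repeats

def toy_model(row):
    """Hypothetical model that approves purely on zip code group."""
    return 1 if row["zip_group"] == "north" else 0

rows = [
    {"zip_group": "north", "income": 40},
    {"zip_group": "north", "income": 90},
    {"zip_group": "south", "income": 45},
    {"zip_group": "south", "income": 95},
] * 5
labels = [toy_model(r) for r in rows]

zip_importance = permutation_importance(toy_model, rows, labels, "zip_group")
income_importance = permutation_importance(toy_model, rows, labels, "income")
```

Because the toy model ignores income, `income_importance` is zero while `zip_importance` is clearly positive. In a real review, high importance for a feature that can act as a proxy for a protected attribute, such as zip code, would be a reason to investigate further rather than proof of bias on its own.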

4. Conduct External Audits and Impact Assessments

- Third-party audits provide independent evaluations of model fairness and ethical performance.

- Conduct AI impact assessments that evaluate risks to different groups of people.

5. Engage Diverse Stakeholders

- Involve domain experts, ethicists, and representatives from underrepresented communities during AI development.

- Foster inclusive design thinking to surface potential blind spots.

6. Apply Fairness Constraints During Model Training

- Introduce algorithmic constraints or regularization methods that explicitly enforce fairness objectives.

- Use adversarial debiasing during training, or reweight training examples so that underrepresented combinations of group and outcome count more.
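
To make the reweighting idea concrete, here is a minimal sketch of one well-known scheme, the reweighing approach described by Kamiran and Calders. Each training example gets the weight P(group) × P(label) / P(group, label), so that group membership and outcome look statistically independent once the weights are applied. The group names and labels are made-up toy data, and passing the weights to a learner (for example as per-sample weights) is left out.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Per-example weights: expected joint frequency of (group, label)
    under independence, divided by the observed joint frequency."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    pos = sum(w for g, y, w in zip(groups, labels, weights)
              if g == group and y == 1)
    total = sum(w for g, w in zip(groups, weights) if g == group)
    return pos / total

# Toy training set (hypothetical): group A is mostly labeled positive,
# group B mostly negative.
groups = ["A"] * 6 + ["B"] * 4
labels = [1, 1, 1, 1, 0, 0,  1, 0, 0, 0]

weights = reweighing_weights(groups, labels)

rate_a = weighted_positive_rate(groups, labels, weights, "A")
rate_b = weighted_positive_rate(groups, labels, weights, "B")
```

In this toy set the single positive example from group B receives a weight of 2.0, while the four positive examples from group A each receive 0.75. After weighting, both groups have a positive rate of 0.5. Adversarial debiasing works differently (it trains a second model to predict the protected attribute from the first model's outputs and penalizes success) and is not shown here.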

7. Regulate Post-Deployment Behavior

- Monitor AI-generated outputs over time for bias drift.

- Use feedback loops, including reinforcement learning where the system supports it, to correct the model when monitoring reveals inequitable outcomes.
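
Monitoring for bias drift can start with something as simple as tracking per-group outcome rates over a sliding window of recent decisions. The sketch below is one possible shape for such a check. The `BiasDriftMonitor` class, the window size, and the 0.2 gap threshold are all assumptions for illustration, not values taken from any standard.

```python
from collections import deque

class BiasDriftMonitor:
    """Tracks positive-outcome rates per group over recent decisions."""

    def __init__(self, window_size=100, max_gap=0.2):
        # Only the most recent `window_size` decisions are kept.
        self.window = deque(maxlen=window_size)
        self.max_gap = max_gap

    def record(self, group, positive):
        self.window.append((group, bool(positive)))

    def selection_rates(self):
        totals, positives = {}, {}
        for group, positive in self.window:
            totals[group] = totals.get(group, 0) + 1
            positives[group] = positives.get(group, 0) + int(positive)
        return {g: positives[g] / totals[g] for g in totals}

    def gap(self):
        """Largest difference in selection rate between any two groups."""
        rates = self.selection_rates()
        if len(rates) < 2:
            return 0.0
        return max(rates.values()) - min(rates.values())

    def drifted(self):
        return self.gap() > self.max_gap

monitor = BiasDriftMonitor(window_size=8, max_gap=0.2)

# Early traffic (hypothetical): both groups approved at the same rate.
for group, outcome in [("A", 1), ("B", 1), ("A", 0), ("B", 0),
                       ("A", 1), ("B", 1), ("A", 0), ("B", 0)]:
    monitor.record(group, outcome)
early_alert = monitor.drifted()

# Later traffic: approvals for group B stop entirely.
for group, outcome in [("A", 1), ("B", 0), ("A", 1), ("B", 0),
                       ("A", 1), ("B", 0), ("A", 0), ("B", 0)]:
    monitor.record(group, outcome)
late_alert = monitor.drifted()
```

With this toy traffic, `early_alert` is `False` and `late_alert` is `True`, because the gap in the most recent window grows to 0.75. A production monitor would also need minimum sample sizes per group and a decision about which fairness metric to track, both of which are omitted here.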

8. Raise Awareness and Train Teams

- Educate developers and data scientists on sources of bias and mitigation practices.

- Encourage ethical decision-making across all levels of AI development and deployment.

9. Use Inclusive Language Models and Tools

- Evaluate and refine language models to avoid harmful stereotypes.

- Re-train or fine-tune generative AI models, such as image generators, to improve representation across demographics.

Organizations such as IBM and OpenAI are leading initiatives to create fairer machine learning models, but industry-wide collaboration and standards are needed to institutionalize best practices.

Conclusion

AI bias is not an inevitable flaw of automation—it’s a challenge that reflects broader societal inequities. By recognizing the sources of bias in AI systems and actively mitigating them through better data practices, algorithmic fairness, and stakeholder engagement, we can create more just and trustworthy AI technologies. As artificial intelligence continues to power critical decision-making processes across industries, reducing bias must remain a foundational pillar of responsible AI development.

About WitnessAI

WitnessAI is the confidence layer for enterprise AI, providing the unified platform to observe, control, and protect all AI activity. We govern your entire workforce, human employees and AI agents alike, with network-level visibility and intent-based controls. We deliver runtime security for models, applications, and agents. Our single-tenant architecture ensures data sovereignty and compliance. Learn more at witness.ai.