What Are AI Guardrails?

Artificial intelligence is advancing at an unprecedented pace. Large language models (LLMs), generative AI (GenAI) systems, and domain-specific AI applications are being embedded across industries, from healthcare diagnostics to financial modeling. But with these advancements come risks: biased outputs, exposure of personally identifiable information (PII), and even vulnerabilities to prompt injection or jailbreak attacks.

This is where AI guardrails come in. AI guardrails are a collection of safeguards—policy-driven, technical, and procedural—that define how AI systems behave. Much like physical guardrails on a highway, they don’t dictate every move but ensure systems stay within safe and ethical boundaries.

Guardrails can be applied during model design, training data preparation, or at runtime, where they monitor user prompts and generated outputs. They help ensure that AI systems operate responsibly, reduce risk for providers and end users, and protect sensitive information.

Why Are AI Guardrails Important?

The increasing use of AI in sensitive domains has heightened concerns about trust, safety, and compliance. Without proper safeguards, AI systems can:

- Leak personal data: An AI chatbot might accidentally reveal personal data from its underlying datasets.

- Generate harmful content: AI outputs may include discriminatory language, disallowed material, or security-sensitive information.

- Be manipulated by malicious actors: Attacks like prompt injection or jailbreaks can override built-in controls.

- Create compliance gaps: Organizations risk violating data protection rules like GDPR or HIPAA if models mishandle PII or sensitive data.

For organizations deploying AI agents, customer-facing chat interfaces, or automated decision-making systems, AI guardrails provide the foundation for responsible AI. They are not simply technical measures—they are part of broader AI governance frameworks that reassure stakeholders and regulators.

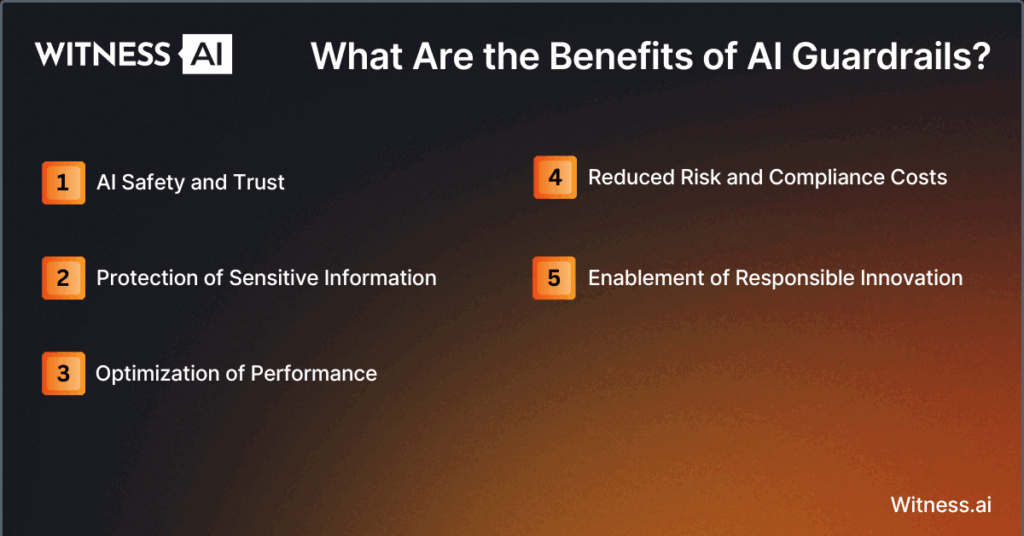

What Are the Benefits of AI Guardrails?

Organizations that adopt AI guardrails gain both operational and strategic advantages:

- AI Safety and Trust

Guardrails prevent unsafe, biased, or misleading AI outputs, reinforcing trust among customers, regulators, and internal stakeholders. - Protection of Sensitive Information

Built-in filters stop the accidental exposure of PII, personal data, or intellectual property during AI interactions. - Optimization of Performance

Guardrails track metrics such as latency, reliability, and accuracy. This ensures real-time systems can remain efficient while upholding compliance. - Reduced Risk and Compliance Costs

Proactive controls minimize costly regulatory violations and data breaches. They also support validation and benchmarks for audits. - Enablement of Responsible Innovation

By creating confidence in the safe use of AI, guardrails encourage more creative adoption of GenAI and machine learning in regulated industries.

What Are the Main Types of AI Guardrails?

AI guardrails come in several forms, often layered together to cover different stages of the AI lifecycle:

- Data Guardrails

- Secure sensitive datasets and enforce data privacy rules.

- Examples: redacting PII, applying access controls, and validating training inputs.

- Model Guardrails

- Built into AI models themselves, addressing bias and vulnerabilities during the development process.

- Example: removing skewed training data, applying fine-tuning to reduce harmful behaviors.

- Output Guardrails

- Active in real-time, monitoring model outputs before they reach the end user.

- Example: filtering disallowed language or blocking responses that could disclose sensitive data.

- Access Guardrails

- Protect the system at the API and infrastructure level.

- Example: requiring authentication tokens, limiting functionality by role, and applying cybersecurity safeguards.

- Lifecycle Guardrails

- Continuous checks applied throughout the AI development lifecycle—from training data validation to post-deployment monitoring.

- Ensure systems evolve responsibly as AI development advances.

How Do AI Guardrails Work?

Guardrails are not a single function; they operate across layers of design, deployment, and monitoring.

- At Input: Guardrails check user prompts for malicious intent, disallowed topics, or injection attempts.

- Within Models: Guardrails align functions and AI outputs with ethical and regulatory standards, often through fine-tuning or reinforcement learning.

- At Output: Guardrails validate responses against compliance thresholds, flagging or blocking unsafe content.

- Post-Deployment: Automated tools benchmark, test, and adapt guardrails as advancements in adversarial threats emerge.

Preventing Biased Outcomes

Bias remains one of the most visible risks in AI systems. Guardrails counter it through:

- Fairness metrics and audits during training data preparation.

- Automated validation to prevent discriminatory outcomes.

- Ongoing monitoring of model drift, ensuring AI outputs remain equitable.

Mitigating Risks

Guardrails are critical in stopping active threats:

- Prompt Injection: Filters detect manipulation attempts where attackers insert malicious instructions.

- Jailbreaks: Guardrails enforce constraints that stop users from bypassing restrictions.

- Sensitive Data Leakage: Systems identify and redact PII or personal data before outputs are delivered.

How to Deploy AI Guardrails

Deployment is a structured process that involves multiple stakeholders across security, compliance, and AI development teams.

- Define Use Cases and Risk Profile

- Map potential vulnerabilities based on how the AI applications will be used.

- Example: a healthcare chatbot faces different risks than a marketing content generator.

- Select the Right Guardrails

- For customer-facing chatbots, prioritize output guardrails and data privacy filters.

- For enterprise integrations, emphasize API and access controls.

- Integrate into the Development Process

- Embed guardrails during AI model design and validation, not just post-deployment.

- Apply fine-tuning to ensure responsible behavior.

- Test Guardrails Against Benchmarks

- Run red-team exercises, simulate adversarial user prompts, and evaluate outputs.

- Measure against compliance benchmarks and latency requirements.

- Monitor and Adapt

- Deploy monitoring tools to track performance in real-time.

- Update controls as new vulnerabilities or regulations emerge.

AI Guardrails Best Practices

1. Regularly Test Guardrail Effectiveness

- Conduct adversarial testing to probe weaknesses.

- Benchmark outputs against both technical standards and regulatory requirements.

- Continuously validate performance to adapt to new use cases.

2. Integrate Security Throughout the AI Lifecycle

- Apply guardrails during training data ingestion, model design, and runtime deployment.

- Maintain alignment with AI safety frameworks.

- Ensure security measures do not excessively impact system latency or end user experience.

3. Stay Informed About Emerging Threats

- Monitor research in open-source security, machine learning adversarial attacks, and GenAI vulnerabilities.

- Anticipate risks like API exploitation, adversarial examples, or data poisoning.

- Update internal policies and controls to match advancements in AI threats.

The Future of AI Guardrails

As AI development accelerates, guardrails will evolve from add-on safeguards to embedded, standardized features across platforms. Providers such as OpenAI and Microsoft already apply layers of guardrails to their LLMs, but enterprises will need tailored solutions.

We can expect:

- Automated guardrail systems with adaptive learning.

- Standardized benchmarks across industries.

- Closer alignment between cybersecurity and AI governance.

- Guardrails that scale seamlessly for multi-agent AI systems and complex use cases.

Ultimately, AI guardrails are not about limiting creativity but about enabling trust and safety. They provide the structure needed for organizations to innovate with confidence while protecting users, data, and reputation.

Conclusion

AI guardrails are essential to the safe and responsible use of AI. They protect sensitive data, mitigate vulnerabilities, and ensure AI outputs align with ethical, legal, and operational expectations.

By embedding guardrails into the development process and monitoring their effectiveness across the AI lifecycle, organizations can embrace GenAI and machine learning innovations while minimizing risk.

As AI becomes more deeply integrated into critical functions, guardrails will determine whether these technologies remain trustworthy and sustainable.

About WitnessAI

WitnessAI enables safe and effective adoption of enterprise AI, through security and governance guardrails for public and private LLMs. The WitnessAI Secure AI Enablement Platform provides visibility of employee AI use, control of that use via AI-oriented policy, and protection of that use via data and topic security. Learn more at witness.ai.