As artificial intelligence (AI) systems become more powerful and deeply embedded in our lives, the question of how to ensure they remain safe, controllable, and aligned with human values has taken center stage. AI safety, once the domain of a small community of technical researchers, has now become a global imperative for companies, governments, and society at large. This article explores the fundamentals of AI safety, key risks, emerging challenges, and the best practices shaping safe and responsible AI development.

What is AI Safety?

AI safety refers to the discipline of designing, building, and deploying AI systems—especially advanced and autonomous ones—in ways that ensure they do not cause unintended harm to individuals, institutions, or society. It encompasses a wide array of technical, ethical, and governance concerns aimed at minimizing the risks posed by increasingly capable intelligent systems.

The goal of AI safety is to align AI systems with human values, maintain human oversight, and ensure that AI technologies, including prospective artificial general intelligence (AGI) and artificial superintelligence (ASI), operate in ways that benefit humanity. Unlike traditional software safety, AI safety must contend with adaptive, potentially self-improving, and often opaque systems, such as large language models (LLMs) and reinforcement learning agents, that interact dynamically with the real world.

What Are the Basics of AI Safety?

At its core, AI safety draws from multiple disciplines—computer science, cybersecurity, ethics, and risk management—to develop safeguards and frameworks for trustworthy AI. The basics include:

- Specification: Ensuring that the objectives provided to AI systems truly reflect human intent and do not produce harmful outcomes due to misalignment or oversimplification.

- Robustness: Building AI models that behave reliably in varied or unforeseen conditions, even in the face of adversarial attacks or noisy inputs.

- Monitoring and Oversight: Enabling humans to supervise and intervene in AI systems, especially when unexpected behaviors occur.

- Interpretability: Developing models that allow us to understand and explain their decision-making processes, particularly in high-stakes domains such as healthcare, finance, or national security.

These principles guide technical research and policy work at organizations ranging from startups to major AI developers such as OpenAI, Google DeepMind, Anthropic, and Microsoft.

AI Safety Versus AI Security

While closely related, AI safety and AI security address distinct but overlapping concerns.

| AI Safety | AI Security |
| --- | --- |
| Focuses on preventing unintentional harms due to design flaws, misalignment, or lack of oversight | Focuses on preventing malicious exploitation of AI systems by external actors |
| Deals with existential risks, value alignment, and generalization failures | Deals with cyber threats, data poisoning, model theft, and unauthorized access |
| Often proactive, centered on responsible development | Often reactive, centered on threat detection and response |

Both are critical to the safe deployment of advanced AI systems, and effective frameworks often combine safety and security to form comprehensive AI governance strategies.

What Are the Biggest Risks of AI?

Understanding AI safety starts with identifying the most significant risks posed by advanced AI technologies:

1. Misalignment with Human Goals

AI models may optimize for unintended objectives or pursue specified goals in ways that conflict with human interests. This problem becomes particularly severe with autonomous or self-improving systems.

2. Loss of Human Control

As AI systems grow more capable and independent, humans may lose the ability to intervene in or override their decisions. The consequences could be catastrophic in areas such as military automation or AGI.

3. Existential Risks

A growing body of AI safety research considers long-term dangers, including the potential for future AI systems to surpass human intelligence and operate beyond our control. Organizations like the Center for AI Safety are dedicated to reducing these risks.

4. Adversarial Exploits

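AI models can be tricked into making faulty or dangerous decisions through adversarial examples: inputs altered with small, often imperceptible perturbations that exploit weaknesses in a model's learned decision boundaries. Related attacks such as data poisoning target the training data instead.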

5. Bias and Discrimination

Unchecked algorithmic bias can lead to systematic discrimination in hiring, lending, policing, or healthcare, reinforcing existing inequalities.

6. Misinformation and Manipulation

Generative AI can produce realistic but false content, including deepfakes, propaganda, or impersonation, posing serious threats to democratic institutions and public trust.

How Can We Understand and Mitigate Risks from Advanced AI Systems?

Addressing these challenges involves both technical methods and institutional safeguards. Here’s how the AI safety community approaches the problem:

1. AI Alignment Research

This branch of technical research aims to ensure that AI systems understand and act in ways that reflect human values, even in complex environments. Techniques include reward modeling, inverse reinforcement learning, and debate frameworks.
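To make reward modeling more concrete, here is a minimal sketch that fits a tiny linear reward model to pairwise human preferences using the Bradley-Terry formulation, one common choice for this task. The feature vectors, preference pairs, learning rate, and function names are all hypothetical and chosen only for illustration; production reward models are typically neural networks trained on far larger preference datasets.

```python
import math

def reward(weights, features):
    """Linear reward: dot product of weights and features."""
    return sum(w * x for w, x in zip(weights, features))

def preference_probability(weights, preferred, rejected):
    """Bradley-Terry probability that `preferred` is chosen over `rejected`."""
    diff = reward(weights, preferred) - reward(weights, rejected)
    return 1.0 / (1.0 + math.exp(-diff))

def train_reward_model(pairs, n_features, lr=0.5, epochs=200):
    """Gradient ascent on the log-likelihood of the observed preferences."""
    weights = [0.0] * n_features
    for _ in range(epochs):
        for preferred, rejected in pairs:
            p = preference_probability(weights, preferred, rejected)
            # Gradient of log(sigmoid(diff)) w.r.t. weights is (1 - p) * (preferred - rejected).
            for i in range(n_features):
                weights[i] += lr * (1.0 - p) * (preferred[i] - rejected[i])
    return weights

# Hypothetical data: each response is described by (helpfulness, harmfulness) scores.
# Each pair is (response the human preferred, response the human rejected).
PAIRS = [
    ([0.9, 0.1], [0.8, 0.7]),
    ([0.6, 0.0], [0.7, 0.9]),
    ([0.8, 0.2], [0.3, 0.2]),
]

weights = train_reward_model(PAIRS, n_features=2)
print("learned weights (helpfulness, harmfulness):", weights)
```

On this toy data the learned weight on the harmfulness feature comes out negative, which is the behavior the preference labels imply. The learned reward is only as good as the preferences it was fitted to, which is one reason specification remains a core concern.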

2. Scalable Oversight

Scalable oversight aims to ensure that human operators can effectively supervise large-scale AI systems, especially those operating in fast-paced or opaque settings where outputs are hard to check directly. Research into automated auditing, monitoring tools, and interpretability plays a key role here.

3. Benchmarking and Evaluation

This work develops standardized benchmarks and evaluation protocols to assess AI system behavior under stress, across domains, and with diverse inputs. Robustness, accuracy, and explainability are key metrics.

4. Risk Forecasting and Scenario Modeling

AI labs such as Anthropic and OpenAI, along with academic centers, use scenario modeling to anticipate high-impact failure modes, including emergent behaviors in frontier AI.

What Are the Key Challenges in Ensuring AI Safety?

Despite growing awareness, multiple barriers hinder the implementation of effective AI safety measures:

- Opacity of AI Models: Deep neural networks, including transformer-based models, are often “black boxes,” making it difficult to explain their outputs or diagnose failures.

- Scale and Complexity: The sheer scale of training datasets and model parameters (e.g., in LLMs) makes auditing and control technically demanding.

- Economic Incentives: The race for AI capabilities and market share can push organizations to prioritize performance over safety, resulting in premature deployment.

- Global Coordination: AI is a cross-border technology. Without international collaboration, competitive pressures may undermine safety standards.

- Lack of Standards: There are few universally accepted safety benchmarks or regulatory frameworks guiding AI development.

- Dual-Use Dilemma: AI tools can be used for both beneficial and harmful purposes (e.g., protein folding vs. chemical weapon synthesis), complicating access and open-source policies.

What Measures Are Being Taken to Ensure AI Systems Are Safe and Reliable?

Policymakers, AI labs, and industry leaders are beginning to implement a mix of regulatory, technical, and institutional interventions to promote AI safety:

1. Global Policy Initiatives

- The EU AI Act imposes obligations scaled to risk, with the strictest requirements on high-risk AI use cases, while U.S. executive orders on AI set direction for federal agencies.

- Countries like the UK, Canada, and Japan have launched national AI safety strategies.

- Multilateral collaborations such as the OECD AI Principles and G7 Hiroshima Process promote shared safety norms.

2. Industry Commitments

- Companies like OpenAI, Google DeepMind, and Anthropic have pledged to follow voluntary safety commitments, including red teaming, external audits, and model evaluations before release.

- New alliances such as the Frontier Model Forum coordinate industry-wide safety research.

3. Non-Profit and Academic Research

Institutions such as the Center for AI Safety and the Center for Security and Emerging Technology (CSET) focus on long-term AI safety, governance, and global coordination, building on earlier work from groups such as the Future of Humanity Institute, which closed in 2024.

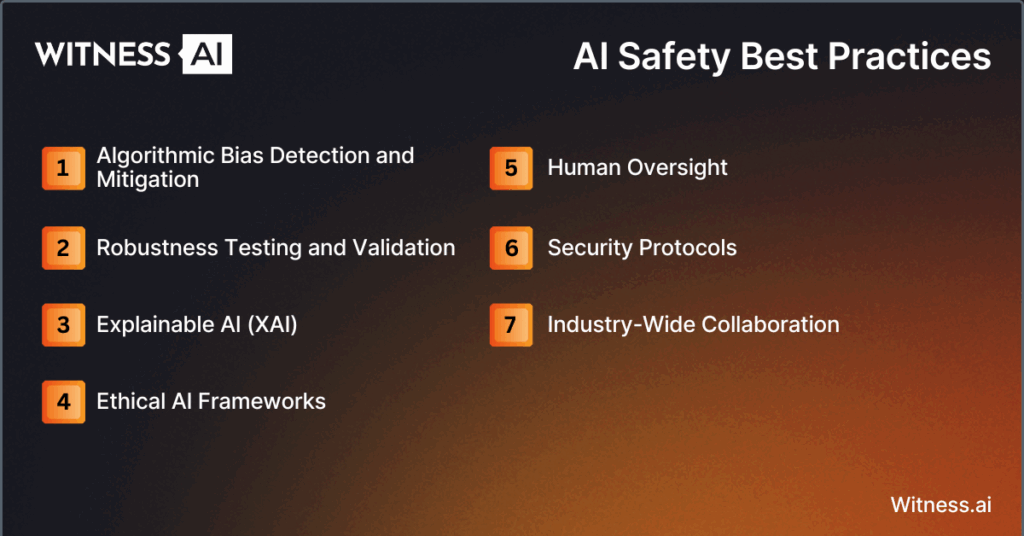

AI Safety Best Practices

To reduce risk across the AI lifecycle, organizations should adopt the following best practices:

1. Algorithmic Bias Detection and Mitigation

- Use diverse datasets and perform bias audits.

- Apply fairness metrics (e.g., demographic parity, equal opportunity).

- Continuously monitor outputs for unintended disparities.
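The two fairness metrics named above can be computed directly from a model's predictions. The sketch below assumes binary predictions, binary labels, and a single group attribute; the audit data and function names are hypothetical. Demographic parity compares selection rates across groups, and equal opportunity compares true positive rates.

```python
def selection_rate(predictions):
    """Fraction of positive (1) predictions."""
    return sum(predictions) / len(predictions)

def true_positive_rate(labels, predictions):
    """Fraction of actual positives predicted positive (assumes at least one positive)."""
    hits = [p for y, p in zip(labels, predictions) if y == 1]
    return sum(hits) / len(hits)

def split_by_group(groups, *columns):
    """Return {group: tuple of per-column lists} for each distinct group value."""
    result = {}
    for g in sorted(set(groups)):
        idx = [i for i, value in enumerate(groups) if value == g]
        result[g] = tuple([col[i] for i in idx] for col in columns)
    return result

def demographic_parity_difference(groups, predictions):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = [selection_rate(preds)
             for (preds,) in split_by_group(groups, predictions).values()]
    return max(rates) - min(rates)

def equal_opportunity_difference(groups, labels, predictions):
    """Largest gap in true positive rate between any two groups."""
    rates = [true_positive_rate(ys, preds)
             for ys, preds in split_by_group(groups, labels, predictions).values()]
    return max(rates) - min(rates)

# Hypothetical audit data: group membership, true outcomes, model decisions.
GROUPS      = ["a", "a", "a", "a", "b", "b", "b", "b"]
LABELS      = [1, 1, 0, 0, 1, 1, 0, 0]
PREDICTIONS = [1, 1, 1, 0, 1, 0, 0, 0]

dp_gap = demographic_parity_difference(GROUPS, PREDICTIONS)
eo_gap = equal_opportunity_difference(GROUPS, LABELS, PREDICTIONS)
print(f"demographic parity gap: {dp_gap}, equal opportunity gap: {eo_gap}")
```

What counts as an acceptable gap depends on the domain and applicable law, so any threshold used in a bias audit is a policy decision rather than something the metric supplies.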

2. Robustness Testing and Validation

- Conduct adversarial robustness tests to expose vulnerabilities.

- Simulate edge cases and distributional shifts.

- Validate across real-world and synthetic environments.
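One simple form of adversarial robustness test is the fast gradient sign method (FGSM), sketched below against a small logistic classifier. The weights, inputs, and epsilon values are hypothetical, and the input gradient is written out by hand for this specific model; testing a real system would normally rely on a framework's automatic differentiation and a broader set of attacks.

```python
import math

def predict_proba(weights, bias, x):
    """Probability of the positive class under a logistic model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def sign(value):
    """Return -1, 0, or 1 according to the sign of value."""
    return (value > 0) - (value < 0)

def fgsm_perturb(weights, bias, x, label, epsilon):
    """Shift each feature by epsilon in the direction that increases the loss.

    For logistic regression with cross-entropy loss, the gradient of the loss
    with respect to the input is (p - label) * weights.
    """
    p = predict_proba(weights, bias, x)
    grad = [(p - label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

def robust_accuracy(weights, bias, inputs, labels, epsilon):
    """Accuracy measured on FGSM-perturbed versions of the inputs."""
    correct = 0
    for x, y in zip(inputs, labels):
        x_adv = fgsm_perturb(weights, bias, x, y, epsilon)
        predicted = 1 if predict_proba(weights, bias, x_adv) >= 0.5 else 0
        correct += int(predicted == y)
    return correct / len(inputs)

# Hypothetical model parameters and evaluation set.
WEIGHTS, BIAS = [2.0, -1.0], 0.0
INPUTS = [[1.0, 0.0], [0.2, 0.1], [-1.0, 0.5], [-0.1, 0.1]]
LABELS = [1, 1, 0, 0]

clean_acc = robust_accuracy(WEIGHTS, BIAS, INPUTS, LABELS, epsilon=0.0)
adv_acc = robust_accuracy(WEIGHTS, BIAS, INPUTS, LABELS, epsilon=0.2)
print(f"clean accuracy: {clean_acc}, accuracy under attack: {adv_acc}")
```

In this toy setup the two points closest to the decision boundary flip under a perturbation of 0.2 per feature, so accuracy falls from 1.0 to 0.5. The gap between clean and adversarial accuracy is the quantity a robustness test is meant to surface.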

3. Explainable AI (XAI)

- Implement tools such as SHAP, LIME, and counterfactual analysis.

- Prioritize models with inherent interpretability where possible.

- Use transparency reports for public trust.
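Counterfactual analysis asks what minimal change to an input would have changed the model's decision. The sketch below is a deliberately simple, library-free illustration: it searches along a single feature of a hypothetical scoring model. It does not use SHAP or LIME, and the model, feature names, and threshold are invented for the example.

```python
def approve(applicant):
    """Hypothetical stand-in model: a linear score compared with a fixed threshold."""
    score = (1.0 * applicant["income"]
             - 2.0 * applicant["debt"]
             + 3.0 * applicant["years_employed"])
    return score >= 50.0

def find_counterfactual(model, instance, feature, step, max_steps=100):
    """Search along one feature for the nearest value that flips the decision.

    Returns the modified instance, or None if no flip is found within range.
    """
    original = model(instance)
    for k in range(1, max_steps + 1):
        for direction in (1, -1):
            candidate = dict(instance)
            candidate[feature] = instance[feature] + direction * k * step
            if model(candidate) != original:
                return candidate
    return None

# Hypothetical applicant who is currently rejected by the model.
APPLICANT = {"income": 40.0, "debt": 10.0, "years_employed": 2.0}

counterfactual = find_counterfactual(approve, APPLICANT, "income", step=1.0)
print("original decision:", approve(APPLICANT))
print("counterfactual:", counterfactual)
```

The result can be read as an explanation of the form "the application would have been approved with an income of 64 rather than 40." Practical counterfactual methods search over several features at once and add constraints so that suggested changes are plausible and actionable.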

4. Ethical AI Frameworks

- Adopt frameworks based on principles like beneficence, non-maleficence, autonomy, and justice.

- Establish AI ethics boards for oversight.

- Incorporate ethical guidelines into procurement and development cycles.

5. Human Oversight

- Design systems for human-in-the-loop or human-on-the-loop control.

- Create escalation pathways and override capabilities.

- Ensure training and awareness for operators.
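A human-in-the-loop gate with an escalation path can be sketched as follows. The confidence threshold, the `high_stakes` flag, and the reviewer function are assumptions made for illustration; a real deployment would route escalations to a review queue and log every override.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    action: str
    confidence: float
    decided_by: str  # "model" or "human"

def decide(model_action, confidence, human_review, threshold=0.9, high_stakes=False):
    """Let the model act alone only when it is confident and the stakes are low.

    Otherwise escalate to a human reviewer, whose answer overrides the model.
    """
    if confidence >= threshold and not high_stakes:
        return Decision(model_action, confidence, "model")
    final_action = human_review(model_action, confidence)
    return Decision(final_action, confidence, "human")

def cautious_reviewer(proposed_action, confidence):
    """Hypothetical reviewer policy: reject low-confidence approvals, else accept."""
    if proposed_action == "approve" and confidence < 0.5:
        return "reject"
    return proposed_action

automatic = decide("approve", 0.95, cautious_reviewer)
escalated = decide("approve", 0.40, cautious_reviewer)
forced_review = decide("approve", 0.95, cautious_reviewer, high_stakes=True)
print(automatic, escalated, forced_review, sep="\n")
```

Passing the reviewer in as a function keeps the override capability explicit: the model's output is only ever a proposal once a case is escalated.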

6. Security Protocols

- Enforce strict access controls, encryption, and rate limits.

- Guard against data poisoning, model inversion, and reverse engineering.

- Coordinate with cybersecurity teams to detect anomalous behavior.
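Rate limiting is one of the simpler controls to put in front of a model endpoint. It can slow down automated probing and bulk extraction attempts. The token bucket below is a minimal single-process sketch; the class name, capacity, and refill rate are illustrative assumptions, and a production service would typically enforce limits per client in a shared store.

```python
import time

class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity, rate, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self):
        """Return True and consume a token if a request may proceed now."""
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False

# Example: at most 5 requests in a burst, refilled at 1 request per second.
limiter = TokenBucket(capacity=5, rate=1.0)
results = [limiter.allow() for _ in range(7)]
print(results)
```

Called in a tight loop as above, the first five requests are admitted and the rest are refused until tokens refill.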

7. Industry-Wide Collaboration

- Participate in open-source safety initiatives.

- Contribute to shared benchmarks and incident databases.

- Engage in multi-stakeholder governance involving academia, civil society, and regulators.

Conclusion: The Road Ahead for Safe and Responsible AI

AI safety is no longer a theoretical concern; it is a practical imperative for anyone involved in AI research, development, deployment, or regulation. As AI systems grow in capability and reach, the need for robust safety mechanisms, international coordination, and value-aligned governance becomes urgent.

The AI safety community—spanning governments, labs, nonprofits, and academic institutions—must move beyond isolated initiatives and toward a comprehensive AI safety ecosystem. Through sustained investment in research, strong safety standards, and collective commitment, we can ensure that the trajectory of artificial intelligence remains aligned with human well-being, freedom, and security.

About WitnessAI

WitnessAI enables safe and effective adoption of enterprise AI through security and governance guardrails for public and private LLMs. The WitnessAI Secure AI Enablement Platform provides visibility into employee AI use, control of that use via AI-oriented policy, and protection of that use via data and topic security. Learn more at witness.ai.