What Is Model Governance?

Model governance refers to the structured framework, policies, and processes that guide the development, deployment, monitoring, and management of models—especially machine learning (ML) and artificial intelligence (AI) models—throughout their lifecycle. It ensures that models are developed responsibly, perform as intended, and meet regulatory and organizational standards.

Effective model governance encompasses both technical and organizational disciplines. It supports transparency in how models are built and deployed, enhances explainability of model outputs, and ensures risk management practices are in place to safeguard against model misuse or degradation over time.

Model governance has become particularly critical with the rise of automated decision-making in high-stakes use cases like loan approvals, credit scoring, healthcare diagnostics, pricing strategies, and fraud detection. In these domains, even small shifts in a model’s performance or outputs can lead to real-world consequences with regulatory, ethical, or financial implications.

Why Is Model Governance Important?

Model governance is not merely a technical best practice—it is a foundational component of responsible AI and ML deployment. Here’s why it’s essential:

1. Risk Management

ML models can introduce significant risks, including:

- Bias in decision-making, especially in demographic-sensitive areas like credit scoring or healthcare

- Model drift due to changes in input data or market conditions

- Inaccurate predictions leading to faulty business decisions

Governance practices provide safeguards to detect and mitigate these issues through model validation, monitoring, and documentation.

2. Regulatory Compliance

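Financial institutions and organizations in other regulated sectors must comply with model risk management (MRM) guidance and related regulations, including:

- Federal Reserve SR 11-7 and OCC Bulletin 2011-12 guidance on model risk management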

- Basel Committee on Banking Supervision’s standards

- EU AI Act and GDPR requirements

Model governance ensures traceability, auditability, and adherence to such regulatory requirements.

3. Business Impact and Accountability

A robust model governance framework improves decision-making by:

- Promoting transparency and explainability in model usage

- Holding model developers, users, and validators accountable

- Enhancing the credibility and reliability of model results across the organization

What Are the Roles of Model Governance?

Model governance encompasses a wide range of roles, each contributing to the oversight and effectiveness of models throughout their lifecycle. These roles include:

- Model Owners: Responsible for ensuring the model’s intended use, performance, and compliance with governance policies.

- Model Developers: Data scientists and engineers who design and build the model using appropriate algorithms and training data.

- Model Validators: Independent teams that evaluate the model’s methodology, assumptions, and outcomes.

- Model Risk Managers: Oversee the overall model risk management process, including inventory, validation cycles, and issue remediation.

- Internal Auditors: Review governance practices and ensure compliance with internal controls and external regulations.

- Executive Sponsors and Business Units: Provide oversight and define how model outputs inform real-time decision-making in operations.

Each of these roles ensures that models are appropriately governed from ideation to retirement.

What Are the Components of Model Governance?

An effective model governance framework includes several core components:

1. Model Inventory

A centralized catalog of all models in use, including:

- Model type (e.g., credit scoring, interest rate forecasting)

- Status in the model lifecycle (development, validation, production, retired)

- Associated business unit and risk level
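
As a rough illustration of what one inventory entry might hold, the sketch below models the three attributes listed above in an in-memory catalog. The class and field names (ModelRecord, ModelInventory, model_id, and so on) are assumptions made for this example and not a standard schema; a real inventory would normally live in a database or a dedicated governance tool.

```python
from dataclasses import dataclass
from enum import Enum


class LifecycleStatus(Enum):
    DEVELOPMENT = "development"
    VALIDATION = "validation"
    PRODUCTION = "production"
    RETIRED = "retired"


@dataclass(frozen=True)
class ModelRecord:
    """One inventory entry. Field names are illustrative, not a standard."""
    model_id: str
    model_type: str        # e.g. "credit scoring"
    status: LifecycleStatus
    business_unit: str
    risk_level: str        # e.g. "high", "medium", "low"


class ModelInventory:
    """In-memory catalog keyed by model_id (hypothetical helper)."""

    def __init__(self) -> None:
        self._records = {}

    def register(self, record: ModelRecord) -> None:
        # Reject duplicates so each model has exactly one entry.
        if record.model_id in self._records:
            raise ValueError(f"duplicate model_id: {record.model_id}")
        self._records[record.model_id] = record

    def by_status(self, status: LifecycleStatus) -> list:
        return [r for r in self._records.values() if r.status is status]


inventory = ModelInventory()
inventory.register(ModelRecord(
    "m-001", "credit scoring", LifecycleStatus.PRODUCTION, "retail lending", "high"))
inventory.register(ModelRecord(
    "m-002", "interest rate forecasting", LifecycleStatus.VALIDATION, "treasury", "medium"))

# List the identifiers of every model currently in production.
in_production = [r.model_id for r in inventory.by_status(LifecycleStatus.PRODUCTION)]
```

Keeping the record immutable (frozen=True) means a status change has to be registered as a new record, which fits naturally with an approval step.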

2. Model Documentation

Comprehensive and standardized documentation covering:

- Purpose and scope of the model

- Methodology and algorithms used

- Assumptions and limitations

- Datasets and data quality assessments

- Validation and testing results

- Metrics for monitoring model performance

Templates and documentation standards ensure consistency and audit-readiness.
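
One way a documentation standard could be enforced is a simple completeness check run before a model moves to validation. The sketch below assumes documentation is stored as a dictionary keyed by section name; the section keys mirror the list above but are hypothetical names chosen for this example.

```python
# Section keys are assumptions that mirror the documentation list above.
REQUIRED_SECTIONS = (
    "purpose_and_scope",
    "methodology",
    "assumptions_and_limitations",
    "datasets_and_data_quality",
    "validation_results",
    "monitoring_metrics",
)


def missing_sections(doc: dict) -> list:
    """Return required sections that are absent or blank, in template order."""
    return [
        name for name in REQUIRED_SECTIONS
        if not str(doc.get(name) or "").strip()
    ]


draft = {
    "purpose_and_scope": "Score retail loan applications.",
    "methodology": "Gradient-boosted trees.",
    "assumptions_and_limitations": "",   # present but empty
    "datasets_and_data_quality": "Applications 2019-2023, deduplicated.",
}

gaps = missing_sections(draft)
```

Here gaps lists the empty section plus the two sections that were never written, which gives a reviewer a concrete checklist rather than a pass/fail flag.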

3. Model Validation

Independent evaluation of:

- Conceptual soundness of model logic

- Back-testing against historical data

- Sensitivity analysis and stress testing

- Benchmarking against alternative models or rules-based systems

Validation must occur pre-deployment and at regular intervals.
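
To make back-testing and benchmarking concrete, here is a minimal sketch that replays historical cases through a candidate model and a rules-based baseline, then compares accuracy. The function names, the toy debt-to-income data, and the 0.05 uplift threshold are all assumptions for illustration; a real validation would use held-out data and metrics suited to the use case.

```python
def accuracy(predictions, actuals):
    """Fraction of predictions that match the recorded outcomes."""
    if not actuals or len(predictions) != len(actuals):
        raise ValueError("predictions and actuals must be non-empty and aligned")
    return sum(p == a for p, a in zip(predictions, actuals)) / len(actuals)


def backtest(model_fn, baseline_fn, history, min_uplift=0.0):
    """Score a candidate and a baseline on the same historical cases.

    history is a list of (features, actual_outcome) pairs.
    """
    actuals = [actual for _, actual in history]
    model_acc = accuracy([model_fn(x) for x, _ in history], actuals)
    baseline_acc = accuracy([baseline_fn(x) for x, _ in history], actuals)
    return {
        "model_accuracy": model_acc,
        "baseline_accuracy": baseline_acc,
        "passes": model_acc - baseline_acc >= min_uplift,
    }


# Toy history: debt-to-income ratio and whether the loan defaulted (1) or not (0).
history = [
    ({"dti": 0.10}, 0),
    ({"dti": 0.25}, 0),
    ({"dti": 0.38}, 1),
    ({"dti": 0.45}, 1),
    ({"dti": 0.55}, 1),
]


def candidate(x):
    return int(x["dti"] > 0.35)   # stand-in for a trained model


def rules_baseline(x):
    return int(x["dti"] > 0.50)   # simple existing business rule


report = backtest(candidate, rules_baseline, history, min_uplift=0.05)
```

Comparing against a baseline on identical cases shows whether the added complexity of a model is buying any measurable improvement.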

4. Model Monitoring

Continuous surveillance of:

- Model performance metrics (e.g., accuracy, AUC, precision, recall)

- Drift in inputs, outputs, and underlying data distributions

- Compliance with model usage policies

- Market conditions affecting model assumptions

Real-time alerts and dashboards can support early detection of anomalies.
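
Input drift can be quantified in several ways. The sketch below uses the Population Stability Index (PSI), one metric often used in credit risk, to compare a baseline sample of a feature with a recent sample. The function name, the equal-width binning, and the small floor value eps are assumptions of this example, not a prescribed method.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline and a recent sample.

    Bin edges are equal-width over the baseline range. Recent values outside
    that range are counted in the first or last bin.
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("baseline sample has no spread")
    width = (hi - lo) / bins

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor empty bins at eps so the logarithm below is defined.
        return [max(c / len(values), eps) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]   # feature values at training time
shifted = [v + 0.3 for v in baseline]      # same feature after a shift

no_drift = psi(baseline, baseline)
drift = psi(baseline, shifted)
```

A commonly quoted rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift, but the thresholds that trigger an alert are a policy decision. Note that the eps floor inflates the score when bins are empty, so very small samples deserve extra care.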

5. Model Risk Tiers

Classification of models based on their impact and complexity:

- High-risk models (e.g., loan approval, pricing)

- Medium-risk models (e.g., customer segmentation)

- Low-risk models (e.g., marketing optimization)

Governance requirements scale according to tier.
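
A tiering rule can be as simple as a scoring function over impact and complexity. The following sketch is illustrative only: the 1 to 5 scales, the cut-offs, and the revalidation intervals are assumptions that each organization would set in its own policy.

```python
def assign_tier(impact: int, complexity: int) -> str:
    """Map 1-5 impact and complexity scores to a risk tier.

    The scales and cut-offs here are hypothetical examples.
    """
    for name, value in (("impact", impact), ("complexity", complexity)):
        if not 1 <= value <= 5:
            raise ValueError(f"{name} must be between 1 and 5")
    score = impact * complexity          # ranges from 1 to 25
    if impact == 5 or score >= 15:       # maximum impact is always high risk
        return "high"
    if score >= 6:
        return "medium"
    return "low"


# Governance requirements scale with tier: months between revalidations.
REVALIDATION_MONTHS = {"high": 6, "medium": 12, "low": 24}

loan_tier = assign_tier(impact=5, complexity=3)
segmentation_tier = assign_tier(impact=2, complexity=3)
marketing_tier = assign_tier(impact=1, complexity=3)
```

Treating maximum impact as an automatic high tier keeps a simple but consequential model from slipping into lighter oversight.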

6. Model Change Management

Procedures for:

- Version control of models and datasets

- Approval workflows for material model changes

- Retesting and documentation following updates
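
One way to tie version control to approval workflows is to fingerprint everything that defines a model version and require re-approval whenever the fingerprint changes. This is a minimal sketch using SHA-256 from the Python standard library; the function names and the choice of what goes into the fingerprint are assumptions for illustration.

```python
import hashlib
import json


def fingerprint(model_bytes: bytes, dataset_bytes: bytes, params: dict) -> str:
    """Hash the model artifact, training data, and parameters together."""
    h = hashlib.sha256()
    h.update(hashlib.sha256(model_bytes).digest())
    h.update(hashlib.sha256(dataset_bytes).digest())
    # sort_keys makes the hash independent of dictionary ordering.
    h.update(json.dumps(params, sort_keys=True).encode("utf-8"))
    return h.hexdigest()


def needs_reapproval(approved_fp: str, candidate_fp: str) -> bool:
    """Any difference from the approved fingerprint triggers the workflow."""
    return approved_fp != candidate_fp


approved = fingerprint(b"model-v1", b"train-2023", {"max_depth": 4, "lr": 0.1})
same_config = fingerprint(b"model-v1", b"train-2023", {"lr": 0.1, "max_depth": 4})
new_data = fingerprint(b"model-v1", b"train-2024", {"max_depth": 4, "lr": 0.1})

unchanged = not needs_reapproval(approved, same_config)
changed = needs_reapproval(approved, new_data)
```

In practice the byte strings would be the contents of the serialized model and a dataset snapshot. Whether every change counts as material, or only some, is a policy question this sketch does not answer.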

How Does Model Governance Help in Managing Compliance and Risk in AI Systems?

AI and machine learning models—especially generative AI models—pose unique challenges due to their opacity, adaptability, and autonomy. Model governance mitigates these risks through:

1. Regulatory Compliance

By ensuring end-to-end traceability, model governance supports alignment with:

- Federal Reserve, OCC, and Basel guidance on model risk management

- GDPR provisions on data subject rights, including those relating to automated decision-making

- Industry-specific standards in healthcare and finance

2. Risk Assessment and Controls

Governance practices help institutions perform comprehensive risk assessments based on:

- Model outputs and their impact on stakeholders

- The quality and sensitivity of training data

- The nature of automated decision-making (e.g., black-box vs. interpretable models)

3. Auditability and Accountability

Proper model documentation and lifecycle tracking enable internal audit functions and regulators to:

- Reconstruct how model results were generated

- Evaluate decisions driven by model outputs

- Assign responsibility for governance failures
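
Reconstructing how a result was generated depends on a decision log that cannot be quietly edited. The sketch below shows one possible approach, a hash-chained log in which each entry commits to the one before it. The event fields and function names are hypothetical, and a production audit trail would also need durable, access-controlled storage.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64   # placeholder "previous hash" for the first entry


def _digest(event: dict, prev_hash: str) -> str:
    payload = json.dumps({"event": event, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: list, event: dict) -> None:
    """Append an event whose hash covers both the event and the prior hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({
        "event": event,
        "prev_hash": prev_hash,
        "hash": _digest(event, prev_hash),
    })


def verify_chain(log: list) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, {"model_id": "m-001", "version": "1.2.0",
                         "input_id": "app-42", "output": "decline"})
append_entry(audit_log, {"model_id": "m-001", "version": "1.2.0",
                         "input_id": "app-43", "output": "approve"})
intact = verify_chain(audit_log)

audit_log[0]["event"]["output"] = "approve"   # simulate after-the-fact tampering
tampered_detected = not verify_chain(audit_log)
```

Recording the model version alongside each decision is what lets an auditor link an output back to the exact artifact that produced it.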

4. Explainability and Trust

Well-governed models are paired with explainability techniques such as SHAP or LIME, which:

- Help internal stakeholders understand decision logic

- Enhance customer transparency, especially in use cases like credit scoring or healthcare eligibility

Who Are the Main Stakeholders in Model Governance?

Model governance involves cross-functional collaboration. Key stakeholders include:

Data Scientists and ML Engineers

- Build and train models

- Must follow governance guidelines and document methodologies

Risk and Compliance Teams

- Evaluate model usage against regulatory frameworks

- Ensure proper validation and control implementation

Business Executives and Model Owners

- Use model results in strategic and operational decision-making

- Sponsor model development and set risk appetite

Internal Audit and Legal Teams

- Verify governance adherence

- Address legal exposures related to model bias, explainability, or data misuse

IT and DevOps

- Support infrastructure for model deployment, monitoring, and access controls

- Enable scalable, real-time governance workflows

Stakeholder alignment ensures that governance is not a siloed function but an organizational commitment.

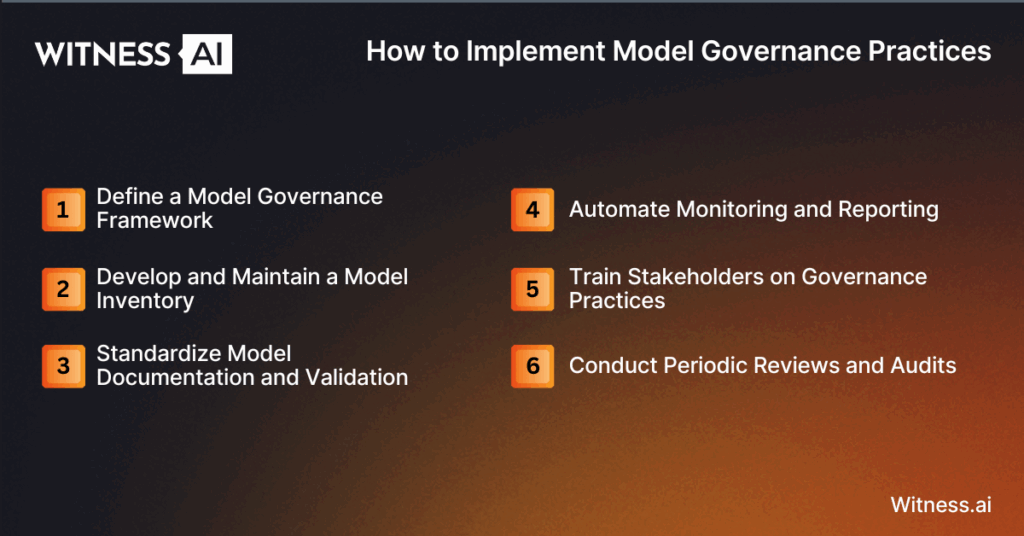

How to Implement Model Governance Practices

Establishing a robust model governance program requires a structured, phased approach:

1. Define a Model Governance Framework

Start by creating a formal policy that outlines:

- Governance objectives

- Stakeholder roles and responsibilities

- Risk tiers and oversight levels

- Approval workflows and documentation standards

2. Develop and Maintain a Model Inventory

Use a centralized platform or tool to:

- Catalog models by risk, status, and use case

- Track model lifecycle stages and owners

- Assign monitoring and validation responsibilities

3. Standardize Model Documentation and Validation

Create templates and tools for:

- Documenting algorithms, assumptions, and datasets

- Capturing validation results and performance metrics

- Performing benchmarking and back-testing

4. Automate Monitoring and Reporting

Implement real-time dashboards and alerting systems to:

- Track model performance against defined metrics

- Detect input or output drift

- Flag compliance violations or policy breaches
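
The alerting logic behind such a dashboard can start out very simple. The sketch below checks the latest metric values against a policy of minimum and maximum limits and reports each breach. The metric names, limits, and message format are assumptions for this example; a real system would route these alerts to a paging or ticketing tool.

```python
def evaluate_alerts(metrics: dict, policy: dict) -> list:
    """Return an alert message for every breached or missing metric.

    policy maps a metric name to (kind, limit). kind "min" means the value
    must stay at or above the limit; "max" means at or below it.
    """
    alerts = []
    for name, (kind, limit) in policy.items():
        if kind not in ("min", "max"):
            raise ValueError(f"unknown limit kind for {name}: {kind}")
        value = metrics.get(name)
        if value is None:
            # A metric that stops reporting is itself worth flagging.
            alerts.append(f"{name}: no value reported")
        elif kind == "min" and value < limit:
            alerts.append(f"{name}: {value} is below minimum {limit}")
        elif kind == "max" and value > limit:
            alerts.append(f"{name}: {value} is above maximum {limit}")
    return alerts


# Hypothetical policy: performance floor, drift ceiling, zero tolerated violations.
policy = {
    "auc": ("min", 0.75),
    "input_drift_score": ("max", 0.25),
    "policy_violations": ("max", 0),
}
latest = {"auc": 0.71, "input_drift_score": 0.12}

alerts = evaluate_alerts(latest, policy)
```

Keeping the limits in a policy dictionary rather than in code lets risk teams adjust thresholds per model tier without a redeploy.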

5. Train Stakeholders on Governance Practices

Conduct regular training for data scientists, business leaders, and validators to:

- Ensure consistent understanding of governance standards

- Encourage responsible model usage and decision-making

6. Conduct Periodic Reviews and Audits

Schedule independent reviews of:

- Model documentation and risk assessments

- Governance processes and policy adherence

- Real-world performance and impact assessments

This ensures continuous improvement and adaptation to evolving regulatory or market conditions.

Conclusion

In a world increasingly driven by algorithms and automation, model governance is essential for ensuring that AI and ML models are accurate, reliable, and accountable. By implementing strong governance practices—spanning inventory management, validation, monitoring, and stakeholder collaboration—organizations can optimize model performance while mitigating compliance, ethical, and operational risks.

As regulatory scrutiny intensifies and AI models become more complex, the need for effective model governance will only grow. Organizations that invest early in building a robust model governance framework will be better positioned to harness the full potential of AI technologies while maintaining trust, transparency, and control.

About WitnessAI

WitnessAI enables safe and effective adoption of enterprise AI through security and governance guardrails for public and private LLMs. The WitnessAI Secure AI Enablement Platform provides visibility into employee AI use, control of that use via AI-oriented policy, and protection of that use via data and topic security. Learn more at witness.ai.