As artificial intelligence (AI) becomes central to enterprise operations, the importance of AI red teaming grows in parallel. From identifying adversarial vulnerabilities in large language models (LLMs) to assessing bias, fairness, and data privacy risks, red teaming is now a critical component of AI system evaluations.

This article explores what AI red teaming is, how it works, and why it’s a key part of AI security and regulatory compliance in the era of generative AI (GenAI).

What Is Red Teaming in AI?

AI red teaming is a structured, adversarial testing methodology used to assess the security, safety, and reliability of AI systems. It simulates real-world attacks and misuse scenarios to uncover vulnerabilities in algorithms, training data, and system behavior.

Unlike traditional penetration testing, which focuses on finding software exploits, AI red teaming targets AI-specific weaknesses—such as prompt injection, data poisoning, and model jailbreaks—to assess the resilience of machine learning (ML) and LLM-based systems.

Red teaming originated in military and cybersecurity contexts but has evolved to address AI ecosystems where threats are more probabilistic, emergent, and context-dependent.

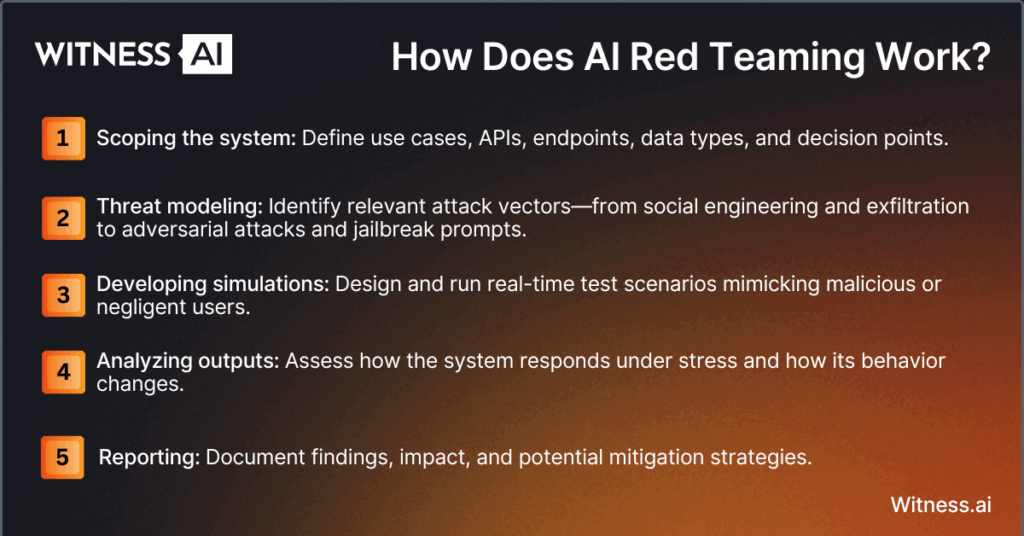

How Does AI Red Teaming Work?

AI red teaming is conducted by security teams, researchers, or third-party red teamers who simulate attacker behavior to test the system’s defenses. The process typically includes:

- Scoping the system: Define use cases, APIs, endpoints, data types, and decision points.

- Threat modeling: Identify relevant attack vectors—from social engineering and exfiltration to adversarial attacks and jailbreak prompts.

- Developing simulations: Design and run real-time test scenarios mimicking malicious or negligent users.

- Analyzing outputs: Assess how the system responds under stress and how its behavior changes.

- Reporting: Document findings, impact, and potential mitigation strategies.

This methodology may involve both manual testing and automated red teaming tools to scale testing across multiple datasets, algorithms, and LLM instances.

What Are the Benefits of AI Red Teaming?

1. Risk Identification

AI red teaming uncovers system vulnerabilities and uncovers threats such as prompt injection, training data leaks, and toxic outputs that other assessments may miss.

2. Resilience Building

By stress-testing AI models, red teaming reveals where guardrails fail, helping teams build more resilient systems that withstand real-world misuse.

3. Regulatory Alignment

AI regulations—including the EU AI Act, White House AI Executive Orders, and NIST AI Risk Management Framework—increasingly encourage or require red teaming exercises for high-risk AI applications.

4. Bias and Fairness Testing

Red teamers simulate edge cases to assess how AI systems treat different demographics, identifying bias in training data or outputs.

5. Performance Degradation Under Stress

Simulated overload or adversarial inputs can degrade model performance. Red teaming evaluates how an AI system performs under unexpected stressors or system abuse.

6. Data Privacy Violations

Red teaming probes for sensitive information exposure, particularly with LLMs that may memorize or regenerate PII from training datasets.

7. Human-AI Interaction Risks

Red teaming tests chatbots, virtual agents, and other AI interfaces for unsafe behavior in user interactions, helping improve user safety and trust.

8. Scenario-Specific Threat Modeling

From healthcare to finance, AI red teaming can simulate industry-specific threats, creating benchmarks for sector-specific use cases.

Red Team vs Penetration Testing vs Vulnerability Assessment

| Method | Focus | Best For |

| Red Teaming | Simulated adversarial scenarios | Real-world risk testing, AI misuse |

| Penetration Testing | Exploiting technical security weaknesses | Network, API, and infrastructure flaws |

| Vulnerability Assessment | Inventory of known flaws | Compliance and patch management |

Unlike the deterministic nature of vulnerability assessments, AI red teaming is in-depth, creative, and scenario-driven, often requiring domain knowledge and an understanding of AI behavior.

What Is Automated Red Teaming?

Automated red teaming refers to the use of AI-powered tools and frameworks to continuously probe AI systems for weaknesses. These tools simulate:

- Prompt injection attempts

- Jailbreak prompts

- Adversarial inputs

- Toxicity or misinformation generation

Companies like Microsoft and OpenAI are building automated red teaming platforms that combine real-time simulations, large-scale API testing, and automated output analysis for rapid feedback and continuous improvement.

This automation is essential for testing at the scale of modern AI deployments, especially for complex systems with thousands of potential input/output pathways.

Regulations for AI Red Teaming

The regulatory landscape is increasingly embracing AI red teaming as a best practice for AI safety and compliance:

- White House Executive Order (2023) mandates that developers of powerful AI systems conduct red teaming before public deployment.

- The EU AI Act requires risk assessments and stress-testing for high-risk systems, which align with red teaming goals.

- The NIST AI Risk Management Framework outlines testing, evaluation, and validation as critical to trustworthy AI.

- Industry-specific guidance (e.g., healthcare, finance) is evolving to require scenario-specific red teaming for GenAI and LLM use.

Governments, providers, and open-source ecosystems are converging on red teaming as a key layer in AI security guardrails.

Conclusion

As AI systems become more advanced and embedded in critical operations, red teaming stands out as a powerful tool for uncovering hidden threats, ensuring compliance, and building trust.

By integrating red teaming exercises into the AI development lifecycle, organizations can proactively address security risks, improve outputs, and align with emerging regulatory standards.

Enterprises deploying LLMs, generative AI, or AI APIs should treat red teaming not as a one-time assessment—but as an ongoing strategy to stay ahead of adversarial threats, data leaks, and AI misuse.

About WitnessAI

WitnessAI enables safe and effective adoption of enterprise AI, through security and governance guardrails for public and private LLMs. The WitnessAI Secure AI Enablement Platform provides visibility of employee AI use, control of that use via AI-oriented policy, and protection of that use via data and topic security. Learn more at witness.ai.