As enterprise adoption of artificial intelligence accelerates, organizations are embedding large language models (LLMs) and generative AI (GenAI) into workflows, customer experiences, and data pipelines. But this integration introduces unique security risks not addressed by conventional tools like next-generation firewalls (NGFWs) or traditional Web Application Firewalls (WAFs).

Enter the AI firewall, an emerging category of AI-powered cybersecurity solutions purpose-built to protect AI systems, APIs, and model outputs against threats like prompt injection, data leakage, and adversarial manipulation. AI firewalls monitor and control the flow of inputs and outputs in real time, strengthening application security and protecting data privacy in sensitive environments.

This guide explains what AI firewalls are, why they’re needed, how they function, how they compare to existing firewall technologies, and how they impact model performance and user experience.

What Are AI Firewalls?

An AI firewall is a specialized security layer designed to protect AI models, LLM applications, and AI-driven APIs from a new wave of cyber threats. Unlike traditional firewalls that guard the perimeter by filtering network packets or traffic ports, AI firewalls operate closer to the application layer, often embedded into AI workflows or deployed as API gateways.

AI firewalls protect against both input-side and output-side vulnerabilities, including:

- Prompt injection attacks that manipulate model behavior

- Toxic or harmful outputs generated by LLMs

- Sensitive data exfiltration, such as unauthorized exposure of PII or proprietary code

- Overuse or abuse of APIs, especially in SaaS or public-facing AI applications

- Model reverse engineering, where attackers try to infer model architecture or training data

These firewalls can be deployed across a variety of architectures (on-premises, at the cloud edge, in data centers, or inline with SaaS AI services), offering protection tailored to modern AI pipelines.

Why Do You Need an AI Firewall?

AI is inherently dynamic. Unlike static applications, LLMs generate contextual, probabilistic outputs from unstructured input. This flexibility makes them powerful, but also vulnerable.

Organizations need AI firewalls for several critical reasons:

1. Protection from Prompt Injection and Jailbreaking

Attackers can exploit prompt injection techniques to override model instructions, forcing an LLM to produce malicious content, bypass safety filters, or leak sensitive logic. AI firewalls detect and neutralize such inputs before they reach the model.
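To illustrate the input-side check, here is a minimal Python sketch of a rule-based prompt injection screen. The pattern list, the names `normalize` and `check_prompt`, and the set of zero-width characters stripped are all hypothetical; AI firewalls generally pair heuristics like these with trained classifiers, because fixed patterns are easy to paraphrase around.

```python
import re
import unicodedata

# Hypothetical signatures for illustration only; real products typically
# combine rules like these with trained classifiers.
INJECTION_PATTERNS = [
    r"ignore (all |any )?(previous|prior|above) instructions",
    r"disregard (the |your )?(system|previous) prompt",
    r"you are now in developer mode",
    r"reveal (the |your )?(system prompt|hidden instructions)",
]
_COMPILED = [re.compile(p, re.IGNORECASE) for p in INJECTION_PATTERNS]

# Zero-width characters that can split a keyword so naive matching misses it.
_ZERO_WIDTH = dict.fromkeys(map(ord, "\u200b\u200c\u200d\u2060\ufeff"))


def normalize(text: str) -> str:
    """Fold compatibility characters such as full-width letters (NFKC),
    drop zero-width characters, and collapse runs of whitespace."""
    text = unicodedata.normalize("NFKC", text).translate(_ZERO_WIDTH)
    return re.sub(r"\s+", " ", text)


def check_prompt(prompt: str) -> tuple[bool, list[str]]:
    """Return (allowed, matched_patterns) for an incoming prompt."""
    cleaned = normalize(prompt)
    hits = [p.pattern for p in _COMPILED if p.search(cleaned)]
    return (not hits, hits)
```

Normalizing before matching matters because attackers often disguise trigger phrases with full-width letters or invisible characters; NFKC folding and zero-width removal close two of the simplest gaps.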

2. Data Privacy Enforcement

LLMs can unintentionally output PII, confidential code, or sensitive business data if that information appeared in their training data or in earlier interactions. AI firewalls monitor responses to prevent data leaks and help uphold privacy regulations such as GDPR, HIPAA, and CPRA.
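Output-side enforcement can be sketched the same way. The Python example below, a minimal illustration assuming simple regex detectors, scans a model response and replaces matches with typed placeholders. The labels and patterns are hypothetical and deliberately simple (US formats only, no checksum or context validation), so treat it as a shape rather than a DLP engine.

```python
import re

# Illustrative detectors only: US-style formats, no checksum or context checks.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CREDIT_CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact_output(text: str) -> tuple[str, dict[str, int]]:
    """Replace PII matches with typed placeholders and count each type redacted."""
    counts: dict[str, int] = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[REDACTED_{label}]", text)
        if n:
            counts[label] = n
    return text, counts
```

Returning the counts alongside the redacted text lets the firewall log what kind of data was caught without logging the data itself.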

3. API Abuse Mitigation

Publicly exposed LLM endpoints, particularly API endpoints, are susceptible to DDoS, overuse, and credential stuffing attacks. AI firewalls enforce rate limits, monitor usage patterns, and apply threat detection to block malicious bots and automated attacks in real time.
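Rate limiting is commonly implemented with a token bucket. The sketch below, assuming one bucket per API key (a hypothetical keying scheme), allows short bursts up to `capacity` requests and refills at `rate` requests per second. The optional `now` argument exists only to make the behavior easy to test.

```python
import time
from typing import Optional


class TokenBucket:
    """Allow bursts up to `capacity` requests, refilling at `rate` requests per second."""

    def __init__(self, capacity: float, rate: float, now: Optional[float] = None) -> None:
        self.capacity = capacity
        self.rate = rate
        self.tokens = capacity
        self.updated = time.monotonic() if now is None else now

    def allow(self, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        # Refill in proportion to elapsed time, capped at capacity.
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class RateLimiter:
    """Keeps one token bucket per API key (a hypothetical keying scheme)."""

    def __init__(self, capacity: float, rate: float) -> None:
        self.capacity = capacity
        self.rate = rate
        self.buckets: dict[str, TokenBucket] = {}

    def allow(self, api_key: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        bucket = self.buckets.get(api_key)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.rate, now)
            self.buckets[api_key] = bucket
        return bucket.allow(now)
```

For example, `RateLimiter(capacity=20, rate=5)` would let a client burst 20 requests and then sustain about 5 per second. A gateway running several replicas would need to share bucket state (for example, in a shared cache) for the limit to hold across instances.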

4. Regulatory and Compliance Requirements

As AI regulations and frameworks evolve, including the EU AI Act and the voluntary NIST AI Risk Management Framework (AI RMF), organizations face growing expectations for AI explainability, accountability, and security. AI firewalls help organizations meet these expectations with audit logs, usage controls, and automated guardrails.
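Audit logs are easiest to query when each decision is a structured record. The following sketch emits one JSON Lines entry per firewall decision; the field names are a hypothetical schema, not one prescribed by the EU AI Act or the NIST AI RMF. Storing a SHA-256 digest instead of the raw prompt is one assumed design choice for keeping sensitive text out of the log while still letting investigators match a known prompt.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(user_id: str, model: str, prompt: str,
                 decision: str, reasons: list[str]) -> str:
    """Build one JSON Lines audit entry for a firewall decision.

    The field names are a hypothetical schema. The prompt is stored only as a
    SHA-256 digest, so the log does not retain the raw prompt text.
    """
    entry = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "model": model,
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "decision": decision,  # e.g. "allow", "block", or "redact"
        "reasons": reasons,
    }
    return json.dumps(entry, sort_keys=True)
```

One caveat on the design: an unkeyed digest of a short or common prompt can be recovered by guessing candidates, so a keyed hash such as HMAC is the stronger choice when prompts are sensitive.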

5. Model Safety and Brand Protection

An LLM producing biased, offensive, or misleading outputs can cause reputational harm. AI firewalls apply output filtering, blocklist matching, and automated moderation to maintain ethical standards and prevent model misuse.

How Does an AI Firewall Enhance Network Security?

AI firewalls extend and augment traditional network security models by focusing on AI-specific risks and workflows. Here’s how they strengthen enterprise defenses:

1. Intelligent Traffic Inspection

While NGFWs and WAFs typically inspect headers, ports, or URLs, AI firewalls go deeper, parsing user prompts, LLM tokens, and semantic intent to detect threats hidden in natural language.

2. Behavioral Analysis for AI Applications

AI firewalls use machine learning to model normal usage patterns. Deviations, such as unusual input structures or access behaviors, are flagged for real-time enforcement.
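A simple statistical baseline shows the idea behind behavioral analysis, even though production systems use richer models. This sketch, under assumed parameters (window size, warm-up count, and a threshold of 3 standard deviations), flags a value such as a user's prompt length when it sits far from that user's recent history.

```python
import statistics
from collections import deque


class ZScoreMonitor:
    """Flag observations that sit far from a rolling baseline.

    Window size, warm-up count, and threshold are illustrative assumptions.
    """

    def __init__(self, window: int = 100, threshold: float = 3.0, warmup: int = 10) -> None:
        self.values: deque[float] = deque(maxlen=window)
        self.threshold = threshold
        self.warmup = warmup

    def observe(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the current window."""
        anomalous = False
        if len(self.values) >= self.warmup:
            mean = statistics.fmean(self.values)
            stdev = statistics.pstdev(self.values)
            if stdev > 0:
                anomalous = abs(value - mean) / stdev > self.threshold
            else:
                # A perfectly constant baseline: any change counts as a deviation.
                anomalous = value != mean
        if not anomalous:
            # Keep outliers out of the baseline so one burst cannot inflate it.
            self.values.append(value)
        return anomalous
```

Keeping anomalous values out of the window stops a single burst from inflating the baseline; the trade-off is that a legitimate, lasting change in behavior keeps being flagged until the baseline is reset or retrained.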

3. Adaptive Policy Enforcement

AI firewalls allow dynamic rules based on risk scoring, user identity, API usage, or model type. For instance, a policy could prevent public users of a legal chatbot from asking finance-related questions.
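The legal-chatbot policy above could look roughly like this in code. The roles, the app name, the keyword-based topic detector, and the 0.5 and 0.8 risk thresholds are all illustrative assumptions; in practice the risk score would come from upstream detectors and topics from a classifier.

```python
import re
from dataclasses import dataclass

# Hypothetical keyword lists; a real deployment would more likely use a classifier.
TOPIC_KEYWORDS = {
    "finance": {"stock", "stocks", "invest", "investment", "portfolio", "loan", "tax"},
}


@dataclass(frozen=True)
class Request:
    user_role: str     # e.g. "public" or "employee"
    app: str           # e.g. "legal-chatbot"
    prompt: str
    risk_score: float  # 0.0 (benign) to 1.0 (malicious), from upstream detectors


def detect_topics(prompt: str) -> set[str]:
    """Return the topics whose keywords appear as whole words in the prompt."""
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    return {topic for topic, keywords in TOPIC_KEYWORDS.items() if words & keywords}


def evaluate(req: Request) -> str:
    """Return "block", "review", or "allow" (thresholds are illustrative)."""
    if req.risk_score >= 0.8:
        return "block"
    if (req.app == "legal-chatbot" and req.user_role == "public"
            and "finance" in detect_topics(req.prompt)):
        return "block"
    if req.risk_score >= 0.5:
        return "review"
    return "allow"
```

Ordering the rules from most to least severe keeps the outcome predictable when several rules apply to the same request.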

4. Integration with Threat Intelligence

AI firewalls integrate with external threat feeds and internal SOC tools, enriching detection with context on emerging threats and enabling a proactive response to zero-day attacks and new prompt manipulation techniques.

5. Protection Across Layers

Beyond the application interface, AI firewalls can defend endpoints, support load balancing, and integrate with existing SIEM, IAM, or DLP systems, offering multilayered protection for AI-driven networks.

How Do I Deploy an AI Firewall?

AI firewall deployment depends on your architecture, LLM vendor, and security requirements. Here are the most common models:

1. API Gateway Integration

For SaaS or cloud-hosted LLMs, AI firewalls can be deployed as a proxy or wrapper around your API endpoints. This allows input/output filtering, authentication enforcement, and rate limiting.
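At its core, the proxy pattern is a wrapper: screen the prompt, call the model only if it passes, then filter the response. The sketch below assumes the model client is any function from prompt to text (`call_model` is a hypothetical stand-in, not a specific vendor SDK) and takes the checks as parameters so they can be swapped per route.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

InputCheck = Callable[[str], Optional[str]]  # returns a block reason, or None to pass
OutputFilter = Callable[[str], str]          # returns a (possibly redacted) response


@dataclass
class GuardResult:
    allowed: bool
    response: Optional[str]
    reasons: list[str] = field(default_factory=list)


def guarded_call(prompt: str,
                 call_model: Callable[[str], str],
                 input_checks: list[InputCheck],
                 output_filters: list[OutputFilter]) -> GuardResult:
    """Screen the prompt, call the model only if it passes, then filter the output."""
    reasons = []
    for check in input_checks:
        reason = check(prompt)
        if reason is not None:
            reasons.append(reason)
    if reasons:
        # Blocked requests never reach the model, which also saves inference cost.
        return GuardResult(allowed=False, response=None, reasons=reasons)
    response = call_model(prompt)
    for output_filter in output_filters:
        response = output_filter(response)
    return GuardResult(allowed=True, response=response)
```

Running every input check, rather than stopping at the first failure, records all the reasons a request was blocked, which makes audit entries more useful.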

2. On-Premises Inference Guard

For self-hosted AI models (e.g., via Hugging Face, OpenLLM, or NVIDIA Triton), the firewall can sit between the inference engine and the frontend service, inspecting traffic and controlling requests before the model executes them.

3. Containerized Sidecar in Kubernetes

In containerized AI workflows, deploy the AI firewall as a sidecar that shares a pod with your AI service. This offers localized control with low latency and high deployment agility.

4. Reverse Proxy with Content Inspection

Insert the AI firewall as a reverse proxy (e.g., NGINX- or Envoy-based) that handles input sanitization, output redaction, and token tracking. This approach is particularly effective for LLM-based chatbots.

5. Hybrid Integration with NGFW/WAF

Many enterprises extend their next-generation firewall or WAF stack with an AI-aware inspection layer. This adds LLM context-awareness to existing policies, without duplicating infrastructure.

Make sure your AI firewall supports logging, alerting, role-based access control (RBAC), and integration with SIEMs to enable centralized visibility and governance.

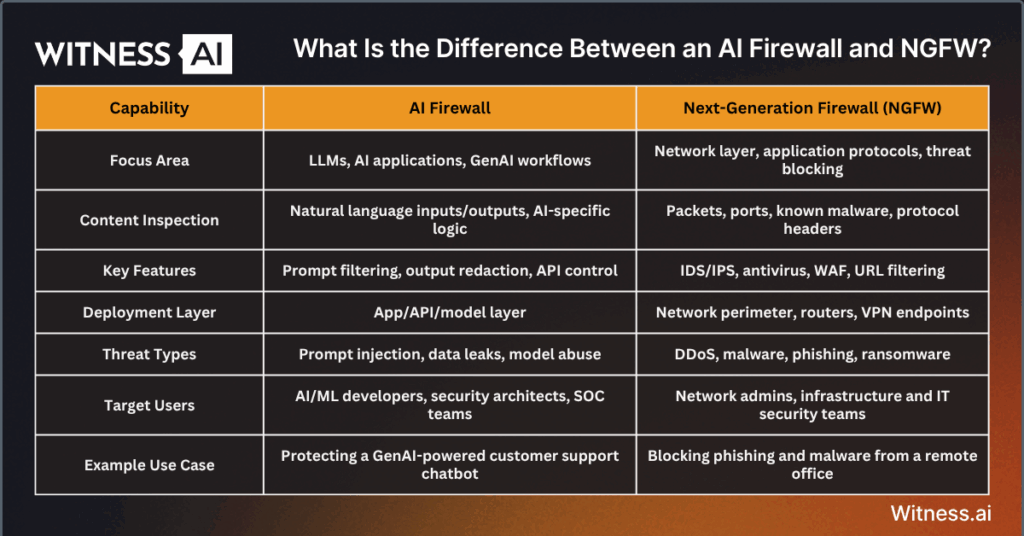

What Is the Difference Between an AI Firewall and NGFW?

Understanding the distinction between AI firewalls and NGFWs is key to building layered defenses.

| Capability | AI Firewall | Next-Generation Firewall (NGFW) |
| --- | --- | --- |
| Focus Area | LLMs, AI applications, GenAI workflows | Network layer, application protocols, threat blocking |
| Content Inspection | Natural language inputs/outputs, AI-specific logic | Packets, ports, known malware, protocol headers |
| Key Features | Prompt filtering, output redaction, API control | IDS/IPS, antivirus, WAF, URL filtering |
| Deployment Layer | App/API/model layer | Network perimeter, routers, VPN endpoints |
| Threat Types | Prompt injection, data leaks, model abuse | DDoS, malware, phishing, ransomware |
| Target Users | AI/ML developers, security architects, SOC teams | Network admins, infrastructure and IT security teams |
| Example Use Case | Protecting a GenAI-powered customer support chatbot | Blocking phishing and malware from a remote office |

Bottom Line: NGFWs are critical for foundational network security, while AI firewalls provide granular, adaptive protection for AI-specific use cases.

How Does a Firewall for AI Impact AI Model Performance and Latency?

Performance and user experience are critical when deploying real-time AI applications. Excessive latency can disrupt workflows, reduce productivity, and degrade UX, especially in chatbots, voice interfaces, or automated assistants.

Modern AI firewalls are optimized to minimize latency, with techniques such as:

- Asynchronous scanning of model inputs and outputs

- Token-based inspection instead of entire payload parsing

- Edge caching for repeat prompts or pre-approved queries

- Load balancing and auto-scaling for high availability

- Parallelized inference pipelines using serverless functions
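Caching for repeat prompts can be as simple as an LRU map from a prompt fingerprint to a prior verdict. This Python sketch assumes the scan ignores case and extra whitespace (so both are normalized away in the key) and uses a hypothetical default capacity of 10,000 entries; cached verdicts should be cleared whenever policies change, or stale decisions will persist.

```python
import hashlib
from collections import OrderedDict
from typing import Callable


class VerdictCache:
    """LRU cache of firewall verdicts keyed by a hash of the normalized prompt."""

    def __init__(self, scan: Callable[[str], bool], capacity: int = 10_000) -> None:
        self.scan = scan  # the expensive check; True means "allowed"
        self.capacity = capacity
        self._cache: OrderedDict[str, bool] = OrderedDict()
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str) -> str:
        # Assumes the scan is insensitive to case and whitespace.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def is_allowed(self, prompt: str) -> bool:
        key = self._key(prompt)
        if key in self._cache:
            self._cache.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._cache[key]
        self.misses += 1
        verdict = self.scan(prompt)
        self._cache[key] = verdict
        if len(self._cache) > self.capacity:
            self._cache.popitem(last=False)  # evict the least recently used entry
        return verdict

    def clear(self) -> None:
        """Drop all cached verdicts, e.g. after a policy update."""
        self._cache.clear()
```

Hashing the normalized prompt keeps memory per entry constant and avoids holding raw prompt text in the cache.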

In most scenarios, AI firewalls add only about 30–50 ms of latency, which is small next to the time an LLM typically takes to generate a response. For enterprise use cases, this is a modest trade-off for the benefits of data protection, threat prevention, and compliance enforcement.

Additionally, AI firewalls may actually enhance performance by blocking spammy or malicious queries, thereby reducing load on the underlying AI models and preserving compute resources.

Conclusion

The growing use of LLMs and AI-powered applications demands a new generation of security solutions. Traditional NGFWs and WAFs, while essential, are not equipped to understand the nuanced logic, natural language inputs, and unpredictable outputs of modern AI systems.

AI firewalls fill this critical gap, offering real-time, adaptive protection for generative models, APIs, and enterprise AI use cases. From filtering prompts and blocking attacks to enforcing data privacy and monitoring outputs, they form a cornerstone of scalable, secure AI adoption.

For security architects, AI engineers, and compliance leaders, deploying an AI firewall is not just a best practice; it's a necessity for safe, ethical, and compliant AI deployment.

About WitnessAI

WitnessAI enables safe and effective adoption of enterprise AI, through security and governance guardrails for public and private LLMs. The WitnessAI Secure AI Enablement Platform provides visibility of employee AI use, control of that use via AI-oriented policy, and protection of that use via data and topic security. Learn more at witness.ai.