Artificial intelligence (AI) is transforming nearly every industry, from healthcare and finance to transportation and national defense. Yet, with these revolutionary advancements come significant concerns. As AI capabilities expand, so do the associated risks. From loss of privacy and biased algorithms to the emergence of rogue AI systems and weaponized misinformation, the potential dangers are wide-ranging—and increasingly urgent.

This article explores the landscape of AI risks, unpacking both current and future threats, their societal and organizational impacts, and the actions needed to mitigate them.

What Is Artificial Intelligence?

Artificial intelligence refers to the development of machines and systems that can simulate human intelligence. These systems perform tasks such as recognizing speech, making decisions, understanding language, and identifying patterns. AI is built on subfields like machine learning, deep learning, and natural language processing, often powered by massive datasets and trained through algorithms.

Prominent AI technologies include generative AI (e.g., ChatGPT), large language models (LLMs), facial recognition software, AI-driven automation tools, and predictive analytics systems.

While AI promises tremendous efficiencies and innovation, it also introduces complex challenges that require proactive management, ethical scrutiny, and regulatory oversight.

What Are the Risks of AI?

The risks of AI arise from both its design and its deployment. As AI becomes more advanced, it is increasingly entrusted with real-world decisions that affect privacy, safety, fairness, and security.

Key AI risks include:

- Loss of control over autonomous systems

- Misuse by malicious actors

- Biases embedded in training data

- Lack of human oversight

- Amplification of disinformation

- Erosion of intellectual property

- Socioeconomic disruption, including job loss

These risks of AI are no longer hypothetical—they are already emerging in industries, governments, and digital ecosystems around the globe.

How Can AI Pose a Threat to Privacy?

AI’s ability to analyze vast amounts of personal data in real-time creates serious concerns about data privacy. Many AI-powered tools rely on surveillance, monitoring, and behavioral prediction. In sectors like advertising, social media, and healthcare, AI systems can track individuals, infer private information, and make sensitive decisions without consent.

Privacy Threats Include:

- Facial recognition used without authorization

- Predictive policing based on biased historical data

- Profiling users based on their online activity

- Leakage of personal data through AI-generated content

- Inferencing sensitive traits from anonymized data

Without proper safeguards, AI undermines privacy rights and opens the door to mass surveillance, identity theft, and data misuse.

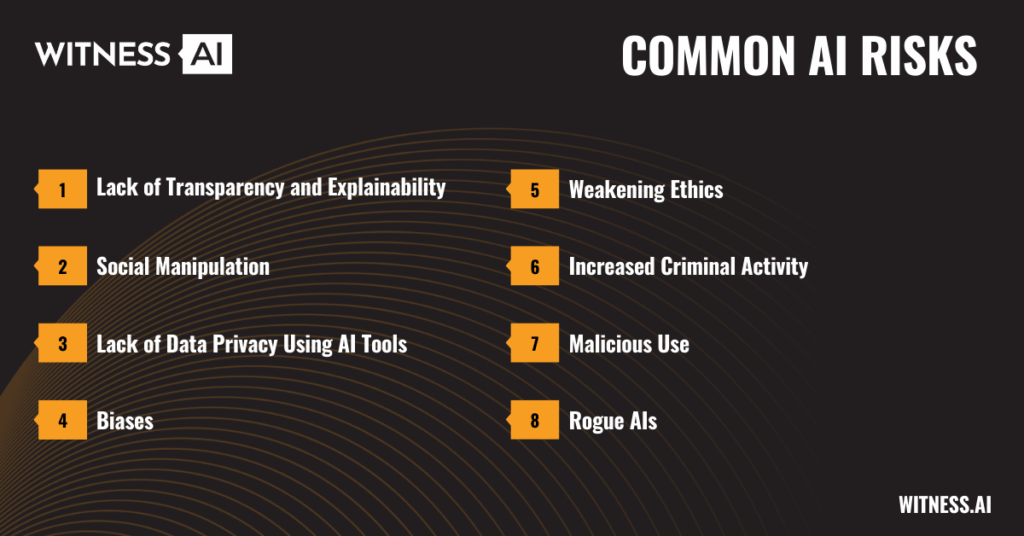

What Are Some Common AI Risks?

1. Lack of Transparency and Explainability

Many AI systems, particularly deep learning models, are opaque—making it difficult to understand how they reach specific outputs. This “black box” nature of AI models poses risks in decision-making, especially in healthcare, criminal justice, and finance.

2. Social Manipulation

AI-driven recommendation engines can be exploited to manipulate public opinion. AI can personalize and amplify misinformation, destabilize elections, or polarize communities through algorithmic bias on social media platforms.

3. Lack of Data Privacy Using AI Tools

AI tools often require access to training data that includes sensitive information. Poorly secured models may leak this information, leading to violations of data protection laws.

4. Biases

Bias in AI stems from unrepresentative or prejudiced datasets, reinforcing existing social inequalities. These biases are particularly damaging in hiring algorithms, lending decisions, and legal risk assessments.

5. Weakening Ethics

AI systems may make decisions based on utility rather than ethical principles, raising questions about human values, dignity, and fairness. This is especially problematic as AI is deployed in morally sensitive areas such as elder care and war.

6. Increased Criminal Activity

Cybercriminals are adopting AI technologies to enhance phishing, cyberattacks, and fraud. AI can also generate convincing deepfakes to deceive users or commit identity theft.

7. Malicious Use

Malicious actors can weaponize AI for surveillance, autonomous drone attacks, or large-scale disinformation campaigns. Generative AI lowers the barrier to creating harmful content at scale.

8. Rogue AIs

There is a growing concern about advanced AI systems that might evolve goals misaligned with human intent—leading to existential risks. AI researchers warn that without strict alignment mechanisms, AI development could spiral beyond human control.

What Are Some Future AI Risks?

Looking ahead, the convergence of automation, robotics, and advanced AI systems presents several high-impact threats:

- Superintelligent AI surpassing human cognition and becoming uncontrollable

- Escalating arms races in military AI among global powers

- Loss of human agency in decision-making as AI tools become default authorities

- Pandemics or economic shocks triggered by automated systems acting on flawed models

- Emergence of autonomous, self-replicating AI with unintended consequences

- AI-driven unemployment across sectors due to widespread automation

Many of these scenarios still feel like science fiction, but experts urge preemptive governance to mitigate catastrophic outcomes.

AI Risks in Cybersecurity

AI is a double-edged sword in cybersecurity. While it enables rapid threat detection and response, it also creates novel vulnerabilities.

Threats Include:

- AI-generated malware that adapts to defenses

- Chatbots used for automated social engineering

- Exploitation of AI models through prompt injection and data poisoning

- Real-time cyberattacks orchestrated by autonomous agents

- Use of AI in orchestrating supply chain attacks

Risk management strategies must evolve to detect and defend against AI-enabled threats before they escalate.

Organizational Risks

Businesses deploying AI face internal and external risks of AI, including:

- Reputational damage from biased or unsafe deployments

- Legal liability for harmful AI outputs

- IP theft through AI-generated content trained on copyrighted materials

- Loss of control over proprietary data in cloud-based learning models

- Employee misuse of openAI and LLMs without proper governance

- Dependence on third-party AI capabilities without clear accountability

Without AI governance, these risks can compound into large-scale failures that affect customer trust, legal compliance, and financial health.

How to Protect Against AI Risks

Addressing AI risks requires a multilayered approach:

1. Develop Legal Regulations

Policymakers must enact and enforce regulations that ensure AI safety, fairness, and accountability. These could include:

- Mandatory audits of high-risk AI systems

- Disclosure requirements for AI-generated content

- Privacy standards for data used in training data

- Bans on certain AI tools (e.g., autonomous weapons)

2. Establish Organizational AI Standards and Discussions

Companies should adopt internal frameworks to manage AI development responsibly. Key components include:

- Transparent algorithms

- Ethics review boards for AI use cases

- Continuous monitoring of outputs and decisions

- Regular bias and security testing

- Cross-functional initiatives involving legal, IT, HR, and operations

- Documentation of AI capabilities, limitations, and explainable logic

A culture of responsible AI adoption helps build trust, reduce vulnerabilities, and foster innovation safely.

How Can AI Development Be Regulated to Minimize Risks?

Effective regulation should balance innovation with security. Leading strategies for regulating AI development include:

- Creating international AI safety standards

- Investing in AI researchers focused on alignment and control

- Mandating human oversight in high-risk deployment of AI

- Requiring licenses for training and releasing large-scale LLMs

- Promoting transparency in how AI models are trained and tested

- Encouraging open-source auditing of commercial AI tools

Governments, industries, and civil society must collaborate to ensure that technological advancements do not outpace ethical safeguards.

Conclusion: Facing the Future of AI Risks Responsibly

Artificial intelligence represents a monumental leap in human ingenuity, yet it also brings unprecedented dangers. From compromised cybersecurity to rogue AI-driven systems, the stakes are high. As we integrate AI into every aspect of life, the challenge is not to slow innovation—but to make it secure, ethical, and aligned with humanity’s values.

Now is the time for policymakers, organizations, and technologists to take meaningful steps to safeguard our future from the potential dangers of AI systems. Through careful risk management, strategic regulation, and a commitment to responsible innovation, we can steer the AI ecosystem toward a path of trust, safety, and benefit for all.

Learn More: AI Risk Management: A Structured Approach to Securing the Future of Artificial Intelligence

About WitnessAI

WitnessAI enables safe and effective adoption of enterprise AI, through security and governance guardrails for public and private LLMs. The WitnessAI Secure AI Enablement Platform provides visibility of employee AI use, control of that use via AI-oriented policy, and protection of that use via data and topic security. Learn more at witness.ai.