What is LLM Observability?

LLM observability refers to the practice of collecting, analyzing, and visualizing key metrics and signals from large language models (LLMs) to ensure their performance, accuracy, and reliability across various AI applications. Much like traditional application observability, LLM observability provides visibility into the internal workings of LLM apps, enabling teams to debug issues, monitor model behavior, and optimize the user experience.

LLM observability covers:

- Model outputs: Capturing and evaluating LLM responses.

- Performance metrics: Monitoring latency, throughput, and resource usage.

- User interactions: Understanding prompt inputs and user feedback.

- Model pipelines: Tracing the flow of data across RAG (retrieval-augmented generation) workflows and orchestration layers like LangChain or LlamaIndex.

By embedding observability into your LLM workflows, you gain actionable insights to improve LLM performance, enhance interpretability, and support cost management.

How Does LLM Observability Work?

LLM observability involves instrumenting LLM applications and integrating observability tools to track real-time data, events, and metrics throughout the LLM lifecycle. Here’s how it typically functions:

- Instrumentation: Developers add observability hooks using SDKs or libraries (e.g., OpenTelemetry, Arize, Datadog, or open-source LLM observability tools on GitHub).

- Data Collection: The system gathers telemetry data like token usage, response times, embedding performance, and model outputs.

- Data Storage: Captured data is stored for analysis, often using cloud-native solutions or embedded dashboards.

- Visualization & Dashboards: Real-time dashboards show API latency, root cause breakdowns, and usage metrics to identify bottlenecks.

- Debugging & Alerting: Observability tools support root cause analysis, issue detection, and automated alerts for LLM anomalies.

Why is LLM Observability Needed?

As generative AI becomes more integrated into enterprise systems, observability is no longer optional. Key reasons for adopting LLM observability include:

- Troubleshooting & Debugging: Quickly identify causes of poor LLM responses, API failures, or hallucinations.

- Application Performance Monitoring: Track latency and throughput to ensure seamless user interactions.

- Data Security & Governance: Detect prompt injection, sensitive data exposure, or non-compliant LLM behavior.

- Model Optimization: Continuously evaluate fine-tuned models and track model drift over time.

- Cost Control: Monitor token usage and optimize pricing models across different LLM providers (e.g., OpenAI, Anthropic).

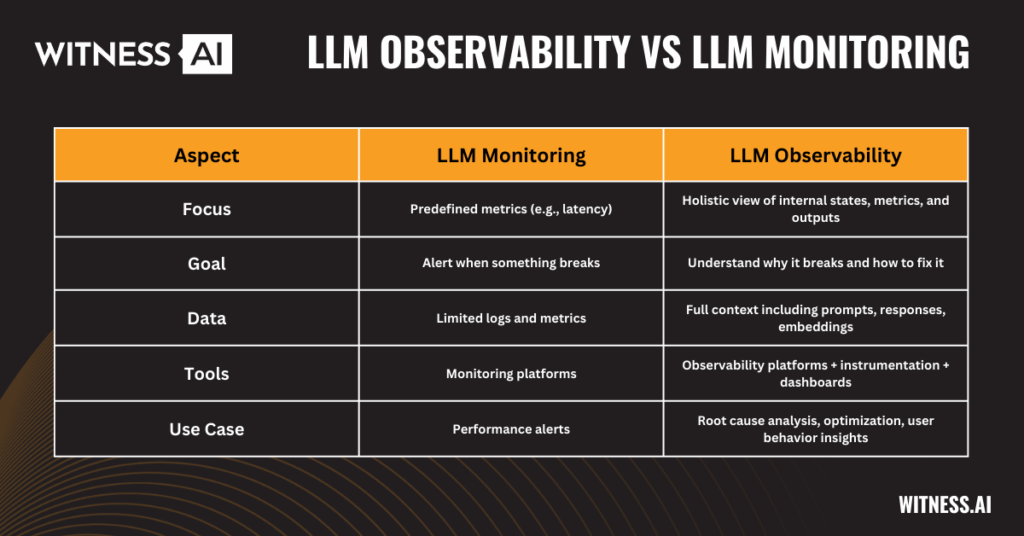

LLM Observability vs LLM Monitoring

While often used interchangeably, LLM observability and LLM monitoring differ in scope:

| Aspect | LLM Monitoring | LLM Observability |

| Focus | Predefined metrics (e.g., latency) | Holistic view of internal states, metrics, and outputs |

| Goal | Alert when something breaks | Understand why it breaks and how to fix it |

| Data | Limited logs and metrics | Full context including prompts, responses, embeddings |

| Tools | Monitoring platforms | Observability platforms + instrumentation + dashboards |

| Use Case | Performance alerts | Root cause analysis, optimization, user behavior insights |

Observability complements monitoring by enabling a deeper understanding of how LLM applications behave under real-world conditions.

What Can You Expect From an LLM Observability Solution?

A robust observability solution for LLMs should provide:

- Real-time metrics: API latency, throughput, and token usage.

- Prompt & response tracking: Full visibility into user inputs and LLM responses.

- Embedding analytics: Analyze vector search accuracy and RAG performance.

- Bias detection: Identify problematic or non-inclusive outputs.

- Drift monitoring: Track changes in model behavior across versions or datasets.

- Dashboards & alerts: Customizable visualizations and automated alerting.

- Open-source integration: Support for tools like LangChain, LlamaIndex, and Python SDKs.

- Security and compliance features: Data loss prevention, access control, and audit logging.

LLM Observability for Your Organization

Data Loss Prevention

Observability solutions help enforce data protection policies across LLM applications. By analyzing prompts and outputs in real time, you can detect leaks of sensitive information, such as PII or proprietary business data, ensuring compliance with enterprise policies and regulations.

Detect Insider Threats

LLM observability provides behavioral analytics to detect anomalous usage patterns, such as excessive querying, prompt engineering attempts, or misuse of generative AI for data exfiltration. These insights can serve as early warnings of insider threats.

Uncover Shadow AI Usage

Shadow AI refers to unsanctioned LLM apps or API usage outside IT governance. With observability in place, organizations gain visibility into rogue tools, helping to mitigate risks and consolidate genAI usage into sanctioned platforms.

LLM Observability for Your Models

Detect Bad Prompts & Responses

Poorly designed prompts and toxic or inaccurate LLM responses can damage user trust and application integrity. Observability tools log and flag these cases, allowing teams to refine templates and apply guardrails for prompt injection or misuse.

Monitor Drift, Bias, Performance, & Data Integrity Issues

LLM models evolve through fine-tuning, updates, or changes in input datasets. Observability helps track:

- Model drift: Degradation of output quality over time.

- Biases: Inconsistent behavior across demographics.

- Performance metrics: Latency, failure rates, and token usage.

- Data integrity: Errors in training or RAG sources affecting response quality.

Enhance LLM Response Accuracy

Accuracy is critical in enterprise LLM applications, from chatbots to backend data processing. By correlating user feedback, ground truth datasets, and evaluation metrics, observability tools enable continuous tuning and testing to boost output quality.

Best Practices for Implementing LLM Observability

To maximize the value of observability in generative AI systems:

- Instrument early: Integrate observability into your LLMops pipelines from the start.

- Use structured templates: Standardize prompt formats to make tracing and debugging easier.

- Leverage open-source tools: Explore GitHub projects and SDKs that support Python, LangChain, and LlamaIndex.

- Centralize dashboards: Consolidate metrics and visualization in tools like Arize, Datadog, or custom OpenTelemetry backends.

- Evaluate continuously: Run periodic benchmarks, track evaluation metrics, and involve human review for key LLM outputs.

Conclusion

LLM observability is essential for building secure, high-performing, and cost-effective generative AI applications. By combining structured instrumentation, real-time monitoring, and actionable insights, organizations can gain full visibility into their LLM workflows and models. This visibility empowers teams to debug faster, optimize user experiences, protect sensitive data, and ensure long-term model performance.

About WitnessAI

WitnessAI enables safe and effective adoption of enterprise AI, through security and governance guardrails for public and private LLMs. The WitnessAI Secure AI Enablement Platform provides visibility of employee AI use, control of that use via AI-oriented policy, and protection of that use via data and topic security. Learn more at witness.ai.